“sh: next: command not found” in Next.js Development? Here’s the Fix!

Struggling with the "sh: next: command not found" error in your Next.js project? Learn how to fix it by...

Introduction to Generative AI

Imagine a tool that can create entirely new and original content, from captivating poems to never-before-seen images. That’s the...

How to uninstall Ollama

Looking to uninstall Ollama from your system? Follow these simple steps to bid it farewell and clean up your...

Top 5 Community Tools Every DevRel Should Have for Building a Thriving Developer Community

Discover the top 5 community tools every DevRel needs! From community management platforms to content creation tools, this guide...

Begin Your Java Journey with BlueJ: A Beginner-Friendly Tool for Coding Success

Learn Java with BlueJ, a beginner-friendly tool! Dive into Java programming with a simple interface, visual objects, and interactive...

How AI Integration in IoT Transforms Inventory Management

Discover how integrating AI with IoT is revolutionizing inventory management, leading to enhanced efficiency, cost savings, and a competitive...

Best Practices For Securing Containerized Applications

Discover the best practices for securing containerized applications in this guide. Learn how to protect your digital assets from...

Best Possible Ways to Recreate Content

Struggling to recreate content? This guide will help you revitalize your old content with ease. Learn how to add...

All Stories

Using ChatGPT to Build an Optimised Docker Image using Docker Multi-Stage Build

GPT (short for “Generative Pre-trained Transformer”) is a type of language model developed by OpenAI. OpenAI is a nonprofit organisation based...

How to install and Configure NVM on Mac OS

nvm (Node Version Manager) is a tool that allows you to install and manage multiple versions of Node.js on your Mac....

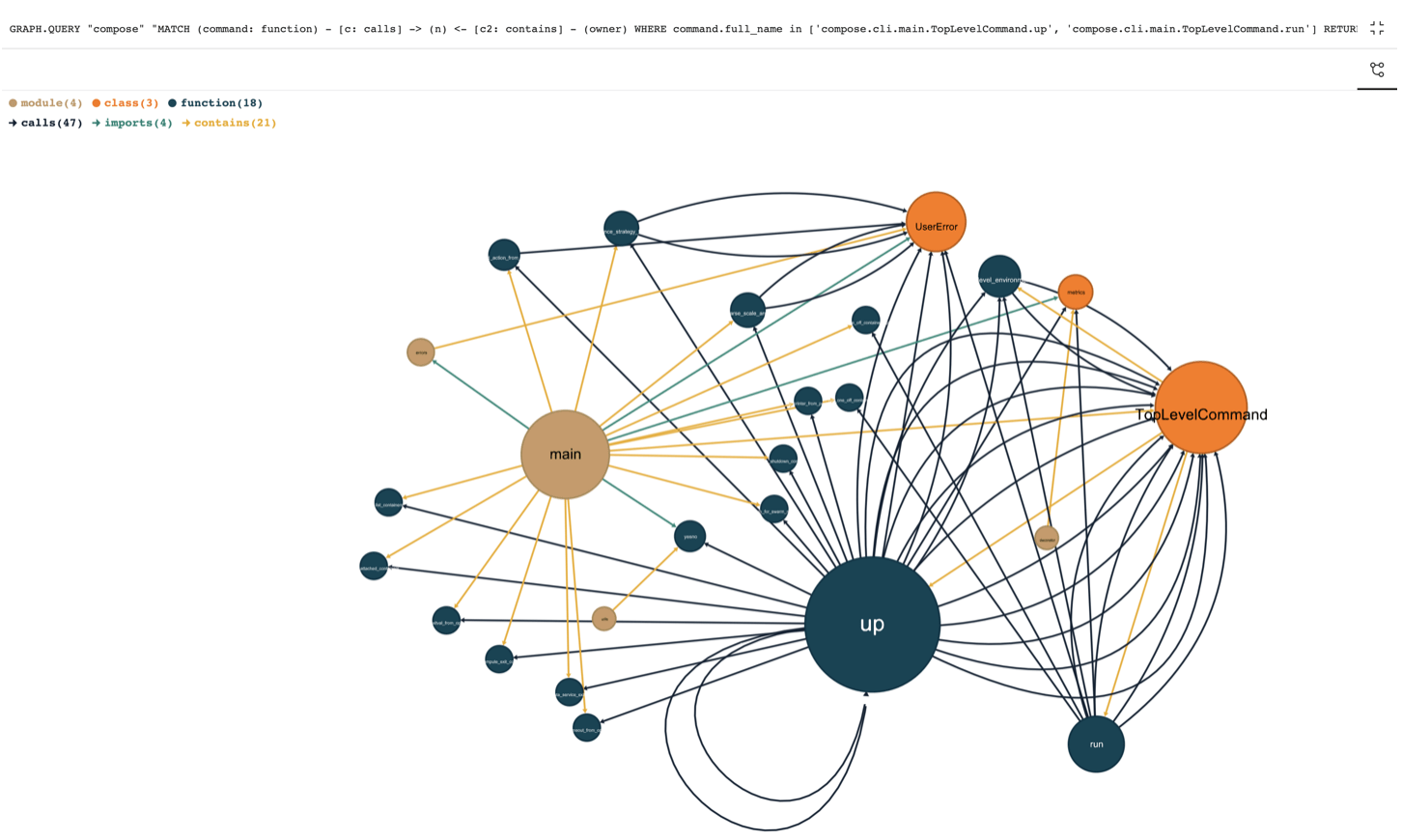

5 Minutes to Memgraph using Docker Extension

Memgraph is a high-performance, distributed in-memory graph database. It is designed to handle large volumes of data and complex queries, making...

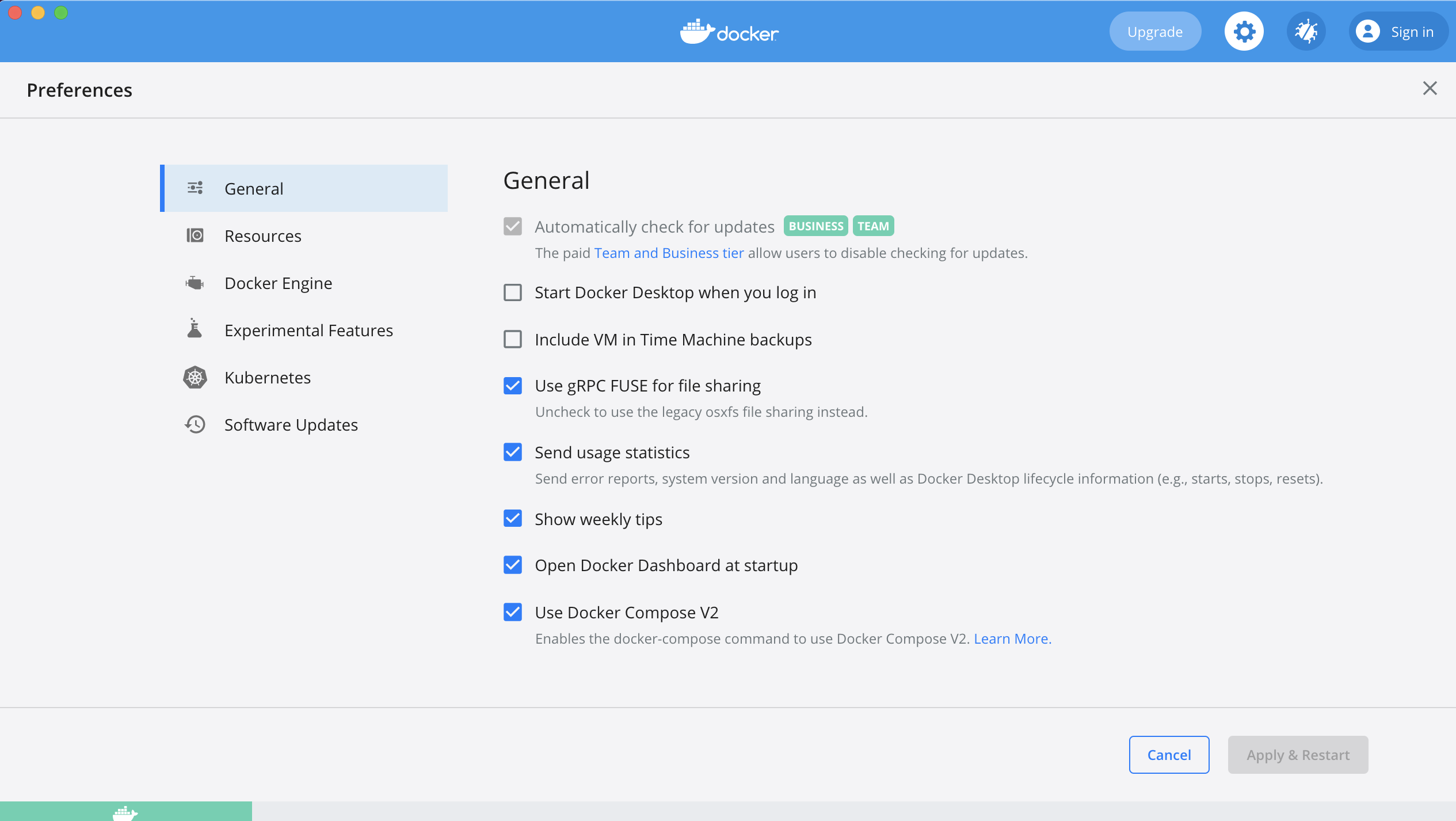

What’s New in Docker Desktop 4.15?

Docker Desktop 4.15 is now available for all platforms – Linux, Windows and macOS. It comes with Docker Compose v2.13.0, Containerd v1.6.10 and Docker Hub...

How to Build & Push Helm Chart to Docker Hub flawlessly

Learn how to build and push a Helm chart to Docker Hub using Docker Desktop.

How to Install the latest version of Docker Compose on Alpine Linux (in 2022)

Docker Compose V2 is the latest Compose version; it went GA earlier this year, on April 26, 2022. Compose V2 is...

Install and Configure GitLab Runner on Kubernetes using Helm

GitLab Runner is a tool that helps run jobs and send the results back to GitLab. It is often used in...

HubScraper: A Docker Hub Scraper Tool built using Python, Selenium and Docker

Web scraping has become increasingly popular in recent years, as businesses try to stay competitive and relevant in the ever-changing...

Deploy Apache Kafka on Kubernetes running on Docker Desktop

Event-driven architecture is the basis that most modern applications follow. When one event happens, it triggers other events. In the...

Fluentd – An Open-Source Log Collector

With 11,600 GitHub stars and 1,300 forks, Fluentd is an open-source data collector for a unified logging layer. It is a cross-platform...

9 Best Docker and Kubernetes Resources for All Levels

If you’re a developer hunting for Docker and Kubernetes-related resources, then you have finally arrived at the right place. Docker is a...

Getting Started with Docker and Kubernetes on Raspberry Pi

Raspberry Pi OS (previously called Raspbian) is an official operating system for all models of the Raspberry Pi. We will be...

Cloud-Native Development For Higher Education: Why Cloud-Native Is Key For Schools

As per the latest CNCF report, more than 70 percent of companies in the US are focusing on cloud-native apps. What’s...

How to Import CSV data into Redis using Docker

CSV stands for “comma-separated values”. A CSV file is a simple text file in which the fields of each data record are separated by commas or...

5 Steps to Getting Your First Job as a Web Developer

Every developer you know was once like you, a newbie in web development not knowing how to navigate their way in...

Integrated Search Capability in Docker Desktop to accelerate the Modern Application Development

MongoDB is a popular document-oriented database used to store and process data in the form of documents. In my last blog post,...

How to Dockerize Machine Learning Applications Built with Streamlit and Python

Customer churn is a million-dollar problem for businesses today. The SaaS market is becoming increasingly saturated, and customers can choose from...

Influence of IT on Modern Education: How DevOps Is Transforming Higher Education

When implemented correctly, DevOps has the potential to revolutionize education. A significant number of educators are making an effort to implement...

Venturing into the world of Developer Experience (DX) | Docker Extension

Submit Your Extension

5 Important Rules for Learning Programming in 2022

Programming knows no boundaries in its development because every day, more and more companies need websites, CRM systems, and much more...

Celebrating Hacktoberfest with Docker Extensions

This post was originally posted by the author on Docker DEVCommunity site. Community is at the heart of what Docker does....

What is ArgoCD?

Argo CD is a tool that reads your environment configuration from your Git repository and applies it to your Kubernetes...

Scaling DevOps to the Edge – Open Source Community Conference (OSCONF)

Learn how to scale DevOps to the Edge

What are Kubernetes Pods and Containers? – KubeLabs Glossary

Why does Kubernetes use a Pod as the smallest deployable unit, and not a single container? While it would seem simpler...

Using Blockchain to Drive Supply Chain Management System

It is no exaggeration to say that blockchain is among the most promising emerging technologies yet devised. Any business can benefit...

A First Look at Docker Desktop for Linux

Download Docker Desktop for Linux

Top 50 Essential Docker Developer Tools You Must be Aware Of

Access to Docker Developer Tools

How To Secure Kubernetes Clusters In 7 Steps

Kubernetes is now the most widely used container orchestration platform. With the growing adoption of containers and container orchestrators...

How To Use & Manage Kubernetes DaemonSets? – KubeLabs Glossary

Join Collabnix Community Slack

How to Connect to Remote Docker using docker context CLI

Listing the containers, switching to the new context, listing all contexts...

Dockerize an API based Flask App and Redis using Docker Desktop

If you’re a developer looking out for a lightweight but robust framework that can help you in web development with fewer...

Creating Your First React app using Docker Desktop

React is a JavaScript library for building user interfaces. It makes it painless to create interactive UIs. You can design...

Docker Desktop 4.7.0 introduces the SBOM for Docker Images for the first time

Today, it’s hard to find any software built from scratch. Most applications built today use a combination of...

Unveiling Docker: The Revolutionary Tech Ruling the Digital World

Docker: Unleashing the Digital Titan of the Tech World

How to Copy files from Docker container to the Host machine?

By utilizing DockerLabs, you can acquire knowledge on Docker and gain entry to over 300 tutorials on Docker designed for novices,...

The Rise of Low-Code/No-Code Application Platforms

Today, organizations are experiencing a shortage of software developers, and it is expected to hit businesses hard in 2022. Skilled developers...

Awesome-Compose: What It is and Why You Should Really Care About It

With over 14,700 stars, 2,000 forks, awesome-compose is a popular Docker repository that contains a curated list of Docker Compose samples. It helps...

Top Kubernetes Tools You Need for 2022 – Popeye

Thanks to Collabnix community members Abhinav Dubey and Ashutosh Kale for all the collaboration and contribution towards this blog post series....

5 Raspberry Pi Projects That Will Boost Your Child’s Coding Skills

The Raspberry Pi is a powerful computer despite its size. This portable device comes with a CPU based on the Advanced RISC...

Getting Started with Argo CD on Docker Desktop

DevOps is a way for development and operations teams to work together collaboratively. It is basically a cultural change. Organizations adopt...

Demystifying Kubernetes in less than 100 slides

Did you know? There are 5.6 million developers using Kubernetes worldwide, representing 31% of all backend developers. 2022 will be an...

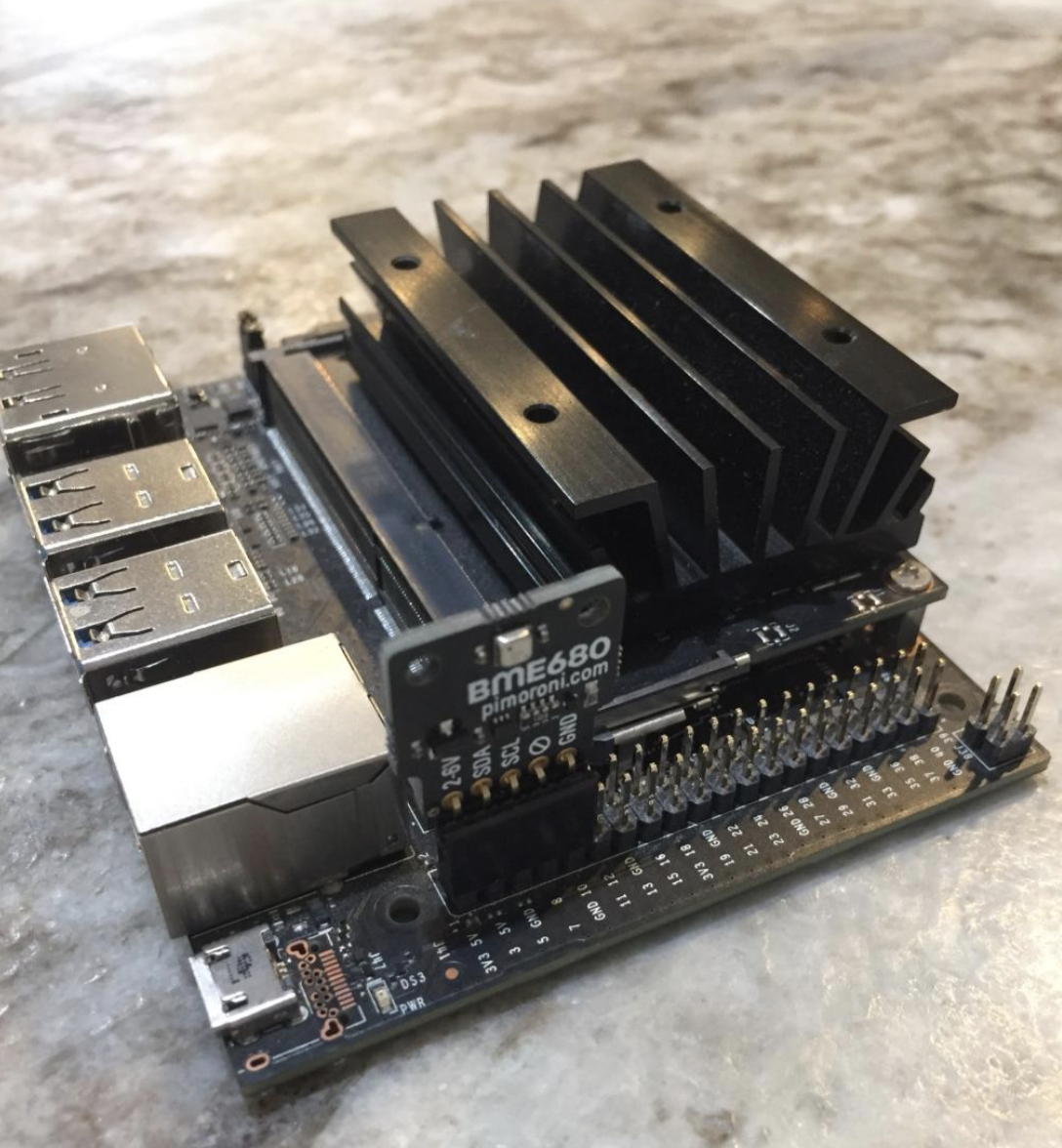

Sensor Data Collection and Analytics – From IoT to Cloud in 5 Minutes

The BME680 is a digital 4-in-1 sensor with gas, humidity, pressure, and temperature measurement based on proven sensing principles. The state-of-the-art...

How to Deploy a Stateful Application using Kubernetes? – KubeLabs Glossary

A StatefulSet is a Kubernetes controller that is used to manage and maintain one or more Pods. However, so do other controllers like...

The Rising Pain of Enterprise Businesses with Kubernetes

As enterprises accelerate digital transformation and adopt the Kubernetes ecosystem, their businesses are experiencing growing pains in multiple domains. As per...

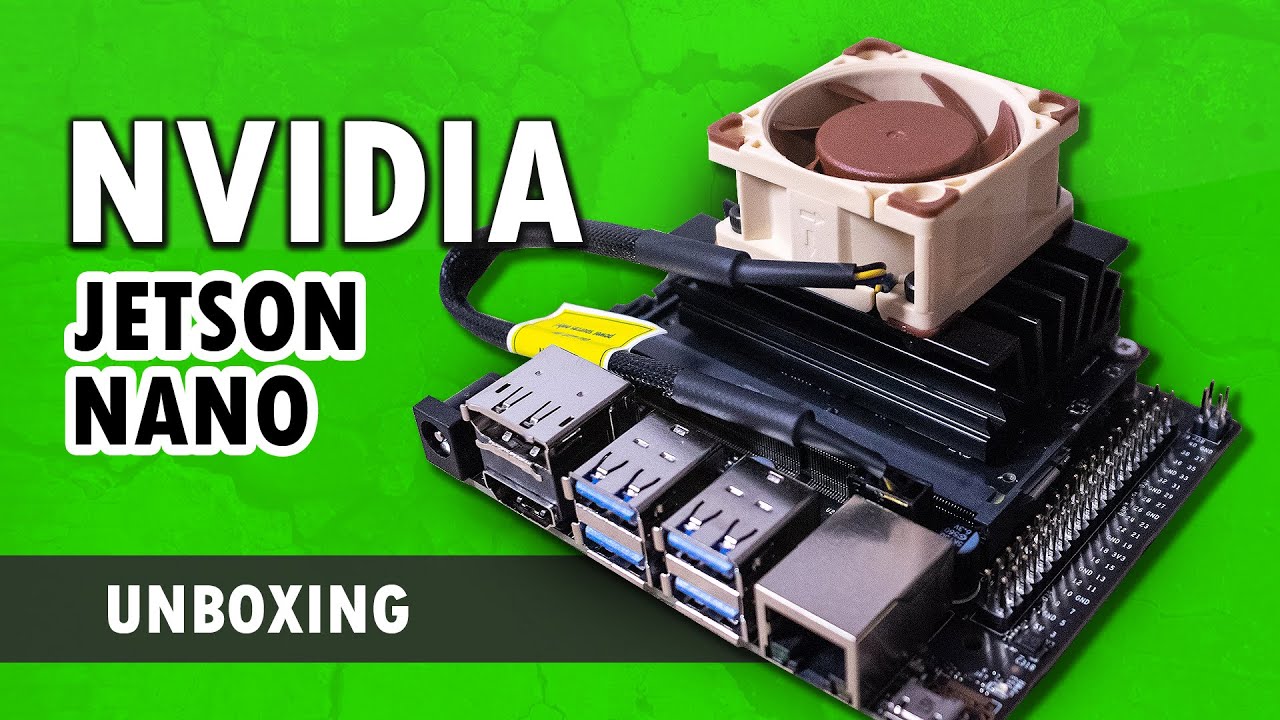

How to Boot NVIDIA Jetson Nano from USB instead of SD card

Booting from an SD card is the traditional way of running the OS on the NVIDIA Jetson Nano 2GB model, and it works...

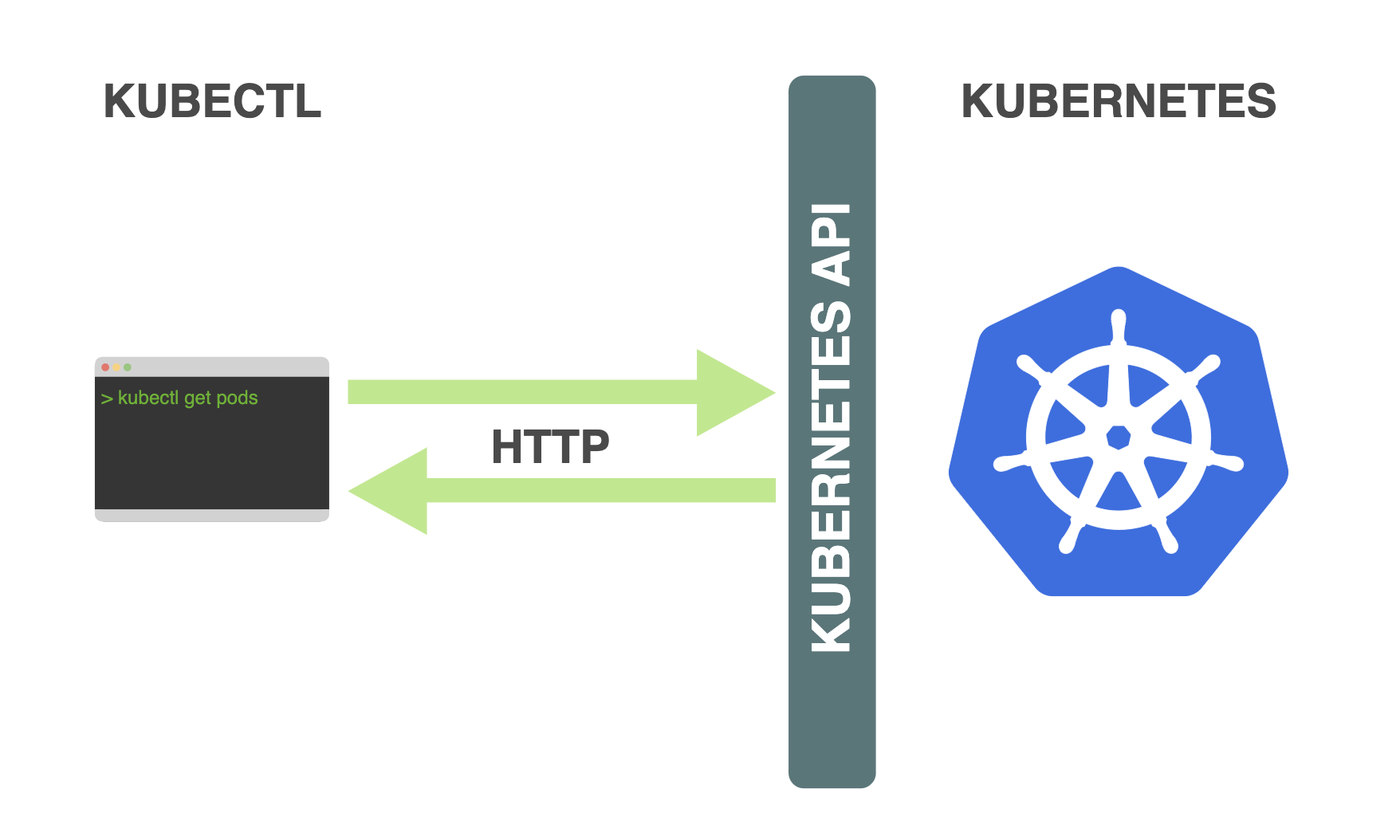

A Quick Look at the Kubernetes API Reference Docs and Concepts

The core of Kubernetes’ control plane is the API server and the HTTP API that it exposes. The Kubernetes API is the...

How to setup and run Redis in a Docker Container

Redis stands for REmote DIctionary Server. It is an open source, fast NoSQL database written in ANSI C and optimized for speed. Redis is an...

Top 25 Active Chatrooms for DevOps Engineers like You

As the tech stacks are becoming more and more complex and business is moving at a fast pace, DevOps Teams worldwide...

How to build a Sample Album Viewer application using Windows containers

This tutorial walks you through building and running the sample Album Viewer application with Windows containers. The Album Viewer app is an ASP.NET...

How to efficiently scale Terraform infrastructure?

Terraform infrastructure gets complex with every new deployment. The code helps you to manage the infrastructure uniformly. But as the organization grows,...

What are Kubernetes Replicasets? – KubeLabs Glossary

How can you ensure that three Pod instances are always available and running at any point in time?...

Top 6 Docker Security Scanning Practices

When it comes to running containers and using Kubernetes, it’s important to make security just as much of a priority as...

Shipa Vs OpenShift: A Comparative Guide

With the advent of a popular PaaS like Red Hat OpenShift, developers are able to focus on delivering value via their...

Top 5 Docker Myths and Facts That You Should be Aware of

Today, every fast-growing business enterprise has to deploy new features of their app rapidly if they really want to survive...

How to control DJI Tello Mini-Drone using Python and Docker

If you want to take your drone programming skills to the next level, DJI Tello is the right product to buy....

5 Minutes to AI Data Pipeline

According to a recent Gartner report, “By the end of 2024, 75% of organizations will shift from piloting to operationalizing artificial...

Getting Started with Terraform in 5 Minutes

Terraform is an infrastructure as code (IaC) tool that allows you to build, change, and version infrastructure safely and efficiently. This includes low-level...

Top 5 Effective Discord Bots For Your Server in 2022

Discord is one of the fastest-growing voice, video and text communication services used by millions of people in the world. It...

What is Kubernetes Scheduler and why do you need it? – KubeLabs Glossary

If you are keen to understand why Kubernetes Pods are placed onto a particular cluster node, then you have come to...

Using dd command in Linux

In the world of Linux, there are many powerful and versatile tools at your disposal for various tasks. One such tool...

How to build and run a Python app in a container – Docker Python Tutorial

In May 2021, over 80,000 developers participated in Stack Overflow’s annual Developer Survey. Python traded places with SQL to become the third...

5 Best Redis Tutorials and Free Resources for all Levels

If you’re a developer looking out for Redis related resources, then you have finally arrived at the right place. With more...

Running Automated Tasks with a CronJob over Kubernetes running on Docker Desktop

Docker Desktop 4.1.1 was released last week. This latest version supports Kubernetes 1.21.5, which was made available recently by the Kubernetes...

How to setup GPS Module with Raspberry Pi and perform Google Map Geo-Location Tracking in a Real-Time

NEO-6M GPS Module with EPROM is a complete GPS module that uses the latest technology to give the best possible positioning...

How to Create a Perfect Resume for a Startup Company?

Creating a resume is the stepping stone and the biggest challenge you face as a fresh graduate. Many of them prefer entering...

Top Kubernetes Tools You Need for 2022 – Devtron

Thanks to Collabnix community members Abhinav Dubey, Ashutosh Kale and Vinodkumar Mandalapu for all the collaboration and contribution towards this blog post series. What’s the biggest benefit you’ve...

Top Kubernetes Tools You Need for 2021 – K3d and Portainer

Thanks to Collabnix community members Abhinav Dubey, Ashutosh Kale and Vinodkumar Mandalapu for all the collaboration and contribution towards this blog post series. In the last...

Getting Started with Docker and AI workloads on NVIDIA Jetson AGX Xavier Developer Platform

If you’re an IoT Edge developer and looking out to build and deploy the production-grade end-to-end AI robotics applications, then check...

Hack into the DJI Robomaster S1 Battle Bot in 5 Minutes

When I saw the RoboMaster S1 for the first time, I was completely stoked. I was impressed by its sturdy look....

How Much Does it Cost to Hire a Software Developer?

This is a guest post contributed by Collabnix Community member Anastasia Stefanuk, a Professional writer, journalist and editor. Hiring a software...

Building a Real-Time Crowd Face Mask Detection System using Docker on NVIDIA Jetson Nano

Did you know? Around 94% of AI adopters are using or plan to use containers within a year. Containers are...

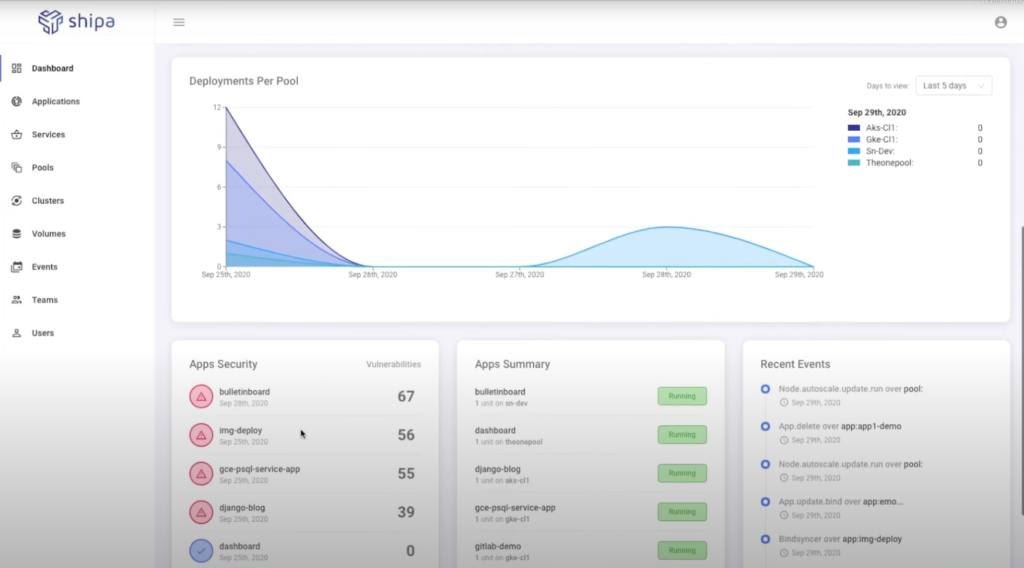

Getting Started with Shipa

Are you frustrated with how much time it takes to create, deploy and manage an application on Kubernetes? Wouldn’t it be...

How I visualized Time Series Data directly over IoT Edge device using Docker & Grafana

Application developers look to Redis and RedisTimeSeries to work with real-time internet of things (IoT) sensor data. RedisTimeseries is a Redis...

A First Look at Dev Environments Feature under Docker Desktop 3.5.0

Starting with Docker Desktop 3.5.0, Docker introduced the Dev Environments feature for the first time. The Dev Environments feature is the foundation...

How to assemble DJI Robomaster S1 for the first time?

The RoboMaster S1 is an educational robot that provides users with an in-depth understanding of science, math, physics, programming, and more...

Building Your First Jetson Container

The NVIDIA Jetson Nano 2GB Developer Kit is the ideal platform for teaching, learning, and developing AI and robotics applications. It...

What You Should Expect From Collabnix Joint Meetup with JFrog & Docker Bangalore Event?

This June 19th, 2021, the Collabnix community is coming together with JFrog & Docker Bangalore Meetup Group to conduct a meetup...

Kubectl for Docker Beginners

Kubectl is a command-line interface for running commands against Kubernetes clusters. kubectl looks for a file named config in the $HOME/.kube directory. You can specify...

Running RedisInsight Docker container in a rootless mode

On a typical installation, the Docker daemon manages all the containers. The Docker daemon controls every aspect of the container lifecycle....

Docker Compose now shipped with Docker CLI by default

Last year, Dockercon attracted 78,000 registrants and 21,000 conversations across 193 countries. This year, it was an even bigger event, attracting...

Delivering Container-based Apps to IoT Edge devices | Dockercon 2021

Did you know? Dockercon 2021 was attended by 80,000 participants on the first day. It was an amazing experience hosting “Docker...

What are Kubernetes Pods? | KubeLabs Glossary

Kubernetes (commonly referred to as K8s) is an orchestration engine for container technologies such as Docker and rkt that is taking...

Running a Web Browser in a Docker container

Are you still looking out for a solution that allows you to open multiple web browsers in Docker containers at the...

Running Minecraft in Rootless Mode under Docker 20.10.6

Rootless mode was introduced in Docker Engine v19.03 as an experimental feature for the first time. Rootless mode graduated from experimental...

Running RedisInsight using Docker Compose

RedisInsight is an intuitive and efficient GUI for Redis, allowing you to interact with your databases and manage your data—with built-in...

Getting Started with BME680 Sensor on NVIDIA Jetson Nano

The BME680 is a digital 4-in-1 sensor with gas, humidity, pressure, and temperature measurement based on proven sensing principles. The state-of-the-art...

How to run NodeJS Application inside Docker container on Raspberry Pi

This is the complete guide starting from all the required installation to actual dockerizing and running of a node.js application. But...

OSCONF 2021: Save the date

OSCONF 2021 is just around the corner and the free registration is still open for all DevOps enthusiasts who want to...

A Closer Look at AI Data Pipeline

According to a recent Gartner report, “By the end of 2024, 75% of organizations will shift from piloting to operationalizing artificial...

How I built the first ARM-based Docker Image on Pinebook using buildx tool?

The Pinebook Pro is a Linux and *BSD ARM laptop from PINE64. It is built to be a compelling alternative to...

Portainer Vs Rancher

According to Gartner “By 2024, low-code application development will be responsible for more than 65% of application development activity.” Low code...

Getting Started with NVIDIA Jetson Nano From Scratch

The NVIDIA Jetson Nano 2GB Developer Kit is the ideal platform for teaching, learning, and developing AI and robotics applications. It...

Running Redis on Multi-Node Kubernetes Cluster in 5 Minutes

Redis is a very popular open-source project with more than 47,200 GitHub stars, 18,700 forks, and 440+ contributors. Stack Overflow’s annual...

2 Minutes to “Nuke” Your AWS Cloud Resources

Are you seriously looking out for a tool that can save your AWS bills while being a FREE tier user? If...

A New DockerHub CLI Tool under Docker Desktop 3.0.0

Docker Desktop is the preferred choice for millions of developers building containerized applications. With the latest Docker Desktop Community 3.0.0 Release, a new...

Running RedisAI on NVIDIA Jetson Nano for the first time

The hardest part of AI is not artificial intelligence itself, but dealing with AI data. The accelerated growth of data captured...

A First Look at PineBook Pro – A 14” ARM Linux Laptop For Just $200

If you’re a FOSS enthusiast and looking out for a powerful little ARM laptop, PineBook Pro is what you need. The...

Introducing 2GB NVIDIA Jetson Nano: An Affordable Yet Powerful $59 AI Computer

Today at GPU Technology Conference (GTC) 2020, NVIDIA announced the new 2GB NVIDIA Jetson Nano for the first time. Last year, during...

Running Docker Compose on NVIDIA Jetson Nano in 5 Minutes

Starting with v4.2.1, NVIDIA JetPack includes a beta version of NVIDIA Container Runtime with Docker integration for the Jetson platform. This...

5 Minutes to Kubernetes Architecture

Kubernetes (a.k.a K8s) is an open-source container-orchestration system which manages the containerised applications and takes care of the automated deployment, storage,...

5 Reasons why you should attend ARM Dev Summit 2020

With 100+ sessions, 200+ speakers and tons of sponsors, Arm DevSummit is going to kick off next month. It...

Interview with Docker Captain Ajeet Singh Raina

This article was originally published in the leading Open Source India magazine. Ajeet Singh Raina’s journey as a community leader began...

Running Minecraft Server on NVIDIA Jetson Nano using Docker

With over 126 million monthly users, 200 million games sold & 40 million MAU, Minecraft still remains one of the biggest...

A First Look at Portainer 2.0 CE – Now with Kubernetes Support

Irrespective of the immaturity of the container ecosystem and lack of best practices, the adoption of Kubernetes is massively growing for...

Deploy your AWS EKS cluster with Terraform in 5 Minutes

Amazon Elastic Kubernetes Service (a.k.a Amazon EKS) is a fully managed service that helps make it easier to run Kubernetes on...

From 1 to 9000: A Close Look at Collabnix Slack Journey

“Individually We Specialise, Together We Transform” Slack has grown to be an excellent tool for communities- both large and small, open...

Getting Started with Jenkins X in 5 Minutes

Jenkins works perfectly well as a stand-alone open source tool but with the shift to Cloud native and Kubernetes, it invites...

Jenkins X Cloud Native CI/CD with TestProject

Building microservices isn’t merely breaking up a monolithic software application into a collection of smaller services. When shifting to microservices, there...

The Rise of Shipa – A Continuous Operation Platform

Cloud native microservices have undergone an exciting architectural evolution. 4 years back, the industry was busy talking about the rise of...

Monitoring Multi-Node K3s Cluster running on IoT using Datadog – Part 1

The rapid adoption of cloud-based solutions in the IT industry is acting as the key driver for the growth of the...

The Ultimate Docker Tutorial for Automation Testing

If you’re looking out for a $0 cloud-based, SaaS test automation development framework designed for your agile team, TestProject is the right...

How to migrate AWS ElastiCache data to Redis with Zero downtime

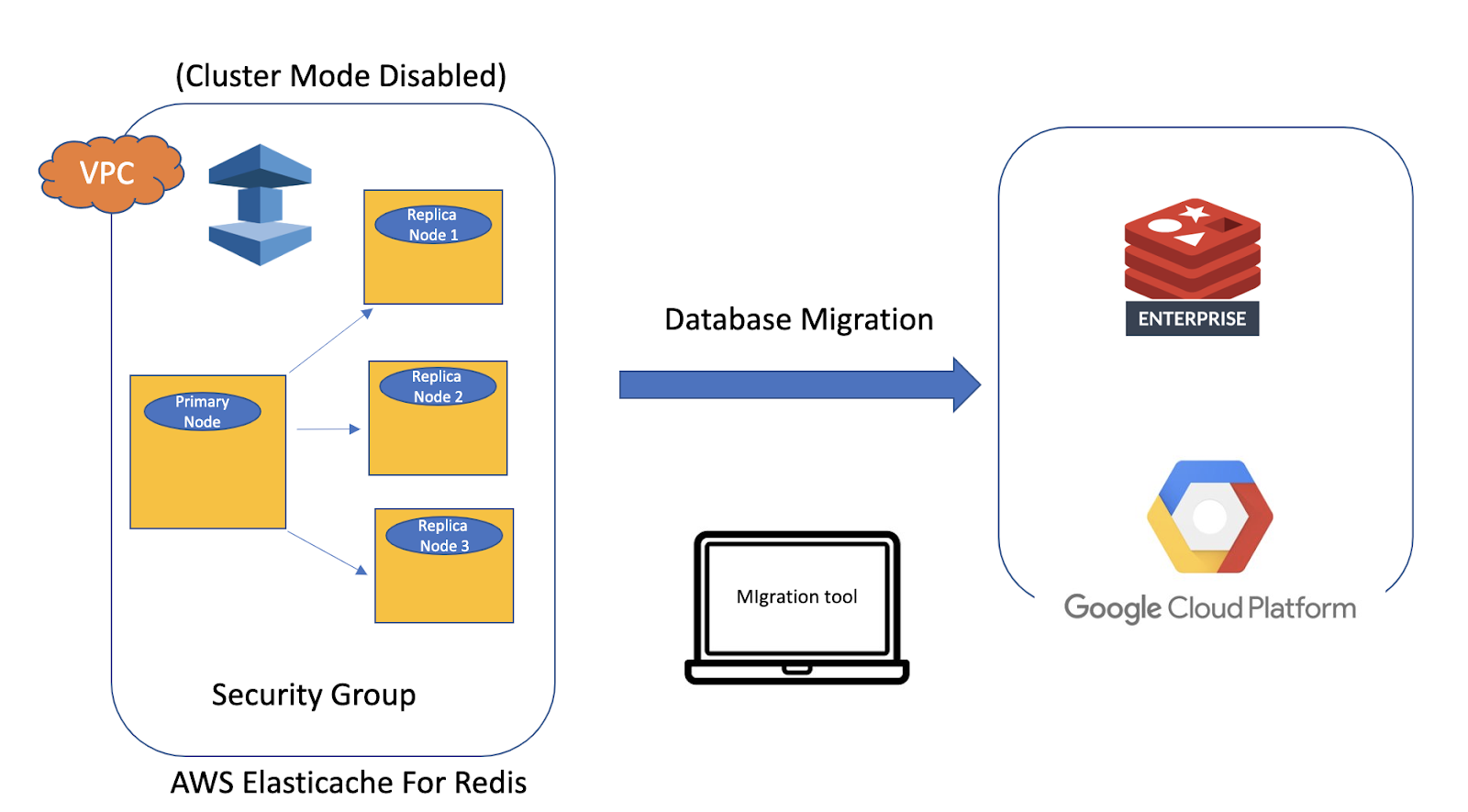

Are you looking out for a tool which can help you migrate Elasticache data to Redis Open Source or Redis Enterprise...

Building Your First Certified Kubernetes Cluster On-Premises, Part 2: iSCSI Support

In my first post, I discussed how to build your first certified on-premises Kubernetes cluster using Docker Enterprise 3.0. In this...