In industries like healthcare, legal, and finance, real-time voice transcription has become critical. The demand is not only for transcription speed but also for impeccable accuracy. Misinterpretations or delays can lead to costly errors. To navigate this complex landscape, it’s important to choose the right transcription model, carefully balancing accuracy with real-time processing requirements.

From healthcare to finance, accurate speech-to-text technology can significantly enhance efficiency and decision-making. Let’s dive into the world of real-time voice transcription models and explore how to balance accuracy with processing requirements.

Choosing the Right Model for Real-Time Transcription

When it comes to real-time voice transcription, especially in industries where accuracy is crucial, deep learning-based models often reign supreme. Among these, transformer-based architectures have shown remarkable promise. Some of the big players in the industry are listed below.

- Google Cloud Speech-to-Text: Well-known for its accuracy and language support.

- Whisper by OpenAI: Whisper is a cutting-edge model, known for its accuracy and ability to handle noise and accents.

- Amazon Transcribe: A powerful model with real-time transcription capabilities and easy integration with AWS services.

- AssemblyAI: Boasts near-human-level accuracy, which the company attributes to extensive training on diverse datasets. Its recent models, such as Conformer-2, are designed to handle noisy environments and complex speech patterns, making them well suited to real-time applications.

- Speechly: Offers on-device transcription, which enhances privacy and reduces latency because audio does not have to leave the device. With a reported accuracy rate exceeding 95%, Speechly is particularly suited for applications requiring immediate feedback.
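Accuracy figures like the ones above are usually derived from word error rate (WER): the number of word substitutions, insertions, and deletions needed to turn the model's output into a reference transcript, divided by the number of reference words. Here's a minimal sketch you could use to compare candidates on your own recordings. The function name and the sample sentences are illustrative assumptions, and real evaluations normally normalize punctuation and numbers first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# Illustrative transcripts: one wrong word out of eight
reference = "the patient was prescribed ten milligrams of atorvastatin"
hypothesis = "the patient was prescribed two milligrams of atorvastatin"
print(f"WER: {word_error_rate(reference, hypothesis):.3f}")  # WER: 0.125
```

A single wrong word gives a WER of only 0.125 here, yet it changes the dose. In high-stakes settings it's worth looking at which words are wrong, not just how many.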

For industries where precision is critical, I’d recommend Whisper, which strikes a great balance between processing speed and transcription accuracy, especially in noisy environments. Its ability to understand different accents and deliver high-quality transcription makes it an excellent choice.

Here’s a basic code snippet using Whisper in Python to process voice transcription:

    import whisper

    # Load the Whisper model
    model = whisper.load_model("base")

    # Transcribe an audio file
    result = model.transcribe("audio_file.wav")

    # Output the transcription
    print(result["text"])

Whisper itself is a transformer, and it helps to see how this family of models operates. Transformer-XL, developed by researchers at Carnegie Mellon University and Google Brain, is a well-known example, though it is a language model for long text sequences rather than a speech recognizer. To show the encode, generate, decode loop that transformer models share, here’s a simple example that loads a pre-trained T5 model using PyTorch and Hugging Face Transformers:

    import torch
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    # Load pre-trained model and tokenizer. T5 is an encoder-decoder model,
    # so it needs the Seq2SeqLM class rather than CausalLM.
    model_name = "t5-base"
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

    # Function to encode text
    def encode_text(text):
        encoding = tokenizer(
            text,
            max_length=512,
            padding="max_length",
            truncation=True,
            return_attention_mask=True,
            return_tensors="pt",
        )
        return encoding["input_ids"], encoding["attention_mask"]

    # Function to decode output
    def decode_output(output):
        # generate() returns a batch of sequences; decode the first one
        decoded = tokenizer.decode(output[0], skip_special_tokens=True)
        return decoded.strip()

    # Example usage
    text_to_transcribe = "Hello, how are you doing today?"
    input_ids, attention_mask = encode_text(text_to_transcribe)

    with torch.no_grad():
        output = model.generate(input_ids=input_ids, attention_mask=attention_mask, max_length=100)

    transcription = decode_output(output)
    print(f"Transcription: {transcription}")

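This code snippet demonstrates how to use a pre-trained T5 model for text generation. T5 takes text as input, not audio, so this isn’t a speech recognition system, but it gives you an idea of how transformer models encode an input, generate output tokens, and decode them back to text. Speech models such as Whisper follow the same pattern with audio features as the input.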

To illustrate how you might implement a basic transcription service using AssemblyAI, consider the following Python code snippet:

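    import time
    import requests

    API_KEY = 'your_api_key_here'
    AUDIO_URL = 'url_to_your_audio_file'
    TRANSCRIPT_ENDPOINT = 'https://api.assemblyai.com/v2/transcript'

    def transcribe_audio(audio_url):
        headers = {'authorization': API_KEY}
        # Submit the job. /v2/transcript accepts a publicly reachable audio URL;
        # /v2/upload is only for uploading raw audio bytes.
        response = requests.post(TRANSCRIPT_ENDPOINT, headers=headers, json={'audio_url': audio_url})
        response.raise_for_status()
        transcript_id = response.json()['id']

        # Poll until the job completes or fails
        while True:
            result = requests.get(f'{TRANSCRIPT_ENDPOINT}/{transcript_id}', headers=headers).json()
            if result['status'] in ('completed', 'error'):
                return result
            time.sleep(3)

    transcription_result = transcribe_audio(AUDIO_URL)
    print(transcription_result.get('text') or transcription_result.get('error'))

This code snippet demonstrates how to submit audio to AssemblyAI’s API for transcription and wait for the result. Replace your_api_key_here with your actual API key and url_to_your_audio_file with the URL of the audio you want to transcribe. Note that this is a submit-and-wait workflow for recorded audio; AssemblyAI offers live streaming transcription through a separate real-time API.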

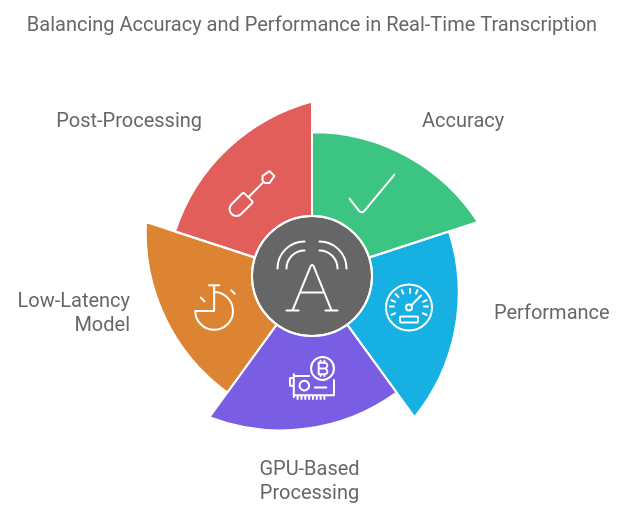

Balancing Accuracy with Performance

While accuracy is paramount, real-time systems must handle transcriptions fast enough to keep up with live audio feeds. Whisper, while highly accurate, can be resource-intensive, making the choice of hardware critical. For real-time systems, GPU-based processing is often recommended so that a larger, more accurate model can still keep pace with the incoming audio.
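One way to make "fast enough" measurable is the real-time factor (RTF): processing time divided by audio duration. An RTF below 1.0 means the model finishes before the next second of audio arrives. Here's a minimal sketch of measuring it; `fake_transcribe` is a placeholder I've made up so the example runs without a model, and you would pass in your real transcription call instead.

```python
import time
from typing import Callable


def real_time_factor(transcribe: Callable[[str], str], audio_path: str,
                     audio_seconds: float) -> float:
    """Return processing time divided by audio duration for one file."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    start = time.perf_counter()
    transcribe(audio_path)
    elapsed = time.perf_counter() - start
    return elapsed / audio_seconds


def fake_transcribe(path: str) -> str:
    # Placeholder standing in for a real call such as model.transcribe(path)
    time.sleep(0.05)
    return "placeholder transcript"


rtf = real_time_factor(fake_transcribe, "audio_file.wav", audio_seconds=1.0)
verdict = "keeps up with live audio" if rtf < 1.0 else "falls behind live audio"
print(f"RTF = {rtf:.2f} ({verdict})")
```

Measure this on the hardware you plan to deploy on, and on audio that resembles your real traffic, since both change the result considerably.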

A potential strategy to balance real-time processing with accuracy is to use a hybrid model approach:

- Low-latency model for live transcription: Use a faster model (like Google’s real-time Speech-to-Text) to capture initial transcriptions quickly.

- Post-processing for accuracy: After the real-time transcription, run Whisper in the background to refine the results where precision matters most.

- Optimization Techniques: Implement techniques like knowledge distillation or pruning to reduce model size while maintaining accuracy.

- Hardware: Consider upgrading your infrastructure to support faster processing. GPUs can significantly boost performance for neural network operations.

- Inference Speed: Look for models optimized for inference speed, such as quantized versions or models specifically designed for low-latency processing.

- Model Size: Larger models generally offer better performance but come with increased computational requirements. You’ll need to strike a balance between model size and available resources.
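To make the first two points of the hybrid approach concrete, here's a minimal sketch of the control flow: each audio chunk gets a quick draft right away, and a slower model replaces that draft in the background. Both model functions are hypothetical stand-ins that return fixed strings, so you would swap in your real low-latency service and your Whisper call. It uses `asyncio.to_thread`, which requires Python 3.9 or later.

```python
import asyncio
from typing import List, Tuple

Event = Tuple[int, str, bool]  # (chunk id, text, is_final)


def fast_model(audio: bytes) -> str:
    # Hypothetical stand-in for a low-latency recognizer
    return "patient reports chest pane"


def accurate_model(audio: bytes) -> str:
    # Hypothetical stand-in for a slower, more accurate model such as Whisper
    return "patient reports chest pain"


async def transcribe_chunk(chunk_id: int, audio: bytes, events: List[Event]) -> None:
    # Publish the draft first so the user sees text right away
    draft = await asyncio.to_thread(fast_model, audio)
    events.append((chunk_id, draft, False))
    # Replace the draft once the slower model finishes
    refined = await asyncio.to_thread(accurate_model, audio)
    events.append((chunk_id, refined, True))


async def main() -> List[Event]:
    events: List[Event] = []
    chunks = [b"chunk-0-audio", b"chunk-1-audio"]
    await asyncio.gather(*(transcribe_chunk(i, audio, events)
                           for i, audio in enumerate(chunks)))
    return events


events = asyncio.run(main())
for chunk_id, text, is_final in events:
    print(chunk_id, "final" if is_final else "draft", text)
```

The order of events across chunks can vary from run to run, but within a chunk the draft always arrives before the final text. Marking each update as draft or final lets the user interface show which text may still change.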

Here’s a simple example of how you might optimize a model for faster inference using PyTorch dynamic quantization:

    import torch
    import torch.nn as nn

    # Small stand-in network for illustration; replace with your own trained model
    class BaseModel(nn.Module):
        def __init__(self):
            super().__init__()
            self.fc1 = nn.Linear(80, 256)
            self.fc2 = nn.Linear(256, 32)

        def forward(self, x):
            return self.fc2(torch.relu(self.fc1(x)))

    base_model = BaseModel().eval()

    # Dynamic quantization stores Linear weights as int8 and quantizes
    # activations on the fly. It does not apply to Conv2d layers, and it
    # needs no QuantStub/DeQuantStub (those belong to static quantization).
    optimized_model = torch.quantization.quantize_dynamic(
        base_model, {nn.Linear}, dtype=torch.qint8
    )

    # Usage
    with torch.no_grad():
        output = optimized_model(torch.randn(1, 80))
    print(output.shape)

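Here’s an example of capturing speech for live transcription with the SpeechRecognition library, whose recognize_google() method sends the audio to Google’s Web Speech API. Note that listen() records a single phrase and returns when the speaker pauses, so continuous transcription means calling it in a loop:

    import speech_recognition as sr

    # Initialize recognizer
    recognizer = sr.Recognizer()

    # Capture one phrase from the microphone
    with sr.Microphone() as source:
        recognizer.adjust_for_ambient_noise(source)  # calibrate to background noise
        print("Listening...")
        audio = recognizer.listen(source)

    # Recognize and print the transcription
    try:
        print("Transcription: " + recognizer.recognize_google(audio))
    except sr.UnknownValueError:
        print("Could not understand audio")
    except sr.RequestError as e:
        print(f"Could not reach the recognition service: {e}")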

By combining real-time processing with Whisper’s post-processing, you can achieve both speed and accuracy without compromising user experience.

Challenges in Integration

Integrating a real-time transcription system with existing infrastructure comes with its own set of challenges:

- Latency and Network Issues: Real-time systems rely heavily on low-latency data transmission. In industries where every second counts, high latency can cause transcription delays that affect the overall workflow, and maintaining real-time performance under network delays or heavy server load can be tricky. Edge computing or cloud-based GPUs can help mitigate these issues.

- Scalability: When dealing with large-scale operations, especially in call centers or healthcare, the system must handle multiple concurrent streams. Implementing asynchronous processing in Python, using libraries like asyncio or Celery, can help manage numerous transcription requests simultaneously. As demand grows, you may need to scale your system horizontally or vertically, which can be complex depending on your chosen deployment strategy.

- API Design: Ensuring seamless integration often requires creating custom APIs that fit your organization’s architecture.

- Data Security: Transcribing sensitive information requires robust security measures to protect privacy.

- Error Handling: Develop robust error handling mechanisms to deal with unexpected inputs or system failures.

- Compatibility Issues: Ensuring that the chosen transcription model integrates seamlessly with current systems (like CRM or customer service platforms) can be complex. It may require custom development or middleware solutions.

- Training Data Requirements: Many models need extensive training data to perform optimally in specific environments. Gathering this data and ensuring it is representative of actual use cases can be resource-intensive.

- User Adoption: Employees may resist new technologies due to fear of change or lack of familiarity. Providing adequate training and demonstrating the benefits of accurate transcription can facilitate smoother transitions.

- Ongoing Maintenance: Continuous improvement is necessary as language evolves and new terminologies emerge. Regular updates and retraining of models will be essential for maintaining high accuracy levels over time.
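For the network and error-handling points above, a common pattern is to retry failed requests with exponential backoff, so a brief outage doesn't drop a transcription and a struggling server isn't flooded with retries. Here's a minimal sketch using only the standard library. The function names, the default delays, and the simulated failure are all assumptions for illustration, so adapt the exception types to whatever your HTTP client raises.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(request: Callable[[], T], max_attempts: int = 4,
                    base_delay: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `request`, retrying on connection errors and timeouts."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return request()
        except (ConnectionError, TimeoutError):
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # The delay doubles on each attempt; random jitter keeps many
            # clients from retrying at the same instant
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError("unreachable")


attempts = {"count": 0}


def flaky_transcription_request() -> str:
    # Hypothetical stand-in for an HTTP call to a transcription service
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("simulated network failure")
    return "transcript ready"


result = call_with_retry(flaky_transcription_request, base_delay=0.01)
print(result, "after", attempts["count"], "attempts")  # transcript ready after 3 attempts
```

For live audio, keep the total retry budget short. A transcript that arrives ten seconds late is often no better than one that never arrives, so it can make sense to fall back to a local model instead.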

Example of using asyncio to handle multiple audio streams:

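    import asyncio
    from concurrent.futures import ThreadPoolExecutor

    import whisper

    model = whisper.load_model("base")

    # model.transcribe() is a blocking call, so run it in a worker thread to
    # keep the event loop free. A single worker means calls on the shared
    # model run one at a time; load one model per worker to go parallel.
    executor = ThreadPoolExecutor(max_workers=1)

    async def transcribe_audio(file):
        loop = asyncio.get_running_loop()
        result = await loop.run_in_executor(executor, model.transcribe, file)
        print(f"Transcription: {result['text']}")

    async def main():
        tasks = [
            transcribe_audio("audio1.wav"),
            transcribe_audio("audio2.wav"),
        ]
        await asyncio.gather(*tasks)

    asyncio.run(main())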

- Handling Accents and Noisy Environments: One of the common issues in voice transcription systems is the difficulty in handling diverse accents and background noise. Whisper excels in these scenarios, but pre-processing the audio before transcription, for example with the noise suppression built into WebRTC’s audio processing, can improve the system’s overall performance.

- Data Security and Privacy: In high-stakes industries, ensuring the privacy of sensitive data is essential. Encrypting both data at rest and in transit, alongside complying with regulatory standards like HIPAA or GDPR, is critical. This can be done by configuring secure API endpoints and using secure cloud services like AWS with VPCs.
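As a small illustration of the audio pre-processing mentioned under accents and noisy environments, here's a sketch of an energy gate that drops near-silent frames before they reach the transcription model. To be clear, this is not WebRTC's noise suppression, which is far more sophisticated. It's a much simpler stand-in, and the frame size, threshold, and synthetic signal are assumed values chosen for the demo.

```python
import math
from typing import List, Sequence


def rms(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one frame of samples in [-1.0, 1.0]."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def drop_quiet_frames(samples: Sequence[float], frame_size: int = 160,
                      threshold: float = 0.02) -> List[float]:
    """Keep only the frames whose RMS energy reaches `threshold`."""
    kept: List[float] = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        if frame and rms(frame) >= threshold:
            kept.extend(frame)
    return kept


# Synthetic signal at 16 kHz: 10 ms of near-silence, then 10 ms of a 440 Hz tone
quiet = [0.001] * 160
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(160)]
cleaned = drop_quiet_frames(quiet + tone)
print(len(quiet + tone), "->", len(cleaned), "samples")  # 320 -> 160 samples
```

Skipping silent frames also reduces how much audio the model has to process, which helps with the latency concerns discussed earlier. The threshold needs tuning per microphone and environment, because a value that is too high will clip quiet speech.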

Conclusion

Choosing the right model for real-time transcription in critical industries is a delicate balance between speed, accuracy, and performance. Whisper offers excellent accuracy, but for real-time applications, a hybrid approach can help maintain responsiveness while post-processing ensures transcription quality. Implementing asynchronous processes, handling network latency, and ensuring privacy are all essential for a successful deployment.

By thoughtfully integrating these components, industries can leverage real-time voice transcription technology effectively without compromising on the performance or precision required in high-stakes environments. Implementing a state-of-the-art voice transcription system for critical industries is a complex task that requires careful consideration of performance, real-time requirements, and system integration. By leveraging advanced transformer-based models and applying optimization techniques, you can achieve high accuracy while balancing processing needs.

Remember, the journey to perfect transcription is ongoing. Stay updated with the latest advancements in AI and NLP, and continuously refine your system based on feedback and evolving industry standards.

As you embark on this exciting project, keep in mind that the goal is not just to transcribe words, but to unlock new possibilities in your industry. Happy coding!
