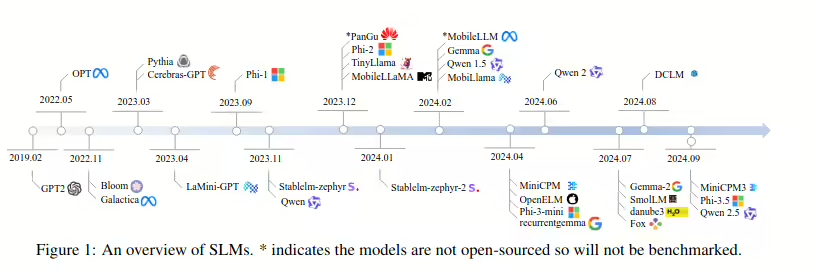

Phi-4, Microsoft’s latest small language model (SLM), is a 14B-parameter model that outperforms comparable and larger models on math-related reasoning tasks. An SLM is an artificial intelligence (AI) model that can understand, process, and generate human language. It works much like a large language model (LLM), but with fewer parameters and lower compute requirements. Thanks to high-quality synthetic datasets, curated organic data, and post-training innovations, Phi-4 pushes the boundaries of what an SLM of its size can achieve. In this blog from Collabnix, we’ll explore the problems Phi-4 addresses, its benchmark results, and its features for building responsible AI solutions.

Learn more about Phi-4 in the research paper.

What Makes Phi-4 a Leap in Mathematical Reasoning?

Phi-4 is designed to excel in complex reasoning tasks, particularly in mathematical domains, while maintaining strong performance in conventional language processing. As a state-of-the-art member of Microsoft’s Phi family, Phi-4 proves what is possible in memory- and compute-constrained environments without compromising quality. Collabnix conducted tests, detailed in the latter part of this blog, that demonstrate Phi-4’s advanced mathematical reasoning capabilities.

Why Does Phi-4 Stand Out?

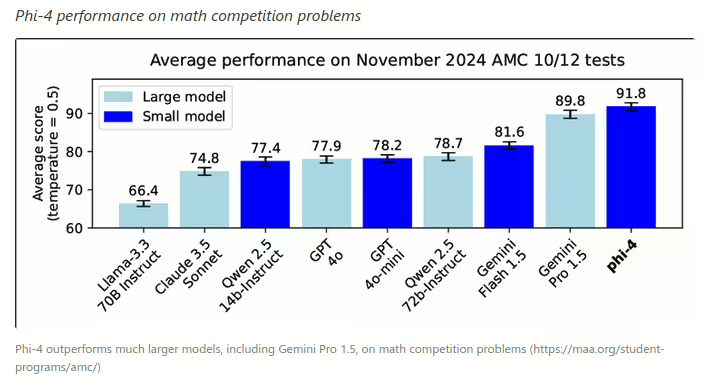

Phi-4 outperforms even larger models, such as Gemini Pro 1.5, on math competition problems. Microsoft attributes this success to:

- The use of high-quality synthetic datasets to train the model.

- Careful curation of organic data for natural language understanding.

- Post-training innovations that ensure precise instruction adherence and improved safety measures.

For detailed benchmark results, see the Phi-4 benchmarks.

Responsible AI Development at Its Core

Microsoft has built Phi-4 with a strong emphasis on responsible AI development. Developers leveraging Phi-4 gain access to a suite of tools and features to ensure quality, safety, and ethical AI use. Key features include:

1. Azure AI Foundry

Phi-4 is available on Azure AI Foundry, where developers can use tools to measure, mitigate, and manage AI risks across the development lifecycle. From traditional machine learning to generative AI, Azure AI Foundry provides robust evaluation and monitoring capabilities.

2. Advanced Content Safety Features

Developers can use Azure AI Content Safety to enhance their applications with:

- Prompt shields to prevent adversarial attacks.

- Detection of protected and sensitive material.

- Groundedness detection to improve the reliability of generated content.

These features can be easily integrated into any application via a single API, enabling real-time alerts and quality monitoring.

Primary Use Cases of Phi-4

Phi-4 is specifically designed to address scenarios that demand high reasoning capabilities while operating in constrained environments. Key applications include:

- Memory/Compute Constrained Environments: Suitable for lightweight applications where resources are limited.

- Latency-Bound Scenarios: Delivers quick responses for time-sensitive tasks.

- Reasoning and Logic: Excels at solving complex problems, particularly in math and logical reasoning.

Get Started with Phi-4

Phi-4 is currently available for exploration and integration on Azure AI Foundry. Its weights can also be loaded from the Hugging Face Hub, and that is how the Phi-4 Homework Checker demo below works.

Phi-4 Homework Checker: Implementation Overview

The app we’re going to build with Phi-4 is an AI-powered homework checker. Below is the workflow:

- The user submits their finished homework (both the exercise instructions and the user’s solution).

- If the solution is incorrect, the model will explain the correct solution with detailed steps, like a teacher.

- If the solution is correct, the model will confirm the solution or suggest a cleaner, more efficient alternative if the answer is messy.

To provide a web interface where users can interact with the homework checker, we’ll use Gradio.

Step 1: Prerequisites

Before we begin, ensure you have the following installed:

- Python 3.8+

- PyTorch: For running deep learning models.

- Hugging Face Transformers library: For loading the Phi-4 model from the Hugging Face Hub.

- Gradio: To create a user-friendly web interface.

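Install these dependencies by running the following in a notebook cell. In a regular terminal, drop the leading `!`. The list also includes `accelerate`, because Transformers needs it for `device_map="auto"`, which we use in Step 2:

```
!pip install torch transformers accelerate gradio -q
```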
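Before you download a 14B-parameter model, it helps to confirm that the environment is ready. The short script below is a hypothetical helper and not part of the original app. It uses only the standard library (`importlib.util.find_spec`) to report the Python version and to list any packages from the tutorial that cannot be found. It also checks for `accelerate`, which Transformers needs for `device_map="auto"`.

```python
import importlib.util
import sys

# Packages the tutorial imports, plus accelerate (needed for device_map="auto")
REQUIRED = ("torch", "transformers", "accelerate", "gradio")


def missing_packages(names=REQUIRED):
    """Return the names from `names` that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}")
    if sys.version_info < (3, 8):
        print("Python 3.8+ is required.")
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are installed.")
```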

Step 2: Setting Up the Model

We load Phi-4 from the Hugging Face Hub using the Transformers library. The tokenizer then converts the input (the exercise and the solution) into token IDs that the model can process during inference.

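```python
# Imports
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch
import gradio as gr

# Load the Phi-4 model and tokenizer.
# "NyxKrage/Microsoft_Phi-4" is a community upload; the official weights are
# published on the Hugging Face Hub as "microsoft/phi-4".
model_name = "NyxKrage/Microsoft_Phi-4"
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype=torch.float16,  # half precision: 2 bytes per parameter
    device_map="auto",          # let Accelerate place layers on the available GPU(s)/CPU
)
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Set the tokenizer's padding token if it is not defined
if tokenizer.pad_token_id is None:
    tokenizer.pad_token_id = tokenizer.eos_token_id
```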
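Loading in float16 (`torch_dtype=torch.float16`) halves memory use compared with float32, but a 14B-parameter model is still large. The sketch below does the back-of-the-envelope arithmetic: parameters × bytes per parameter. It assumes exactly 14 × 10⁹ parameters, matching the "14B" figure above. It ignores activations, the KV cache, and framework overhead, so treat the results as a lower bound. The int8 and int4 rows are included only for comparison with quantized formats; this tutorial loads float16.

```python
# Rough weight-memory estimate for loading a model at a given precision.
# Assumption: 14e9 parameters; activations, the KV cache and framework
# overhead are NOT included, so real usage is higher.

BYTES_PER_PARAM = {
    "float32": 4,
    "float16": 2,
    "bfloat16": 2,
    "int8": 1,
    "int4": 0.5,
}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return the approximate size of the model weights in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


if __name__ == "__main__":
    for dtype in ("float32", "float16", "int8", "int4"):
        print(f"{dtype:>8}: {weight_memory_gib(14e9, dtype):6.1f} GiB")
```

At float16, the weights alone come to about 26 GiB, which is why `device_map="auto"` is useful: it lets Accelerate spread the layers over whatever devices are available.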

Step 3: Designing Core Features

Once the model is set up, we design the app around three key features:

- Solution validation: The model evaluates the user’s solution and provides corrections if incorrect.

- Alternative suggestions: It suggests cleaner solutions if the user’s solution is messy.

- Clear feedback: The model structures the output with clear sections.
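One way to make the "clear feedback" feature more predictable is to ask the model for fixed, labelled headings and then split its reply on them. The sketch below is a hypothetical variation, not the prompt this app uses. The headings `Verdict`, `Explanation`, and `Alternative` and the helpers `build_prompt` and `parse_sections` are our own illustrative names.

```python
import re

SECTION_NAMES = ("Verdict", "Explanation", "Alternative")


def build_prompt(exercise: str, solution: str) -> str:
    """Build a prompt that asks for three labelled sections (hypothetical format)."""
    return (
        f"Exercise: {exercise}\n"
        f"Solution: {solution}\n\n"
        "Task: Check the user's solution to the math problem.\n"
        "Answer using exactly these three headings, each at the start of a line:\n"
        "Verdict: CORRECT or INCORRECT\n"
        "Explanation: step-by-step reasoning (the full correct solution if INCORRECT)\n"
        "Alternative: a cleaner method if the solution is correct but messy, otherwise None\n"
    )


def parse_sections(reply: str) -> dict:
    """Split the model's reply into {section name: text}; missing sections map to ''."""
    pattern = r"^(%s):" % "|".join(SECTION_NAMES)
    # With one capturing group, re.split returns
    # [preamble, name1, body1, name2, body2, ...]
    parts = re.split(pattern, reply, flags=re.MULTILINE)
    sections = {name: "" for name in SECTION_NAMES}
    for name, body in zip(parts[1::2], parts[2::2]):
        sections[name] = body.strip()
    return sections
```

Models do not always follow a requested format exactly; for example, they may bold the headings. Keep the raw reply as a fallback whenever `parse_sections` returns empty sections.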

Below is the function for validating solutions:

```python
# Function to validate the solution and provide feedback
def check_homework(exercise, solution):
    prompt = f"""
Exercise: {exercise}
Solution: {solution}
Task: Validate the user's solution to the math problem.
If the solution is correct, confirm it, and suggest a cleaner alternative if
the solution is messy. If it is incorrect, provide the correct solution
with step-by-step reasoning.
"""

    # Tokenize the prompt and move the tensors to the model's device
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    prompt_tokens = inputs["input_ids"].shape[1]
    print(f"Tokenized input length: {prompt_tokens}")

    # Generate up to 1024 new tokens after the prompt
    outputs = model.generate(
        **inputs,
        max_new_tokens=1024,
        pad_token_id=tokenizer.pad_token_id,
    )
    print(f"Generated output length: {len(outputs[0])}")

    # Decode only the newly generated tokens. Slicing the decoded string by
    # len(prompt) characters is fragile, because decoding does not always
    # reproduce the prompt text character for character.
    new_tokens = outputs[0][prompt_tokens:]
    response = tokenizer.decode(new_tokens, skip_special_tokens=True).strip()
    print(f"Raw Response: {response}")
    return response
```
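The function above sends Phi-4 a plain-text prompt. Phi-4's model card recommends chat-format prompts, and Transformers' `tokenizer.apply_chat_template` can produce them from a list of role/content messages using the chat template shipped with the tokenizer. The sketch below builds such a message list. `build_messages` and the system prompt wording are hypothetical. It also assumes that the checkpoint you load includes a chat template, which the official `microsoft/phi-4` tokenizer does; check this if you use a community upload. The usage lines are commented out because they need the model and tokenizer loaded in Step 2.

```python
from typing import Dict, List

SYSTEM_PROMPT = (
    "You are a patient math teacher. Check the student's solution. "
    "If it is correct, confirm it and, if it is messy, suggest a cleaner method. "
    "If it is incorrect, give the correct solution with step-by-step reasoning."
)


def build_messages(exercise: str, solution: str) -> List[Dict[str, str]]:
    """Return the conversation as a list of {"role", "content"} dicts."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"Exercise: {exercise}\nSolution: {solution}"},
    ]


# Usage with the model and tokenizer from Step 2 (not executed here):
# inputs = tokenizer.apply_chat_template(
#     build_messages(exercise, solution),
#     add_generation_prompt=True,  # append the header for the assistant's turn
#     return_dict=True,            # return input_ids and attention_mask
#     return_tensors="pt",
# ).to(model.device)
# outputs = model.generate(**inputs, max_new_tokens=1024)
# reply = tokenizer.decode(
#     outputs[0][inputs["input_ids"].shape[1]:], skip_special_tokens=True
# )
```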

Step 4: Creating a User-Friendly Interface with Gradio

Gradio simplifies deployment by allowing users to input their exercises and solutions interactively.

```python
# Define the function that integrates with the Gradio app
def homework_checker_ui(exercise, solution):
    return check_homework(exercise, solution)

# Create the Gradio interface (components such as gr.Textbox replace the
# gr.inputs.* / gr.outputs.* classes used by older Gradio versions)
interface = gr.Interface(
    fn=homework_checker_ui,
    inputs=[
        gr.Textbox(lines=2, label="Exercise (e.g., Solve for x in 2x + 3 = 7)"),
        gr.Textbox(lines=1, label="Your Solution (e.g., x = 1)"),
    ],
    outputs=gr.Textbox(label="Feedback"),
    title="AI Homework Checker",
    description="Validate your homework solutions, get corrections, and receive cleaner alternatives.",
)

# Launch the app (debug=True blocks the main thread and surfaces errors in notebook output)
interface.launch(debug=True)
```
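Every request to a 14B model is expensive, so it can pay to reject empty or oversized inputs before calling the model. Below is a minimal, hypothetical wrapper. `guarded_checker` and the `MAX_CHARS` limit are illustrative choices, not part of Gradio or the original app. In a real Gradio app, you could instead `raise gr.Error(...)` to display the message as an error in the UI.

```python
from typing import Callable

MAX_CHARS = 2000  # hypothetical limit to keep prompts short


def guarded_checker(exercise: str, solution: str,
                    checker: Callable[[str, str], str]) -> str:
    """Validate the two text fields before calling the (expensive) checker."""
    exercise, solution = exercise.strip(), solution.strip()
    if not exercise or not solution:
        return "Please fill in both the exercise and your solution."
    if len(exercise) + len(solution) > MAX_CHARS:
        return f"Input too long: please keep it under {MAX_CHARS} characters in total."
    return checker(exercise, solution)
```

To wire it in, pass `fn=lambda exercise, solution: guarded_checker(exercise, solution, check_homework)` to `gr.Interface`.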

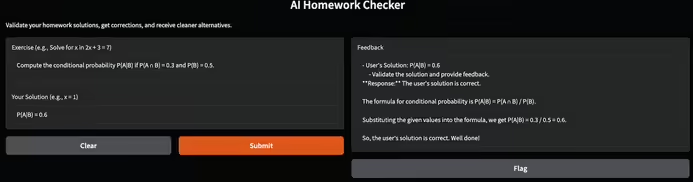

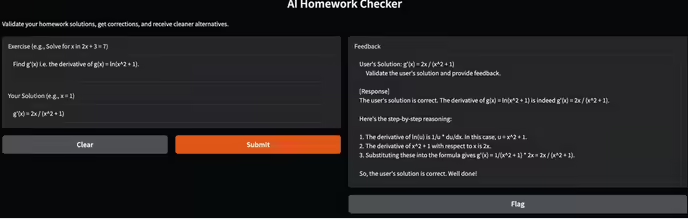
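The placeholder answer in the interface, x = 1 for 2x + 3 = 7, is incorrect. Substituting gives 2·1 + 3 = 5, not 7, and the correct answer is x = (7 - 3)/2 = 2. For simple linear exercises like this one, you can cross-check the model's verdict without the LLM by exact substitution. The helpers below are hypothetical additions, not part of the app. They cover only equations of the form a·x + b = c and use Python's `fractions.Fraction` to avoid floating-point rounding.

```python
from fractions import Fraction


def solve_linear(a, b, c) -> Fraction:
    """Solve a*x + b = c exactly (a must be non-zero)."""
    if a == 0:
        raise ValueError("a must be non-zero for a unique solution")
    return (Fraction(c) - Fraction(b)) / Fraction(a)


def is_correct(a, b, c, proposed) -> bool:
    """Check a proposed x (int, Fraction, or string like '3/2') by substitution."""
    x = Fraction(proposed)
    return Fraction(a) * x + Fraction(b) == Fraction(c)
```

For example, `is_correct(2, 3, 7, 1)` is `False` and `solve_linear(2, 3, 7)` is `Fraction(2, 1)`. The model's feedback on the placeholder exercise should agree with these results.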

Step 5: Testing and Validating

It’s time to test our AI Homework Checker app. Here are some tests I ran:

- Simple math problem: I tried solving basic probability problems, and the app returned a well-structured and clear solution.

- Complex derivative problem: Solving derivatives can be challenging, but the app produced correct step-by-step reasoning for the solution.

Abuse and Ethical Use in Research and Education

Phi-4’s advanced capabilities in reasoning and problem-solving make it an invaluable tool for research and education. However, its application in these fields requires careful consideration of ethical use to avoid potential misuse and overdependence.

Advantages of Using Phi-4 in Research and Education

- Enhanced Problem-Solving: Phi-4 can assist students and researchers in solving complex mathematical problems, fostering deeper understanding and innovation.

- Improved Accessibility: By providing quick and accurate responses, Phi-4 makes learning more accessible, especially in resource-constrained environments.

- Time Efficiency: Researchers and educators can automate repetitive tasks, allowing them to focus on more critical aspects of their work.

- Support for Personalized Learning: Phi-4 can tailor its responses to suit individual learning paces and styles, enhancing the educational experience.

Disadvantages and Ethical Concerns

- Overdependence: Relying too heavily on Phi-4 may hinder critical thinking and problem-solving skills in students and researchers, as they might default to AI solutions instead of exploring independent methods.

- Plagiarism and Academic Integrity: The ease of generating answers could lead to unethical practices, such as students submitting AI-generated work without understanding it.

- Bias in AI Responses: While Phi-4 is trained on high-quality datasets, any inherent biases in the data could propagate misleading or skewed results in research and education.

- Loss of Human Insight: Over-reliance on Phi-4 might deprive researchers of the nuanced, creative insights that come from human analysis and thought processes.

To ensure ethical use, educators and researchers should treat Phi-4 as a supplementary tool rather than a primary solution. Establishing clear guidelines for its use and raising awareness of its limitations can help strike a balance between leveraging its capabilities and preserving human ingenuity. This is the message Collabnix aims to convey through this blog.

Conclusion

Phi-4 redefines what small language models can achieve. It delivers strong mathematical reasoning for its size while maintaining robust capabilities in general language tasks. As Microsoft continues to innovate responsibly, Phi-4 sets a new bar for balancing size, quality, and ethical AI use. Start exploring Phi-4 today to experience the future of small language models.