Over the past year, Microsoft's work on AutoGen has underscored the remarkable capabilities of agentic AI and multi-agent systems. Microsoft is thrilled to unveil AutoGen v0.4, a major update shaped by invaluable feedback from its vibrant community of users and developers. This release is a comprehensive overhaul of the AutoGen library, designed to improve code quality, robustness, generality, and scalability in agent-driven workflows.

Building on a Strong Foundation

The initial launch of AutoGen drew significant attention within the agentic AI landscape. However, users ran into architectural constraints, an inefficient API made worse by rapid growth, and limited debugging and intervention tools. Their feedback stressed the need for better observability and control, more flexible multi-agent collaboration patterns, and reusable components. AutoGen v0.4 addresses these concerns with a new asynchronous, event-driven architecture.

Key Enhancements in AutoGen v0.4

This update transforms AutoGen into a more resilient and adaptable platform, accommodating a wider array of agentic use cases. The revamped framework introduces several cutting-edge features, inspired by contributions from both Microsoft insiders and the broader community:

- Asynchronous Messaging: Facilitates communication between agents using asynchronous messages, supporting both event-driven and request/response interaction models.

- Modular and Extensible Design: Allows users to easily tailor systems with pluggable components, including custom agents, tools, memory modules, and models. It also supports the creation of proactive and long-running agents through event-driven patterns.

- Enhanced Observability and Debugging: Incorporates built-in metric tracking, message tracing, and debugging tools to monitor and control agent interactions and workflows. Integration with OpenTelemetry ensures industry-standard observability.

- Scalability and Distributed Operation: Empowers users to design intricate, distributed agent networks that function seamlessly across organizational boundaries.

- Comprehensive Extensions: The extensions module augments the framework’s capabilities with advanced model clients, agents, multi-agent teams, and tools tailored for agentic workflows. Open-source developers can contribute by managing their own extensions.

- Cross-Language Compatibility: Enables interoperability between agents developed in different programming languages, currently supporting Python and .NET, with additional languages in the pipeline.

- Full Type Support: Enforces type checks at build time through interfaces, enhancing robustness and maintaining high code quality.
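The difference between the two interaction models named under Asynchronous Messaging can be sketched with nothing but asyncio. `MiniRuntime`, `send`, and `publish` below are hypothetical names chosen for illustration: they show the pattern (direct request/response versus broadcasting an event to subscribers) and are not AutoGen's runtime API, which lives in `autogen_core`.

```python
import asyncio
from collections import defaultdict
from typing import Awaitable, Callable, DefaultDict, Dict, List

# In this sketch an "agent" is just an async function that handles one message.
Handler = Callable[[str], Awaitable[str]]


class MiniRuntime:
    """Hypothetical in-process message router (NOT the autogen_core API)."""

    def __init__(self) -> None:
        self._agents: Dict[str, Handler] = {}
        self._subscribers: DefaultDict[str, List[str]] = defaultdict(list)

    def register(self, name: str, handler: Handler, topics: List[str]) -> None:
        self._agents[name] = handler
        for topic in topics:
            self._subscribers[topic].append(name)

    async def send(self, recipient: str, message: str) -> str:
        # Request/response: the caller awaits a reply from one specific agent.
        return await self._agents[recipient](message)

    async def publish(self, topic: str, message: str) -> List[str]:
        # Event-driven: every subscriber handles the event concurrently.
        pending = [self._agents[name](message) for name in self._subscribers[topic]]
        return list(await asyncio.gather(*pending))


async def echo(message: str) -> str:
    return f"echo: {message}"


async def shout(message: str) -> str:
    await asyncio.sleep(0.01)  # simulated slow work; other handlers keep running
    return message.upper()


async def main() -> None:
    runtime = MiniRuntime()
    runtime.register("echo", echo, topics=["news"])
    runtime.register("shout", shout, topics=["news"])
    print(await runtime.send("echo", "hi"))          # one reply from one agent
    print(await runtime.publish("news", "release"))  # replies from all subscribers


if __name__ == "__main__":
    asyncio.run(main())
```

Because `publish` gathers the handlers, the slow `shout` handler does not hold up `echo`, and results come back in subscription order.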

A Redesigned Framework for Enhanced Functionality

As depicted in Figure 1, the AutoGen framework now features a layered architecture with clearly defined roles across the framework, developer tools, and applications. The structure is divided into three primary layers:

- Core Layer: The essential building blocks for an event-driven agentic system.

- AgentChat Layer: A high-level, task-oriented API built upon the core, offering group chat, code execution, pre-built agents, and more. This layer closely resembles AutoGen v0.2, simplifying the migration process.

- Extensions Layer: Implements core interfaces and integrates third-party services, such as the Azure code executor and OpenAI model client.

Figure 1. The v0.4 update introduces a cohesive AutoGen ecosystem that includes the framework, developer tools, and applications. The framework’s layered architecture clearly defines each layer’s functionality. It supports both first-party and third-party applications and extensions.
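The benefit of this layering is that higher layers depend only on interfaces defined below them. The framework-free sketch below shows the idea with a `typing.Protocol`: a core-style interface, an extension-style implementation of it, and a task-oriented agent built on the interface alone. `ModelClient`, `EchoModelClient`, and `SimpleAssistant` are hypothetical stand-ins; in AutoGen the real counterparts live in the `autogen_core`, `autogen_ext`, and `autogen_agentchat` packages.

```python
import asyncio
from typing import List, Protocol


# "Core"-style layer: an abstract interface that higher layers depend on.
class ModelClient(Protocol):
    async def create(self, messages: List[str]) -> str: ...


# "Extensions"-style layer: a concrete implementation of the interface.
# A real extension would call a hosted model; this one only echoes.
class EchoModelClient:
    async def create(self, messages: List[str]) -> str:
        return f"model saw {len(messages)} message(s); last: {messages[-1]}"


# "AgentChat"-style layer: a task-oriented agent that only knows the interface,
# so any ModelClient implementation can be plugged in.
class SimpleAssistant:
    def __init__(self, name: str, model_client: ModelClient) -> None:
        self.name = name
        self._client = model_client
        self._history: List[str] = []

    async def run(self, task: str) -> str:
        self._history.append(task)
        reply = await self._client.create(self._history)
        self._history.append(reply)
        return reply


if __name__ == "__main__":
    assistant = SimpleAssistant("assistant", EchoModelClient())
    print(asyncio.run(assistant.run("Summarize AutoGen v0.4")))
```

Swapping in a different client requires no change to `SimpleAssistant`, which is the point of keeping implementations in a separate layer.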

Enhanced Developer Tools

AutoGen v0.4 not only upgrades the framework but also introduces enhanced programming tools and applications to support developers in building and experimenting with AutoGen:

- AutoGen Bench: Allows developers to benchmark their agents by measuring and comparing performance across various tasks and environments.

- AutoGen Studio: Rebuilt on the v0.4 AgentChat API, this low-code interface facilitates rapid prototyping of AI agents, offering new capabilities such as:

  - Real-Time Agent Updates: Monitor agent action streams in real time through asynchronous, event-driven messages.

  - Mid-Execution Control: Pause conversations, redirect agent actions, adjust team compositions, and seamlessly resume tasks.

  - Interactive Feedback via UI: Incorporate a UserProxyAgent to enable user input and guidance during team operations in real time.

  - Message Flow Visualization: Gain insights into agent communications with an intuitive visual interface that maps message paths and dependencies.

  - Drag-and-Drop Team Builder: Visually design agent teams by dragging components into place and configuring their relationships and properties.

  - Third-Party Component Galleries: Import and use custom agents, tools, and workflows from external galleries to extend functionality.

- Magentic-One: A new generalist multi-agent application designed to tackle open-ended web and file-based tasks across various domains. It represents a significant step toward agents that can handle tasks commonly encountered in both professional and personal settings.
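The pause-and-resume behavior described under Mid-Execution Control can be illustrated with plain asyncio: the team checks an `asyncio.Event` before every turn, so clearing the event pauses the conversation and setting it resumes from the same turn. `PausableTeam` is a hypothetical class for illustration only; it is not how AutoGen Studio implements this feature.

```python
import asyncio
from typing import List, Tuple


class PausableTeam:
    """Hypothetical team whose agents take turns; a gate can pause it between turns."""

    def __init__(self, agents: List[str]) -> None:
        self.agents = agents
        self.log: List[str] = []
        self._running = asyncio.Event()
        self._running.set()  # start in the running (unpaused) state

    def pause(self) -> None:
        self._running.clear()

    def resume(self) -> None:
        self._running.set()

    async def run(self, turns: int) -> None:
        for turn in range(turns):
            await self._running.wait()  # blocks here while paused
            speaker = self.agents[turn % len(self.agents)]
            self.log.append(f"turn {turn}: {speaker}")
            await asyncio.sleep(0)  # yield control so a controller can intervene


async def demo() -> Tuple[bool, List[str]]:
    team = PausableTeam(["planner", "coder"])
    task = asyncio.create_task(team.run(turns=4))
    await asyncio.sleep(0)      # let the team take its first turn
    team.pause()
    before = len(team.log)
    await asyncio.sleep(0.01)   # while paused, no further turns are taken
    stayed_paused = len(team.log) == before
    team.resume()
    await task                  # the remaining turns continue where they stopped
    return stayed_paused, team.log


if __name__ == "__main__":
    stayed_paused, log = asyncio.run(demo())
    print("stayed paused:", stayed_paused)
    print(log)
```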

Seamless Migration to AutoGen v0.4

To ensure a smooth transition from the previous v0.2 API, we have implemented several measures addressing the core architectural changes:

- Maintained Abstraction Level: The AgentChat API preserves the abstraction level of v0.2, making it easy to migrate existing code to v0.4. For instance, AgentChat offers an AssistantAgent and a UserProxyAgent with behaviors similar to those in v0.2.

- Comprehensive Team Interfaces: The team interface includes implementations like RoundRobinGroupChat and SelectorGroupChat, covering all capabilities of the GroupChat class from v0.2.

- Expanded Functionalities: v0.4 introduces numerous new features, including streaming messages, enhanced observability, the ability to save and restore task progress, and the capability to resume paused actions from their previous state.

For detailed instructions, please refer to the migration guide.
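Two of the ideas above, a round-robin speaking order and saving and restoring task progress, can be sketched without any framework. `RoundRobinTeam` and its `save_state`/`load_state` methods are hypothetical; in AgentChat, teams and agents provide their own asynchronous `save_state()` and `load_state()` methods, whose exact return format is best checked in the AutoGen documentation.

```python
import json
from typing import Any, Dict, List


class RoundRobinTeam:
    """Hypothetical team: participants speak in a fixed, cyclic order."""

    def __init__(self, participants: List[str]) -> None:
        self.participants = participants
        self.next_index = 0
        self.transcript: List[str] = []

    def step(self, content: str) -> str:
        speaker = self.participants[self.next_index]
        self.transcript.append(f"{speaker}: {content}")
        self.next_index = (self.next_index + 1) % len(self.participants)
        return speaker

    def save_state(self) -> Dict[str, Any]:
        # Everything needed to resume: whose turn it is and what has been said.
        return {"next_index": self.next_index, "transcript": list(self.transcript)}

    def load_state(self, state: Dict[str, Any]) -> None:
        self.next_index = state["next_index"]
        self.transcript = list(state["transcript"])


if __name__ == "__main__":
    team = RoundRobinTeam(["writer", "critic"])
    team.step("draft v1")
    team.step("needs examples")
    team.step("draft v2")
    saved = json.dumps(team.save_state())  # could be written to disk or a database

    resumed = RoundRobinTeam(["writer", "critic"])
    resumed.load_state(json.loads(saved))
    print(resumed.step("looks good"))  # the critic speaks next
    print(resumed.transcript)
```

Keeping the state JSON-serializable is what makes it possible to pause a task in one process and resume it in another.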

Getting Started with AutoGen v0.4

Installation

To use AutoGen, install the required packages. AutoGen requires Python 3.10 or later. You can install AutoGen and its dependencies with pip, or follow the installation guide at https://github.com/microsoft/autogen:

Installation Code:

pip install -U "autogen-agentchat"
pip install "autogen-ext[openai]"

If you plan to use additional extensions such as OpenAI or LangChain, install the corresponding extras of autogen-ext as well. This demo uses Python 3.11 with the following packages:

Code:

pip install -U "autogen-agentchat" "autogen-ext[openai,langchain]"

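For developers planning to contribute or explore advanced features, you can install AutoGen directly from source. In the v0.4 repository the Python packages live under python/packages rather than at the repository root, so an editable install points at each package directory (the repository's contributor guide describes the recommended development setup):

Code:

git clone https://github.com/microsoft/autogen.git
cd autogen/python
pip install -e packages/autogen-core -e packages/autogen-agentchat -e "packages/autogen-ext[openai]"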
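After installing, a quick way to confirm that the interpreter meets the Python 3.10 requirement and that the v0.4 packages are present is to query their installed versions with the standard library. This is a convenience sketch, not part of AutoGen; autogen-core is listed because it is installed as a dependency of autogen-agentchat.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, List, Optional

# PyPI distribution names of the v0.4 packages.
PACKAGES = ["autogen-core", "autogen-agentchat", "autogen-ext"]


def installed_versions(names: List[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    found: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    if sys.version_info < (3, 10):
        sys.exit(f"AutoGen v0.4 requires Python 3.10+, found {sys.version.split()[0]}")
    for name, ver in installed_versions(PACKAGES).items():
        print(f"{name}: {ver or 'not installed'}")
```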

Getting Started with AutoGen: AssistantAgent Example

Introduction

The following example demonstrates how to initialize the AssistantAgent, integrate tools like web_search, and interact with the agent using on_messages() and on_messages_stream() methods.
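Tools such as `web_search` are ordinary Python functions; the agent exposes them to the model by describing each function's name, docstring, and typed parameters. The sketch below illustrates that idea with the standard `inspect` module. `describe_tool` and its simplified JSON-schema layout are our own illustration, not AutoGen's implementation, which wraps functions in its own tool type and supports many more annotation types.

```python
import inspect
import json
from typing import Any, Callable, Dict, List

# Simplified mapping from Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Build a function description (name, docstring, parameters) from a signature."""
    sig = inspect.signature(func)
    properties: Dict[str, Any] = {}
    required: List[str] = []
    for name, param in sig.parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults must be supplied
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


async def web_search(query: str) -> str:
    """Simulate a web search for the given query."""
    return "AutoGen is a programming framework for building multi-agent applications."


if __name__ == "__main__":
    print(json.dumps(describe_tool(web_search), indent=2))
```

The docstring matters: it becomes the description the model reads when deciding whether to call the tool.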

Code Snippet

Below is the Python code for setting up and running the AssistantAgent:

Code Implementation:

import asyncio

from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.messages import TextMessage
from autogen_agentchat.ui import Console
from autogen_core import CancellationToken
from autogen_ext.models.openai import OpenAIChatCompletionClient


# Step 1: Define a custom tool (a stub that returns a fixed string)
async def web_search(query: str) -> str:
    """Simulate a web search for the given query."""
    return "AutoGen is a programming framework for building multi-agent applications."


# Step 2: Create a model client
model_client = OpenAIChatCompletionClient(
    model="gpt-4o",
    # Uncomment and set your API key, or set the OPENAI_API_KEY environment variable
    # api_key="sk-proj--xxxxx"
)

# Step 3: Initialize the AssistantAgent
agent = AssistantAgent(
    name="assistant",
    model_client=model_client,
    tools=[web_search],  # Attach the custom tool
    system_message="Use tools to solve tasks.",
)


# Step 4: Define an asynchronous function to run the agent and get a response
async def assistant_run() -> None:
    print("Fetching response using on_messages()...")
    response = await agent.on_messages(
        [TextMessage(content="Find information on AutoGen", source="user")],
        cancellation_token=CancellationToken(),
    )
    # Print the agent's thought process (tool calls and results) and the final response
    print("Agent's thought process:", response.inner_messages)
    print("Final response:", response.chat_message.content)


# Step 5: Define an asynchronous function to stream messages from the agent
async def assistant_run_stream() -> None:
    print("\nStreaming response using on_messages_stream()...")
    await Console(
        agent.on_messages_stream(
            [TextMessage(content="Find information on AutoGen", source="user")],
            cancellation_token=CancellationToken(),
        )
    )


# Run both steps in a single event loop so the model client's
# HTTP connections are not shared across separate asyncio.run() calls
async def main() -> None:
    await assistant_run()
    await assistant_run_stream()


if __name__ == "__main__":
    asyncio.run(main())

Features Demonstrated

- Tool Integration: The web_search function (a stub that returns a fixed string) is registered as a tool the agent can call to fetch information.

- Model Initialization: OpenAIChatCompletionClient integrates GPT-4o as the model client for the agent.

- Fetching Responses: on_messages() retrieves the final response along with the agent's thought process.

- Streaming Responses: on_messages_stream() showcases the agent's asynchronous streaming capability, with real-time updates displayed using Console.

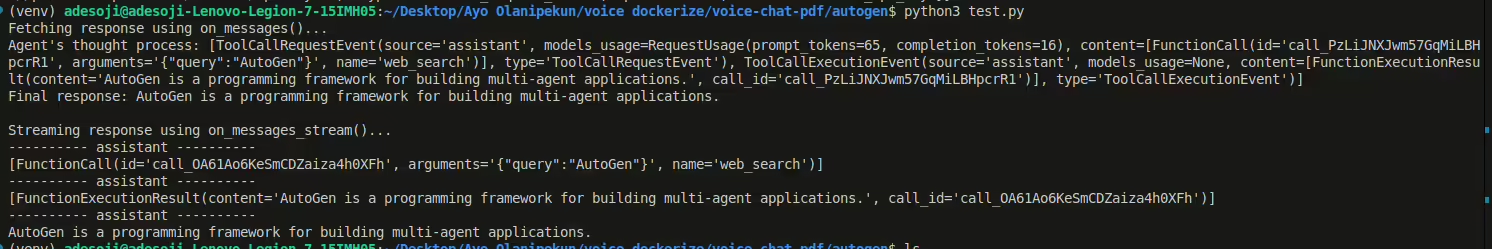

Code response in Terminal

When running the script, the output will include:

- The agent’s internal thought process (tool calls and results).

- The final response from the agent as seen in the picture above.

The streaming function (on_messages_stream()) will display real-time messages as the agent processes the query.

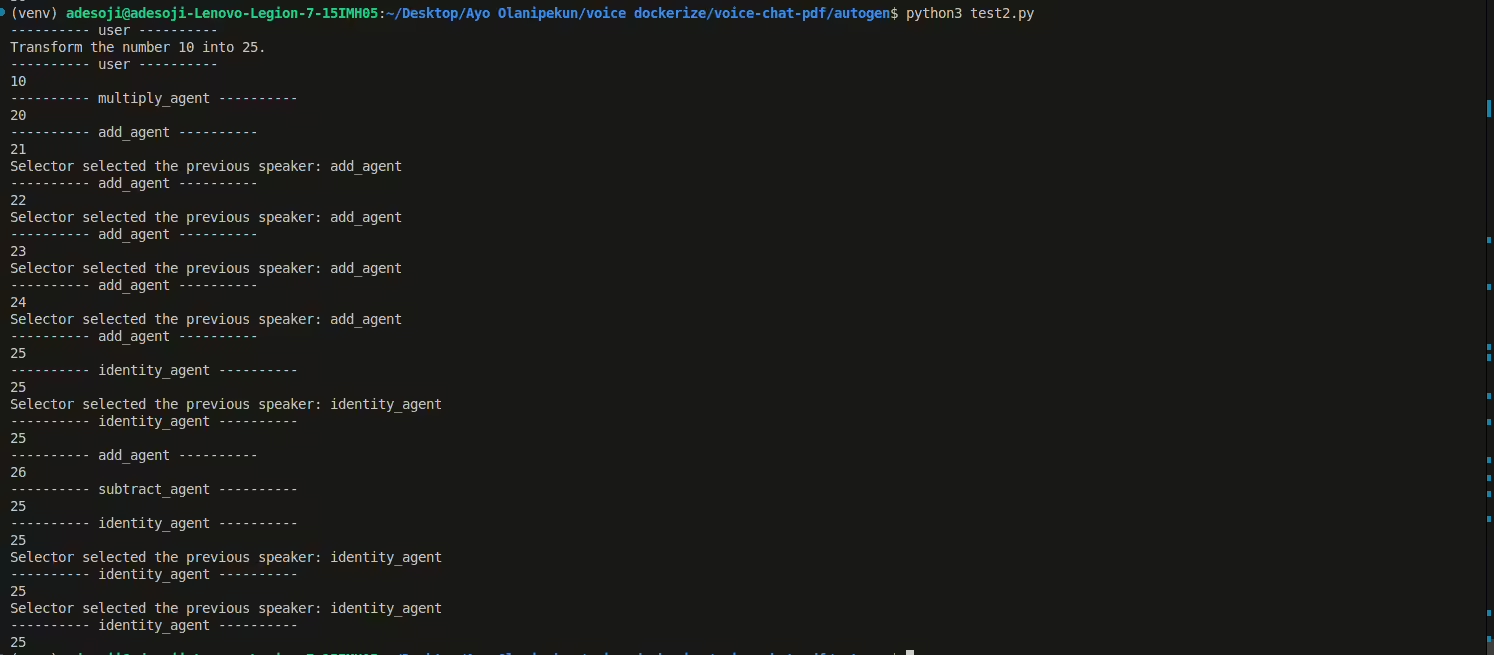
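Conceptually, `on_messages()` hands back a single finished result, while `on_messages_stream()` is an asynchronous stream that delivers intermediate events (such as tool calls and their results) before the final response, and `Console` prints each item as it arrives. The stdlib-only sketch below shows that consumption pattern; `fake_agent_stream`, `FinalResponse`, and `print_stream` are hypothetical stand-ins, not AutoGen classes.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator, Optional, Union


@dataclass
class FinalResponse:
    content: str


# A stream item is either an intermediate event (here just a string) or the final response.
StreamItem = Union[str, FinalResponse]


async def fake_agent_stream(task: str) -> AsyncIterator[StreamItem]:
    """Hypothetical agent: yields intermediate events, then the final response last."""
    yield f"tool call: web_search(query={task!r})"
    await asyncio.sleep(0.01)  # stand-in for waiting on a model or tool
    yield "tool result: AutoGen is a programming framework for multi-agent apps."
    yield FinalResponse(content="AutoGen is a framework for building multi-agent applications.")


async def print_stream(stream: AsyncIterator[StreamItem]) -> FinalResponse:
    """Console-like consumer: print each item as it arrives, return the final response."""
    final: Optional[FinalResponse] = None
    async for item in stream:
        if isinstance(item, FinalResponse):
            final = item
            print("final:", item.content)
        else:
            print("event:", item)
    if final is None:
        raise RuntimeError("stream ended without a final response")
    return final


if __name__ == "__main__":
    asyncio.run(print_stream(fake_agent_stream("AutoGen")))
```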

Advanced Code Example: Multi-Agent Collaboration with SelectorGroupChat

This example demonstrates a team of agents performing arithmetic operations to transform a number into a target value.
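Before looking at the agents, it helps to know what an efficient answer looks like. The breadth-first search below is a sketch that uses the same five operations the agents in this example implement (add 1, multiply by 2, subtract 1, halve rounding down, identity) and returns the fewest turns needed. Its `allow_repeat` flag mimics the `allow_repeated_speaker=False` setting used by the team: with repeats allowed, 10 becomes 25 in four turns (add, add, multiply, add); without them, no agent may act twice in a row and the minimum rises to five. `shortest_plan` and the `cap` bound on intermediate values are our own assumptions, added to keep the search finite.

```python
from collections import deque
from typing import Callable, Dict, List, Optional, Tuple

# The same operations the ArithmeticAgents in this example apply.
OPERATIONS: Dict[str, Callable[[int], int]] = {
    "add_agent": lambda x: x + 1,
    "multiply_agent": lambda x: x * 2,
    "subtract_agent": lambda x: x - 1,
    "divide_agent": lambda x: x // 2,
    "identity_agent": lambda x: x,
}

State = Tuple[int, Optional[str]]  # (current number, agent that spoke last)


def shortest_plan(
    start: int, target: int, allow_repeat: bool = True, cap: int = 1000
) -> Optional[List[Tuple[str, int]]]:
    """Breadth-first search for the fewest agent turns that turn start into target."""
    first: State = (start, None)
    parent: Dict[State, Optional[State]] = {first: None}
    queue = deque([first])
    while queue:
        state = queue.popleft()
        value, last_agent = state
        if value == target:
            # Walk back through the parents to recover the sequence of turns.
            plan: List[Tuple[str, int]] = []
            prev = parent[state]
            while prev is not None:
                number, agent_name = state
                plan.append((str(agent_name), number))
                state, prev = prev, parent[prev]
            return plan[::-1]
        for agent, op in OPERATIONS.items():
            if not allow_repeat and agent == last_agent:
                continue  # mirrors allow_repeated_speaker=False
            nxt: State = (op(value), agent)
            if abs(nxt[0]) <= cap and nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


if __name__ == "__main__":
    for allow in (True, False):
        plan = shortest_plan(10, 25, allow_repeat=allow) or []
        print(f"allow_repeat={allow}: {len(plan)} turns -> {plan}")
```

The search gives a baseline for judging how close the LLM-driven selector gets to an optimal sequence.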

Code Snippet

import asyncio
from typing import Callable, List, Sequence

from autogen_agentchat.agents import BaseChatAgent
from autogen_agentchat.base import Response
from autogen_agentchat.conditions import MaxMessageTermination
from autogen_agentchat.messages import ChatMessage, TextMessage
from autogen_agentchat.teams import SelectorGroupChat
from autogen_agentchat.ui import Console
from autogen_core import CancellationToken
from autogen_ext.models.openai import OpenAIChatCompletionClient


class ArithmeticAgent(BaseChatAgent):
    """An agent that applies one integer operation to the latest number it sees."""

    def __init__(self, name: str, description: str, operator_func: Callable[[int], int]) -> None:
        super().__init__(name, description=description)
        self._operator_func = operator_func
        self._message_history: List[ChatMessage] = []

    @property
    def produced_message_types(self) -> Sequence[type[ChatMessage]]:
        return (TextMessage,)

    async def on_messages(self, messages: Sequence[ChatMessage], cancellation_token: CancellationToken) -> Response:
        # Use the newest incoming number, or fall back to the last one in history
        if not messages:
            if not self._message_history:
                raise ValueError(f"Agent {self.name} has no history to work with.")
            number = int(self._message_history[-1].content)
        else:
            number = int(messages[-1].content)
        self._message_history.extend(messages)
        result = self._operator_func(number)
        response_message = TextMessage(content=str(result), source=self.name)
        self._message_history.append(response_message)
        return Response(chat_message=response_message)

    async def on_reset(self, cancellation_token: CancellationToken) -> None:
        self._message_history = []


async def run_selector_group_chat() -> None:
    # Step 1: Define agents
    add_agent = ArithmeticAgent("add_agent", "Adds 1 to the number.", lambda x: x + 1)
    multiply_agent = ArithmeticAgent("multiply_agent", "Multiplies the number by 2.", lambda x: x * 2)
    subtract_agent = ArithmeticAgent("subtract_agent", "Subtracts 1 from the number.", lambda x: x - 1)
    divide_agent = ArithmeticAgent("divide_agent", "Divides the number by 2 and rounds down.", lambda x: x // 2)
    identity_agent = ArithmeticAgent("identity_agent", "Returns the number as is.", lambda x: x)

    # Step 2: Define a termination condition
    termination_condition = MaxMessageTermination(max_messages=15)

    # Step 3: Define the SelectorGroupChat
    selector_group_chat = SelectorGroupChat(
        participants=[add_agent, multiply_agent, subtract_agent, divide_agent, identity_agent],
        model_client=OpenAIChatCompletionClient(model="gpt-4", api_key="xxxxxx"),  # Replace with your API key
        termination_condition=termination_condition,
        allow_repeated_speaker=False,
        selector_prompt=(
            "You are a team of agents working to transform the number into 25 efficiently.\n"
            # {roles} lists each agent with its description; {participants} lists the agent names
            "Agents and their descriptions:\n{roles}\n"
            "Available agent names:\n{participants}\n"
            "Conversation history:\n{history}\n"
            "Goal:\nTransform the given number into 25 efficiently.\n"
            "Guidelines:\n"
            "- Choose the agent that will make the most progress toward 25 without exceeding it.\n"
            "- Avoid backtracking or oscillations.\n"
            "- Prefer multiplication or addition for increasing the number when below 25.\n"
            "- Use subtraction or division to reduce the number if it exceeds 25.\n"
            "- Minimize the number of steps to reach 25.\n"
            "Return only the role name of the next agent to speak."
        ),
    )

    # Step 4: Define the task (an instruction plus the starting number)
    task = [
        TextMessage(content="Transform the number 10 into 25.", source="user"),
        TextMessage(content="10", source="user"),
    ]

    # Step 5: Run the SelectorGroupChat and stream the response
    await Console(selector_group_chat.run_stream(task=task))


# Run the example using asyncio
if __name__ == "__main__":
    asyncio.run(run_selector_group_chat())
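To see roughly what the selector model receives on each turn, the sketch below fills a shortened template that uses the same three placeholders with `str.format`. How each field is rendered here (one `name: description` line per agent for `{roles}`, a list of names for `{participants}`, and `source: content` lines for `{history}`) is our assumption, not a guarantee of AutoGen's exact formatting; `render_selector_prompt` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

TEMPLATE = (
    "Agents and their descriptions:\n{roles}\n"
    "Available agent names:\n{participants}\n"
    "Conversation history:\n{history}\n"
    "Return only the role name of the next agent to speak."
)


def render_selector_prompt(agents: Dict[str, str], history: List[Tuple[str, str]]) -> str:
    """Fill the selector template; the per-field formatting is an assumption."""
    roles = "\n".join(f"{name}: {desc}" for name, desc in agents.items())
    participants = str(list(agents))
    transcript = "\n".join(f"{source}: {content}" for source, content in history)
    return TEMPLATE.format(roles=roles, participants=participants, history=transcript)


if __name__ == "__main__":
    agents = {
        "add_agent": "Adds 1 to the number.",
        "multiply_agent": "Multiplies the number by 2.",
    }
    history = [("user", "Transform the number 10 into 25."), ("user", "10")]
    print(render_selector_prompt(agents, history))
```

Rendering the prompt this way makes it easy to check that each placeholder sits under a label that matches its content.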

Features Demonstrated

- Custom Agent Creation: Each ArithmeticAgent performs a specific operation (e.g., addition, multiplication), and the agents collaborate within the SelectorGroupChat.

- Termination Conditions: MaxMessageTermination ends the conversation after 15 messages.

- Selector Prompt: Provides rules and guidelines that steer the selector model toward picking agents that reach the goal efficiently.

- Streaming Response: Console streams the conversation dynamically, showing agent interactions in real time.
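MaxMessageTermination only caps the conversation length. AgentChat termination conditions can also be combined, for example with `|` so that the run stops when either condition is met. The sketch below mimics that composition in plain Python so the logic is visible; `Condition`, `AnyOf`, `MaxMessages`, and `ExactContent` are hypothetical classes, not AutoGen's. Matching the whole message rather than searching for a substring matters here, because the task text itself mentions 25.

```python
from typing import List


class Condition:
    """Hypothetical base class: decides whether a conversation should stop."""

    def should_stop(self, messages: List[str]) -> bool:
        raise NotImplementedError

    def __or__(self, other: "Condition") -> "Condition":
        return AnyOf(self, other)


class AnyOf(Condition):
    """Stops as soon as any of the wrapped conditions says to stop."""

    def __init__(self, *conditions: Condition) -> None:
        self.conditions = conditions

    def should_stop(self, messages: List[str]) -> bool:
        return any(c.should_stop(messages) for c in self.conditions)


class MaxMessages(Condition):
    def __init__(self, limit: int) -> None:
        self.limit = limit

    def should_stop(self, messages: List[str]) -> bool:
        return len(messages) >= self.limit


class ExactContent(Condition):
    """Stops when the latest message is exactly the given text (e.g. the target '25')."""

    def __init__(self, text: str) -> None:
        self.text = text

    def should_stop(self, messages: List[str]) -> bool:
        return bool(messages) and messages[-1].strip() == self.text


if __name__ == "__main__":
    stop = MaxMessages(15) | ExactContent("25")
    transcript = ["Transform the number 10 into 25.", "10", "11", "12", "24", "25"]
    for i in range(1, len(transcript) + 1):
        if stop.should_stop(transcript[:i]):
            print(f"stopped after {i} messages: {transcript[i - 1]}")
            break
```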

How to Use

- Install the required AutoGen packages (autogen-agentchat and autogen-ext[openai]) and their dependencies, and save the code as test2.py.

- Replace the placeholder API key (xxxxxx) with your actual OpenAI API key.

- Run the code using Python 3.10 or higher.

- Observe the agent interactions and final output in the console.

This example shows the versatility of AutoGen in multi-agent collaboration scenarios. The terminal output from the code is shown in the image below.

Conclusion

These examples provide a hands-on introduction to AutoGen's AssistantAgent and SelectorGroupChat, making them a good starting point for exploring agentic AI workflows. For further details, refer to the official documentation.
