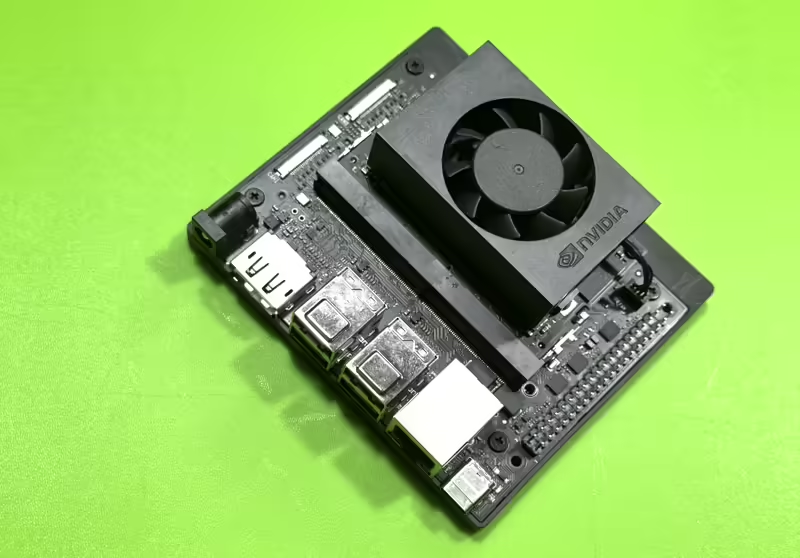

I’ve been eyeing the NVIDIA Jetson lineup for ages, and when the Orin Nano Super was released, I knew I had to get my hands on one. After weeks of hunting—and honestly, some desperate emails to NVIDIA—I finally scored this tiny yet mighty AI computer.

My first thought?

“Let’s get Docker Desktop running on this thing!” As someone who lives and breathes containers, I was curious how Docker would perform on this edge device, especially for AI workloads. Here’s my journey of getting Docker Desktop up and running on the Jetson Orin Nano Super, complete with surprises, workarounds, and plenty of “aha!” moments.

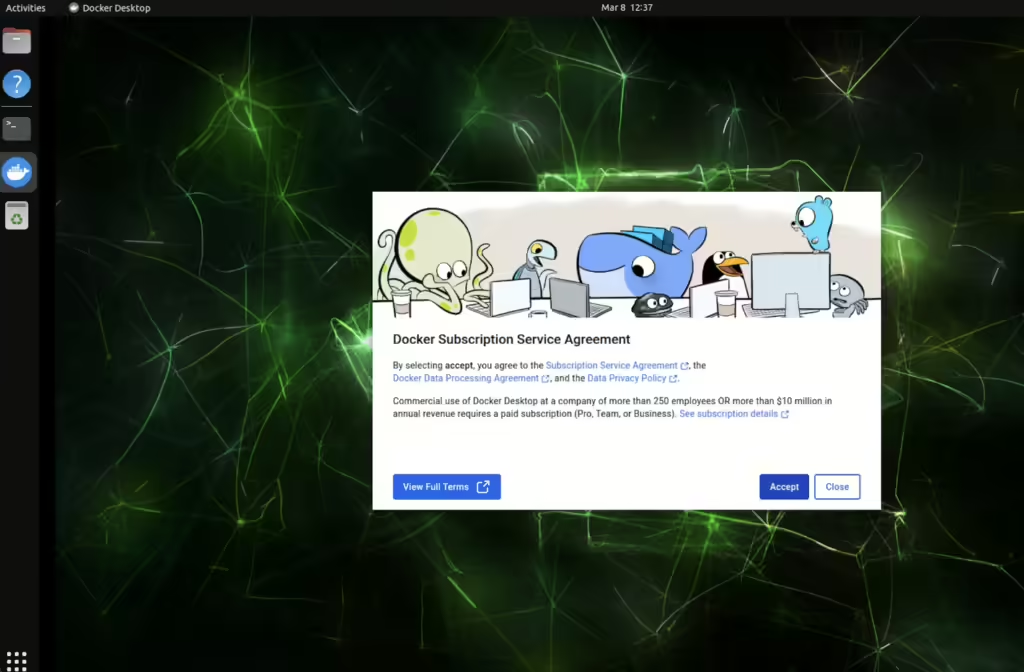

My first challenge was getting Docker Desktop up and running. Here’s the thing – Docker Desktop isn’t officially supported on the Jetson Orin Nano Super yet, but I love a good challenge. I decided to try the Arm-based package, figuring that since the Jetson runs on Arm architecture, it might just work.

After downloading the Docker Desktop arm64 package and accepting the usual agreements, I was pleasantly surprised to see it install without major hiccups. A quick check with `docker desktop status` confirmed I was up and running with a valid session ID. That feeling when something works on the first try? Priceless!

Will Docker AI Assistant work on Jetson Orin?

The next exciting part was exploring Docker AI features – particularly Ask Gordon, the built-in AI assistant. I was curious about what was possible with the MCP (Model Context Protocol) integration. Setting up the gordon-mcp.yml file with various services like time, postgres, git, and filesystem allowed Gordon to access more context when answering my questions.

Setting Up the Hardware

Before diving into Docker Desktop, I needed to get the basic hardware configuration right. The Jetson Orin Nano Super is impressive on its own, but I wanted to maximize its potential with a few additions:

- NVIDIA Jetson Orin Nano Super

- A Crucial NVMe SSD for faster storage and better performance

- A wireless network adapter, so I wasn't tethered to an Ethernet cable

The hardware setup was straightforward, though I had to be careful when connecting components to the board. The Jetson’s compact form factor means you need to be precise when adding peripherals.

Preparing the Software Environment

The Jetson requires some preparation before you can start installing additional software. Here’s what I did:

- Set up a Linux host machine running Ubuntu 20.04 or later (I used Ubuntu 22.04) to run the flashing tools

- Downloaded the NVIDIA SDK Manager onto that host to flash the operating system onto the Jetson
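If you want to confirm the host meets that Ubuntu requirement before launching SDK Manager, here is a minimal sketch. It assumes the host exposes the standard `/etc/os-release` file; the helper names `ubuntu_version_ok` and `check_sdkm_host` are hypothetical.

```shell
# Hypothetical helper: succeeds if an Ubuntu VERSION_ID such as "22.04"
# is 20.04 or newer. Assumes the usual MAJOR.MINOR format.
ubuntu_version_ok() {
    case $1 in
        [0-9]*.[0-9]*) ;;          # looks like MAJOR.MINOR
        *) return 1 ;;
    esac
    major=${1%%.*}                 # "22.04" -> "22"
    minor=${1#*.}                  # "22.04" -> "04" (test's -ge parses it as decimal 4)
    [ "$major" -gt 20 ] || { [ "$major" -eq 20 ] && [ "$minor" -ge 4 ]; }
}

# Hypothetical helper: reads an os-release file (default /etc/os-release)
# and reports whether the host looks like a suitable SDK Manager host.
check_sdkm_host() {
    file=${1:-/etc/os-release}
    # os-release is a shell-compatible KEY=value file; source it inside
    # command substitutions so ID/VERSION_ID don't leak into the caller.
    id=$( . "$file" && printf '%s' "$ID" )
    ver=$( . "$file" && printf '%s' "$VERSION_ID" )
    if [ "$id" = ubuntu ] && ubuntu_version_ok "$ver"; then
        echo "OK: Ubuntu $ver"
    else
        echo "Unsupported host: ${id:-unknown} ${ver:-?}" >&2
        return 1
    fi
}
```

Running `check_sdkm_host` on the host prints `OK: Ubuntu 22.04` on a setup like mine, and returns non-zero otherwise.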

One tricky part was putting the device into recovery mode using the jumper pins before flashing the OS to the NVMe SSD. I connected the host to the board's USB Type-C port with a USB Type-A to Type-C cable, and after a few attempts (and some frustrated sighs), I got it working.
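A quick way to tell whether the jumper trick worked is to look at the host's USB devices. As a hedged sketch: NVIDIA's USB vendor ID is `0955`, and on hosts whose USB ID database knows the device, a Jetson in recovery mode typically appears in `lsusb` as `NVIDIA Corp. APX`. The helper name `jetson_in_recovery` is hypothetical, and the "APX" label is an assumption about your host's `usb.ids` data.

```shell
# Hypothetical helper: reads `lsusb` output on stdin and succeeds if it
# contains an NVIDIA device (vendor ID 0955) labelled "APX", which is how
# a Jetson in forced-recovery mode commonly shows up.
jetson_in_recovery() {
    grep -Eq 'ID 0955:[0-9a-fA-F]{4} .*APX'
}
```

Usage on the host: `lsusb | jetson_in_recovery && echo "Jetson is in recovery mode"`. If it never matches, re-check the jumper placement and the cable before retrying the flash.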

Installing Docker Desktop

Here’s where things get interesting. Docker Desktop isn’t officially supported on the NVIDIA Jetson Orin Nano Super, but I wasn’t about to let that stop me. Since the Jetson runs on Arm architecture, I figured the Arm-based package might work.
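Before grabbing a package, it's worth confirming which architecture the board actually reports. On the Jetson, `uname -m` prints `aarch64`, which corresponds to the Debian `arm64` architecture in the package name used below. A minimal sketch (the helper name `deb_arch` is hypothetical):

```shell
# Hypothetical helper: map a kernel machine name (default: `uname -m`)
# to the Debian architecture suffix used in .deb file names.
deb_arch() {
    machine=${1:-$(uname -m)}
    case "$machine" in
        aarch64|arm64) echo arm64 ;;
        x86_64|amd64)  echo amd64 ;;
        *) echo "unsupported architecture: $machine" >&2; return 1 ;;
    esac
}
```

For example, `[ "$(deb_arch)" = arm64 ] && echo "use the arm64 package"`. On Debian-based systems, `dpkg --print-architecture` reports the same value directly.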

1. Download Docker Desktop

I downloaded the Docker Desktop arm64 package directly from this link and installed it using:

```shell
sudo apt install ./docker-desktop-arm64.deb
```

2. Accept the Agreement

3. Verifying the Installation

To make sure everything was working properly, I ran a few checks:
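The individual checks aren't listed in this post. As a hedged sketch, these are standard Docker CLI commands that make a reasonable smoke test, not necessarily the ones I ran; the helper name `verify_docker_install` is hypothetical.

```shell
# Hypothetical smoke test: run a few standard Docker CLI commands and
# report which ones succeed. Returns non-zero if any check fails.
verify_docker_install() {
    failed=0
    for check in "version" "info" "context show" "run --rm hello-world"; do
        # $check is intentionally unquoted so "context show" becomes
        # two separate arguments to docker.
        if docker $check >/dev/null 2>&1; then
            echo "ok:   docker $check"
        else
            echo "FAIL: docker $check"
            failed=1
        fi
    done
    return "$failed"
}
```

Note that `docker run --rm hello-world` pulls an image, so it needs network access. Because the checks run against whatever Docker context is active, `docker context show` also tells you whether you're talking to Docker Desktop (its context is usually named `desktop-linux`) or to a separately installed Docker Engine.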

4. Checking Docker Desktop CLI Status

The output confirmed that Docker Desktop was indeed running:

```
$ docker desktop status
Name        Value
Status      running
SessionID   c2bedfc9-ca9c-47dc-931e-f5f2f6b7de16
```

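If you want to script this check, for instance to wait for Docker Desktop after a reboot, a small parser over that output is enough. This sketch assumes the two-column `Name`/`Value` layout shown above; the helper names are hypothetical.

```shell
# Hypothetical helper: reads `docker desktop status` output on stdin and
# succeeds only if the "Status" row says "running".
desktop_running() {
    awk '$1 == "Status" { found = 1; status = $2 }
         END { exit !(found && status == "running") }'
}

# Hypothetical helper: print the SessionID value from the same output.
desktop_session_id() {
    awk '$1 == "SessionID" { print $2 }'
}
```

For example: `until docker desktop status | desktop_running; do sleep 2; done`.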

5. Enabling Docker AI Features

One of the main reasons I wanted to run Docker Desktop on the Jetson was to explore Docker AI features, particularly Ask Gordon—the built-in AI assistant designed to help with Docker-related tasks.

Ask Gordon is part of Docker’s suite of AI-powered capabilities (collectively referred to as “Docker AI”). These features are currently in Beta and not enabled by default. Like all LLM-based tools, Gordon’s responses might occasionally be inaccurate, but it’s still incredibly useful for streamlining Docker workflows.

To enable Docker AI, I went to Settings > Features in development and toggled on the Docker AI option. After restarting Docker Desktop, I was ready to start exploring Gordon’s capabilities.

6. Adding MCP to Ask Gordon

The real power of Gordon comes from its integration with the Model Context Protocol (MCP). Anthropic recently announced this open protocol that standardizes how applications provide context to large language models. MCP functions as a client-server protocol, where Gordon (the client) sends requests, and the server processes them to deliver context to the AI.
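To make the client-server idea concrete: MCP messages are JSON-RPC 2.0, and over the stdio transport each message is one line of JSON. A client first sends `initialize`, then the `notifications/initialized` notification, and can then ask for the server's tools with `tools/list`. The sketch below only prints those three client messages; the protocol revision string `2024-11-05` is one published MCP version, and the client name and helper name are placeholders.

```shell
# Hypothetical helper: print the opening client->server messages of an
# MCP session as newline-delimited JSON-RPC 2.0 (stdio transport framing).
mcp_list_tools_request() {
    printf '%s\n' \
      '{"jsonrpc":"2.0","id":1,"method":"initialize","params":{"protocolVersion":"2024-11-05","capabilities":{},"clientInfo":{"name":"shell-sketch","version":"0.1"}}}' \
      '{"jsonrpc":"2.0","method":"notifications/initialized"}' \
      '{"jsonrpc":"2.0","id":2,"method":"tools/list"}'
}
```

Assuming an MCP server image speaks MCP over stdio, you could try `{ mcp_list_tools_request; sleep 2; } | docker run -i --rm mcp/time` and expect an `initialize` result followed by a tool list. The `sleep` keeps stdin open briefly, since some servers exit as soon as their input closes.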

When you run the `docker ai` command in your terminal to ask a question, Gordon looks in your working directory for a `gordon-mcp.yml` file listing the MCP servers to use in that context. The `gordon-mcp.yml` file is a Docker Compose file that configures MCP servers as Compose services for Gordon to access.
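Since Gordon only picks up `gordon-mcp.yml` from the directory where you run `docker ai`, a quick pre-flight check can save some head-scratching. The configuration below also reads `GITHUB_PERSONAL_ACCESS_TOKEN` from the environment. Assuming standard Compose interpolation applies, an unset variable silently becomes an empty string (Compose prints a warning), so the sketch checks for that too. The helper name is hypothetical.

```shell
# Hypothetical pre-flight check before running `docker ai` with MCP.
# Usage: gordon_mcp_preflight [directory]   (defaults to the current one)
gordon_mcp_preflight() {
    dir=${1:-.}
    status=0
    if [ ! -f "$dir/gordon-mcp.yml" ]; then
        echo "missing: $dir/gordon-mcp.yml (run docker ai from that directory)" >&2
        status=1
    fi
    # The gh service interpolates this variable; empty means no credentials.
    if [ -z "${GITHUB_PERSONAL_ACCESS_TOKEN:-}" ]; then
        echo "warning: GITHUB_PERSONAL_ACCESS_TOKEN is not set" >&2
        status=1
    fi
    return "$status"
}
```

For example: `gordon_mcp_preflight && docker ai mcp`.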

To set up MCP, I created a file called gordon-mcp.yml in my working directory:

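```yaml
services:
  time:
    image: mcp/time
  postgres:
    image: mcp/postgres
    # Connection string for a PostgreSQL server listening on the host's port 5433
    command: postgresql://postgres:dev@host.docker.internal:5433/postgres
  git:
    image: mcp/git
    volumes:
      # macOS-style home path; on the Jetson (Linux) this would normally
      # be a directory under /home instead.
      - /Users/ajeetsraina:/Users/ajeetsraina
  gh:
    image: mcp/github
    environment:
      GITHUB_PERSONAL_ACCESS_TOKEN: ${GITHUB_PERSONAL_ACCESS_TOKEN}
  fetch:
    image: mcp/fetch
  fs:
    image: mcp/filesystem
    command:
      - /rootfs
    volumes:
      # Expose the current directory to the filesystem server as /rootfs
      - .:/rootfs
```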

This configuration gave Gordon access to various services like time, PostgreSQL databases, git repositories, GitHub, web content via fetch, and the local filesystem. To verify that everything was set up correctly, I ran:

```shell
$ docker ai mcp
```

And there it was: Gordon with enhanced context awareness!

Running AI Models on the Jetson

Next, I wanted to see what was possible with the Jetson’s own AI capabilities. I turned to the Jetson Generative AI Lab, which serves as a hub for discovering the latest generative AI models that can run locally on Jetson devices.

One of the fascinating aspects of the Jetson platform is its ability to run large language models and vision transformers locally. NVIDIA has built dedicated pages for each supported model, complete with Docker run commands and Docker Compose files.

I decided to try running the DeepSeek R1 model using Ollama. You can see a quick demonstration in this asciinema recording, "A Quick Glimpse of DeepSeek R1 using Ollama on Jetson Orin Nano Super": https://asciinema.org/a/JeWbUhLk6EUA7u7DMzKGdxjRp
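Once an Ollama server is up, you can also talk to it without the interactive CLI through its REST API. `POST /api/generate` on port 11434 is Ollama's default, and this sketch assumes the container publishes that port to the host. The model tag `deepseek-r1:1.5b`, the helper names, and the `OLLAMA_URL` variable are assumptions for illustration.

```shell
# Hypothetical helper: build a non-streaming /api/generate request body.
# Only backslashes and double quotes are escaped; assumes a one-line prompt.
ollama_generate_body() {
    model=$1
    prompt=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
    printf '{"model":"%s","prompt":"%s","stream":false}\n' "$model" "$prompt"
}

# Hypothetical helper: send a prompt to an Ollama server.
# OLLAMA_URL is a made-up variable for this sketch (default localhost:11434).
ask_ollama() {
    curl -s "${OLLAMA_URL:-http://localhost:11434}/api/generate" \
         -H 'Content-Type: application/json' \
         -d "$(ollama_generate_body "$1" "$2")"
}
```

For example: `ask_ollama deepseek-r1:1.5b "Explain containers in one sentence"`. The generated text is in the `response` field of the returned JSON, so `| jq -r .response` extracts it if `jq` is installed.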

Troubleshooting GPU Access

When I first tried to run the DeepSeek R1 model using a Docker Compose file from NVIDIA’s examples, I hit a roadblock:

```
docker: Error response from daemon: could not select device driver "" with capabilities: [[gpu]]
```

The issue was in how GPU access was configured in the Docker Compose file. Initially, it specified:

```yaml
devices:
  - driver: nvidia
```

After some searching and experimenting, I found that the fix was to use:

```yaml
runtime: nvidia
```

This simple change made all the difference, allowing Docker containers to access the Jetson’s GPU capabilities properly.
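`runtime: nvidia` only works if the Docker daemon you're talking to actually has a runtime registered under that name. One way to check is `docker info --format '{{json .Runtimes}}'`, which prints the registered runtimes as a JSON object. A minimal sketch of checking that output (the helper name is hypothetical):

```shell
# Hypothetical helper: reads the JSON printed by
#   docker info --format '{{json .Runtimes}}'
# on stdin and succeeds if a runtime named "nvidia" is registered.
has_nvidia_runtime() {
    grep -Eq '"nvidia"[[:space:]]*:'
}
```

Usage: `docker info --format '{{json .Runtimes}}' | has_nvidia_runtime || echo "no nvidia runtime registered"`. If it's missing, the NVIDIA Container Toolkit's `nvidia-ctk runtime configure --runtime=docker` (followed by a daemon restart) is the usual way to register it. This applies to the daemon behind your current Docker context, which may not be the one Docker Desktop runs.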

In future blog posts, I’ll dive deeper into:

- Performing AI model conversion using Hugging Face and Docker Desktop

- Optimizing container performance on the Jetson platform

- Creating edge AI applications that leverage Docker’s containerization benefits

- Working around the limitations of running Docker Desktop on unsupported hardware

Conclusion

Getting Docker Desktop running on the NVIDIA Jetson Orin Nano Super was a rewarding challenge. While there are certainly some limitations, the core functionality works well enough to make this a viable development environment for containerized edge AI applications.

If you’re working with Jetson devices and Docker, I’d love to hear about your experiences. Drop a comment below or reach out on Twitter!

Until next time, happy containerizing on the edge!