First and foremost, what is Gemma? Gemma is a family of open, lightweight, state-of-the-art AI models developed by Google, built from the same research and technology used to create the Gemini models, designed to democratize AI and empower developers and researchers. Running generative artificial intelligence (AI) models like Gemma can be challenging without the right hardware or configuration. Fortunately, open-source frameworks such as llama.cpp and Ollama make this easier by providing a pre-configured runtime environment. Using these frameworks, you can run quantized versions of Gemma on a typical laptop—even without a GPU.

This guide walks you through setting up and using Ollama to run Gemma, focusing on how to install, configure, and generate text from Gemma models.

Why Use Quantized Models?

Quantized Gemma models use the Georgi Gerganov Unified Format (GGUF) and are designed to run with fewer compute resources. By storing and processing data with lower precision, they reduce hardware requirements. The trade-off is a slight loss in generation quality compared to larger, higher-precision model variants. However, for many use cases, the performance and resource savings of quantized models outweigh this quality difference.

Top Reasons to Use Gemma 3

1. Local Deployment with Minimal Hardware

Gemma 3 is designed to run efficiently on consumer hardware. With quantized models (GGUF format), you can run powerful AI capabilities directly on your laptop—even without a dedicated GPU. This makes AI accessible to developers with standard equipment.

2. Open-Source Flexibility

As an open family of models from Google, Gemma 3 gives you complete freedom to customize, fine-tune, and adapt the models for your specific use cases. This openness encourages innovation and experimentation without restrictive licensing.

3. Research-Backed Quality

Built on the same technology that powers Google’s premium Gemini models, Gemma 3 brings enterprise-grade AI capabilities to the open-source community. It leverages cutting-edge research while remaining lightweight and accessible.

4. Variety of Model Sizes

Gemma 3 comes in multiple parameter sizes (1B, 4B, 12B, 27B), letting you choose the right balance between performance and resource requirements. This flexibility means you can scale your implementation based on your specific needs and available hardware.

5. Easy Integration with Ollama

Tools like Ollama make working with Gemma 3 straightforward—just a few commands to get up and running. This simplicity removes technical barriers for developers who want to start experimenting quickly.

6. Multimodal Capabilities

Larger variants of Gemma 3 support both text and image inputs, allowing for creative applications that combine visual and textual understanding (captioning, visual question answering, etc.).

7. Active Community

As part of Google’s open model ecosystem, Gemma 3 benefits from a growing community of developers sharing fine-tuned models, implementation tips, and creative applications.

8. Lower Compute Costs

By running locally instead of depending on cloud APIs, Gemma 3 eliminates ongoing usage fees. This makes it ideal for startups, researchers, and hobbyists with limited budgets.

9. Privacy Advantages

Running models locally means your data never leaves your device. For applications handling sensitive information, this local execution provides significant privacy benefits over cloud-based alternatives.

10. Education and Learning

Gemma 3’s accessible nature makes it an excellent platform for learning about large language models, fine-tuning processes, and AI implementation—perfect for students and self-taught developers entering the field.

Setup

1. Get Access to Gemma Models

Before working with Gemma, you must first request access via kaggle and review the Gemma terms of use. Once approved, you’ll be able to download or pull these models into Ollama.

2. Install Ollama

The Ollama software provides a local environment to run the models. To install Ollama:

-

Navigate to the Ollama download page.

-

Select your operating system, then click the Download button or follow any prompts provided.

- Windows: Run the installer (*.exe) and follow the steps.

- macOS: Unpack the ZIP and move the Ollama folder into your Applications directory.

- Linux: Follow the instructions in the provided Bash script installer.

-

Confirm Ollama is installed by opening your launchpad in your MacOS to search for the Ollama image as seen in the image below, then in a terminal, run the code below:

ollama --versionYou should see output similar to ollama version is 0.5.11 as seen in the image below: ollama version #.#.##.

3. Configure Gemma in Ollama

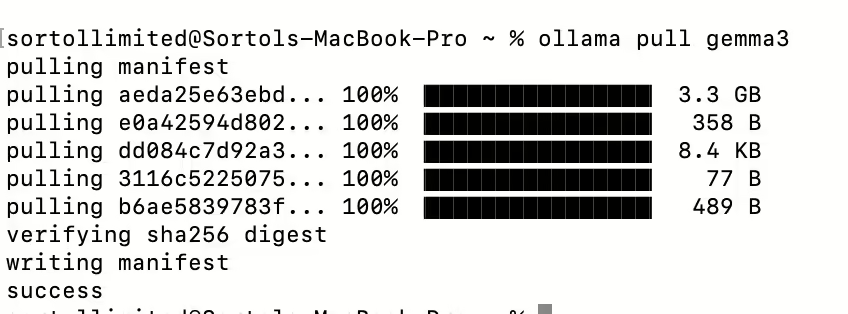

But note that you need to have the latest or newest version of Ollama in order not to get 412 Error, Error: pull model manifest: 412, The fix for this 😐 is to download the latest version or click the update option in your Ollama icon in your Dock. After installing Ollama, you can pull specific Gemma models into your local environment with the ollama pull command:

ollama pull gemma3

By default, this downloads the 4B, Q4_0 quantized version of Gemma 3. You can verify the download with:

ollama list

If you want a different model size, specify it by appending a parameter size (1B, 4B, 12B, 27B). For example:

ollama pull gemma3:12b

For a full list of available Gemma 3 variants, see Gemma 3 tags on the Ollama website. You can also explore older or alternate Gemma versions, such as Gemma 2 and Gemma.

Generate Responses

Once you have installed a Gemma model with Ollama, you can generate responses directly through the command line or via Ollama’s local web service.

Generate from the Command Line

Use the run command to pass a prompt to the model:

ollama run gemma3 "roses are red"

If your Gemma model supports images, include an image path as follows:

ollama run gemma3 "caption this image /Users/sortollimited/Downloads/jq-filters.svg"

Note: Gemma 3 with 1B parameters does not support images; only text input is available for that model size.

Generate via Local Web Service

By default, Ollama sets up a server on localhost:11434. You can send a request to this endpoint using curl:

curl http://localhost:11434/api/generate -d '{

"model": "gemma3",

"prompt": "roses are red"

}'

For visual inputs, provide a list of base64-encoded images:

curl http://localhost:11434/api/generate -d '{

"model": "gemma3",

"prompt": "caption this image",

"images": [ ... ]

}'

Tuned Gemma Models

Ollama offers several official Gemma model variants that are already quantized and saved in GGUF format. If you have a tuned Gemma model, you can convert it to GGUF and run it with Ollama. For instructions, see the Ollama README.

Conclusion

With Ollama, running Gemma on a local machine is straightforward. By pulling prequantized GGUF models, you can start generating text (and sometimes image-related responses) without powerful GPUs or specialized hardware. Whether you’re experimenting with prompts or refining a custom Gemma model, Ollama provides a convenient, open-source solution to get started quickly.

Collabnix wishes you a happy experimenting with Gemma and Ollama 😊 !