Introduction to DeepSeek-R1 and Ollama

In the era of generative AI, efficiently deploying large language models (LLMs) in production environments has become crucial for developers and organizations. DeepSeek-R1 is a reasoning-focused LLM built for complex natural language processing tasks, with strong performance in text generation, question answering, and semantic analysis. Its smaller distilled variants make it practical to deploy in resource-constrained environments while keeping accuracy high.

This is where Ollama comes into play – an open-source framework that simplifies running LLMs locally. The Ollama Operator extends this capability to Kubernetes, providing Kubernetes-native management of LLM workloads through custom resources. This operator abstracts away the complexity of managing GPU resources, model scaling, and persistent storage, making it ideal for production deployments.

In this demo, we will walk through the steps to deploy the DeepSeek-R1 model on your own Kubernetes cluster using the Ollama Operator. The demo covers installing the operator, deploying a model, and accessing it.

Prerequisites

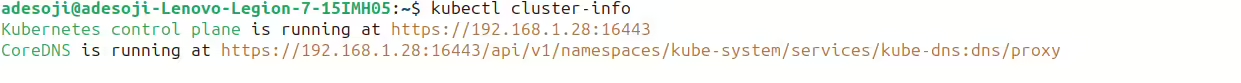

- A running Kubernetes cluster (minikube, kind, or any other distribution)
- kubectl configured to interact with your cluster
- Go installed for installing the kollama CLI
- At least 8GB RAM on your node (more for larger models)

Make sure your environment is ready before you begin, and confirm that the cluster is reachable.
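One way to check the prerequisites from a terminal is sketched below. The helper names have_cmd and check_prereqs are illustrative and not part of any tool used in this demo; the sketch only tests whether the required commands are on your PATH.

```shell
# Prerequisite check (sketch): report which required tools are missing.

# have_cmd NAME: succeeds if NAME is found on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# check_prereqs TOOL...: prints one line per tool and
# returns non-zero if at least one tool is missing.
check_prereqs() {
  local missing=0
  local tool
  for tool in "$@"; do
    if have_cmd "$tool"; then
      echo "ok: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Usage (run manually; the kubectl calls need a reachable cluster):
#   check_prereqs kubectl go && kubectl cluster-info && kubectl get nodes
```

If both tools are reported as ok, kubectl cluster-info and kubectl get nodes should confirm that the cluster responds and that at least one node is Ready.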

Step 1: Install the Ollama Operator

First, install the operator on your Kubernetes cluster by applying the installation YAML. Open your terminal and run:

kubectl apply --server-side=true -f https://raw.githubusercontent.com/nekomeowww/ollama-operator/v0.10.1/dist/install.yaml

You should see output confirming the creation of resources as seen below.

Step 2: Wait for the Operator to be Ready

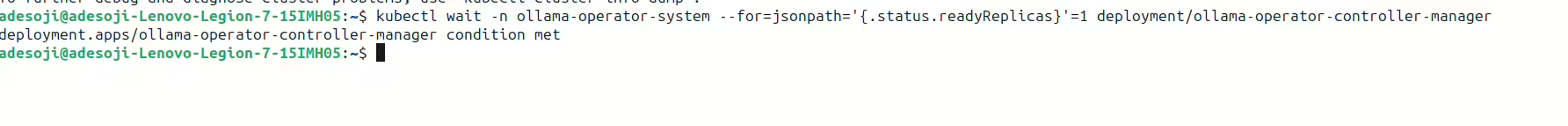

After installation, wait for the operator to be fully ready with the following command:

kubectl wait -n ollama-operator-system --for=jsonpath='{.status.readyReplicas}'=1 deployment/ollama-operator-controller-manager

This command waits until the operator’s controller manager is running. A screenshot of a successful wait might look like:

Step 3: Install the Kollama CLI

The kollama CLI makes it easier to interact with the operator. Install it using Go:

go install github.com/nekomeowww/ollama-operator/cmd/kollama@latest

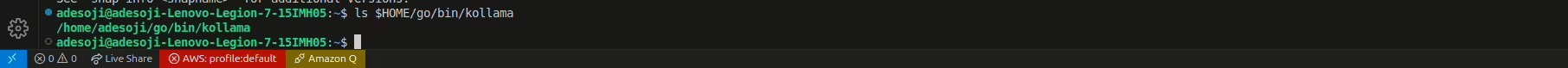

Step 3.1: Locate the Binary

By default, Go installs binaries in the $HOME/go/bin directory. Check if the kollama binary is present by running:

ls $HOME/go/bin/kollama

If the binary exists as seen below, proceed to the next step.

Step 3.2: Add the Go Binary Directory to Your PATH

You need to update your PATH environment variable to include $HOME/go/bin. You can do this by adding the following line to your shell’s configuration file (e.g., .bashrc or .zshrc):

export PATH=$PATH:$HOME/go/bin

After adding the line, reload your configuration. For example, if you are using bash, run:

source ~/.bashrc

Step 3.3: Verify the Installation

Now check the installed version:

kollama version

This should display the installed version of kollama. If the command is still not found, ensure there are no typos in your configuration file and that the installation path is correct.
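If the shell still cannot find kollama, a small diagnostic like the following can help. It is a sketch that assumes the default install location $HOME/go/bin; if you have customized GOBIN or GOPATH, go env GOBIN GOPATH prints the directories to use instead. The path_add helper is an illustrative name, not part of Go or kollama.

```shell
# path_add DIR: append DIR to PATH only if it is not already listed,
# so sourcing the file repeatedly does not grow PATH.
path_add() {
  case ":$PATH:" in
    *":$1:"*) ;;              # already present, nothing to do
    *) PATH="$PATH:$1" ;;
  esac
}

path_add "$HOME/go/bin"
export PATH

# Report where the shell now finds kollama, if anywhere.
if command -v kollama >/dev/null 2>&1; then
  echo "kollama found at: $(command -v kollama)"
else
  echo "kollama not found on PATH; check that $HOME/go/bin/kollama exists"
fi
```

Unlike a bare export line, the case guard keeps PATH free of duplicate entries when the configuration file is reloaded.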

Additional Notes

- If you are using a different shell (like zsh), update the corresponding configuration file (e.g., ~/.zshrc).
- You can also temporarily update your PATH in the current session by running the export command directly in your terminal.

With these steps, the kollama command should be recognized from any new terminal session, allowing you to continue with your deployment tasks.

Step 4: Deploy the DeepSeek-R1 Model

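You can deploy a model using the kollama CLI. This walkthrough uses the small phi model as the example; to deploy DeepSeek-R1 instead, substitute its model name from the Ollama library (for example, deepseek-r1) for phi in the commands that follow. Deploy the phi model with the following command: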

kollama deploy phi --expose --node-port 30001
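Because --expose --node-port 30001 publishes the model on port 30001 of the cluster nodes, the model can also be queried over Ollama's HTTP API once it is ready. The sketch below makes several assumptions that are not from this walkthrough: NODE_IP is a placeholder for an address at which your node is reachable, the helper names generate_payload and ask are illustrative, and the request uses Ollama's /api/generate endpoint with streaming turned off.

```shell
# Assumptions: NODE_IP is a placeholder for a reachable node address,
# and 30001 matches the --node-port value used at deploy time.
NODE_IP="${NODE_IP:-127.0.0.1}"
NODE_PORT="${NODE_PORT:-30001}"

# generate_payload MODEL PROMPT: print a JSON request body for /api/generate.
# Only backslashes and double quotes in PROMPT are escaped, which is
# enough for simple single-line prompts.
generate_payload() {
  local prompt
  prompt=$(printf '%s' "$2" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"model":"%s","prompt":"%s","stream":false}\n' "$1" "$prompt"
}

# ask MODEL PROMPT: send the request to the exposed NodePort.
ask() {
  curl -sS "http://${NODE_IP}:${NODE_PORT}/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(generate_payload "$1" "$2")"
}

# Usage (requires the deployed model to be ready):
#   NODE_IP=<node address> ask phi "Why is the sky blue?"
```

On kind or minikube the node address is often not reachable directly from the host, in which case a port-forward is the simpler route.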

Note: If you are using kind (or any environment with a StorageClass that supports only ReadWriteOnce), you might need to customize your Model Custom Resource (CR). Here is a sample YAML file (ollama-model-phi.yaml):

apiVersion: ollama.ayaka.io/v1
kind: Model
metadata:
  name: phi
spec:
  image: phi
  persistentVolume:
    accessMode: ReadWriteOnce
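The same manifest can be generated for other models with a small shell function. This is a sketch rather than part of the operator tooling: render_model is an illustrative helper name, and deepseek-r1 in the usage comment is an assumed image name that you should check against the Ollama library before using.

```shell
# render_model NAME IMAGE: print a Model manifest that requests a
# ReadWriteOnce volume, using the same fields as the sample file.
render_model() {
  cat <<EOF
apiVersion: ollama.ayaka.io/v1
kind: Model
metadata:
  name: $1
spec:
  image: $2
  persistentVolume:
    accessMode: ReadWriteOnce
EOF
}

# Usage:
#   render_model phi phi > ollama-model-phi.yaml
#   render_model deepseek-r1 deepseek-r1 > ollama-model-deepseek-r1.yaml  # assumed image name
```

Generating the file keeps the indentation consistent, which matters because YAML nesting is defined by leading spaces.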

Apply the Model CR to your cluster:

kubectl apply -f ollama-model-phi.yaml

Wait for the model to be ready:

kubectl wait --for=jsonpath='{.status.readyReplicas}'=1 deployment/ollama-model-phi

Step 5: Access and Interact with the Model

Once the model is deployed and ready, forward the ports to access it:

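kubectl port-forward svc/ollama-model-phi 8080:80

If the forward fails, check the Service's port with kubectl get svc ollama-model-phi and adjust the second number accordingly.

Now you can interact with the model. The ollama CLI connects to 127.0.0.1:11434 by default, so point it at the forwarded local port with OLLAMA_HOST:

OLLAMA_HOST=127.0.0.1:8080 ollama run phi

Step 6: Clean Up Resources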

After finishing your demo, it’s important to clean up the deployed resources. Follow these steps:

Remove the Deployed Model

Delete the model custom resource:

kubectl delete -f ollama-model-phi.yaml

Wait until the resources are removed. You can verify by checking the deployments in your cluster.

Uninstall the Ollama Operator

Remove the operator by deleting the installed YAML:

kubectl delete -f https://raw.githubusercontent.com/nekomeowww/ollama-operator/v0.10.1/dist/install.yaml

This command cleans up all operator-related resources. Note that --server-side is an option of kubectl apply, not kubectl delete, so it is omitted here.

Stop Port Forwarding

If you have an active port-forward session, stop it by pressing Ctrl+C in the terminal window where it is running.
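The two delete commands can be wrapped in one function so that cleanup is repeatable. This is a minimal sketch: cleanup_demo is an illustrative name, and it relies on kubectl's --ignore-not-found flag so that a second run does not fail on resources that are already gone.

```shell
# cleanup_demo MANIFEST OPERATOR_URL: delete the model first, then the
# operator, in the same order as the manual steps above.
# --ignore-not-found lets repeated runs succeed after resources are gone.
cleanup_demo() {
  kubectl delete --ignore-not-found -f "$1" &&
    kubectl delete --ignore-not-found -f "$2"
}

# Usage:
#   cleanup_demo ollama-model-phi.yaml \
#     https://raw.githubusercontent.com/nekomeowww/ollama-operator/v0.10.1/dist/install.yaml
```

The && stops the function before the operator is removed if deleting the model fails, so the operator stays available while the model is being torn down.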

Additional Information and Resources

For further details on the Ollama Operator, check out the GitHub repository and the release notes for v0.10.6.

The operator supports multiple models on the same cluster, and you can customize deployments using additional options like scaling replicas, setting the imagePullPolicy, and more. A full configuration example is provided below:

apiVersion: ollama.ayaka.io/v1
kind: Model
metadata:
  name: phi
spec:
  replicas: 2
  image: phi
  imagePullPolicy: IfNotPresent
  storageClassName: local-path
  persistentVolumeClaim: your-pvc
  persistentVolume:
    accessMode: ReadWriteOnce

Wrapping Up

With these steps, you have deployed a model on your Kubernetes cluster using the Ollama Operator. The walkthrough used phi as the example, and DeepSeek-R1 can be deployed the same way by substituting its model name. Enjoy exploring and scaling your large language models in a clustered environment!
