In modern application deployment, two names dominate the conversation: Docker and Kubernetes. They are often mentioned together, but they serve distinct purposes. Docker focuses on building and running individual containers, which makes it central to development, while Kubernetes focuses on deploying and orchestrating containers across a cluster. This post explores their differences, their use cases, and how they work together.

What is Docker?

Docker is a platform designed to simplify the development, deployment, and operation of applications by using containers. Containers package an application’s code, dependencies, and runtime environment into a single unit, ensuring consistency across various environments, whether it’s a developer’s laptop, a testing server, or a production environment.

Key Features of Docker:

- Lightweight: Containers share the host operating system’s kernel, making them much lighter than virtual machines.

- Portability: Build once, run anywhere—Docker containers run consistently across different platforms.

- Speed: Containers start almost instantly compared to traditional virtual machines.

- Developer-Friendly: Docker simplifies workflows, enabling developers to focus on writing code without worrying about environment inconsistencies.

Use Cases:

- Local development environments.

- Continuous Integration/Continuous Deployment (CI/CD) pipelines.

- Simplifying application packaging and distribution.

How Docker Works:

- Image Creation: Developers write a Dockerfile (by hand, or scaffolded with a tool such as docker init) that defines the application and its dependencies, then build a Docker image from it.

- Containerization: Images are used to spin up containers that isolate applications from the host system.

- Deployment: The same image runs consistently in any environment that has a container runtime. Docker Compose can define and start multi-container applications from a single file.
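The build-and-run steps above can be sketched as the two Docker CLI invocations they usually map to. This is a minimal illustration, not a full workflow: the image tag `myapp:1.0` and the port numbers are placeholder values, and the helper functions are hypothetical names that only assemble the command lines rather than execute them.

```python
import shlex


def docker_build_command(tag: str, context_dir: str = ".") -> list[str]:
    """Return the argv that builds an image from the Dockerfile in context_dir."""
    return ["docker", "build", "-t", tag, context_dir]


def docker_run_command(tag: str, host_port: int, container_port: int) -> list[str]:
    """Return the argv that starts a detached container with one published port."""
    return ["docker", "run", "--rm", "-d", "-p", f"{host_port}:{container_port}", tag]


if __name__ == "__main__":
    # "myapp:1.0" and the ports are placeholders chosen for this example.
    for argv in (
        docker_build_command("myapp:1.0"),
        docker_run_command("myapp:1.0", 8080, 80),
    ):
        print(shlex.join(argv))
```

On a machine with Docker installed, each list could be passed to `subprocess.run(argv, check=True)` to actually build the image and start the container.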


What is Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform. While Docker helps you build and run containers, Kubernetes excels at managing and scaling them in production. It automates the deployment, scaling, and operation of containerized applications across clusters of machines.

Key Features of Kubernetes:

- Orchestration: Automatically manages the deployment and scaling of containers.

- Load Balancing: Distributes traffic to ensure reliability and performance.

- Self-Healing: Restarts failed containers and reschedules workloads if a node goes down.

- Scalability: Dynamically scales applications up or down based on demand.

- Declarative Configuration: Uses YAML or JSON files to define desired system states.
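To make the declarative style concrete, here is a small sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON. The field names follow the `apps/v1` Deployment schema; the application name `web`, the image `nginx:1.27`, and the helper `deployment_manifest` are illustrative assumptions.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment describing the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # how many Pod copies should exist
            "selector": {"matchLabels": labels},  # must match the template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Placeholder values; save the output to a file to use it with kubectl.
    print(json.dumps(deployment_manifest("web", "nginx:1.27", 3, 80), indent=2))
```

The manifest only states what should exist (three replicas of one container). Saved to a file, it can be submitted with `kubectl apply -f`, and Kubernetes then works out how to reach that state.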

Use Cases:

- Managing microservices architectures.

- Handling high-availability applications.

- Automating DevOps workflows.

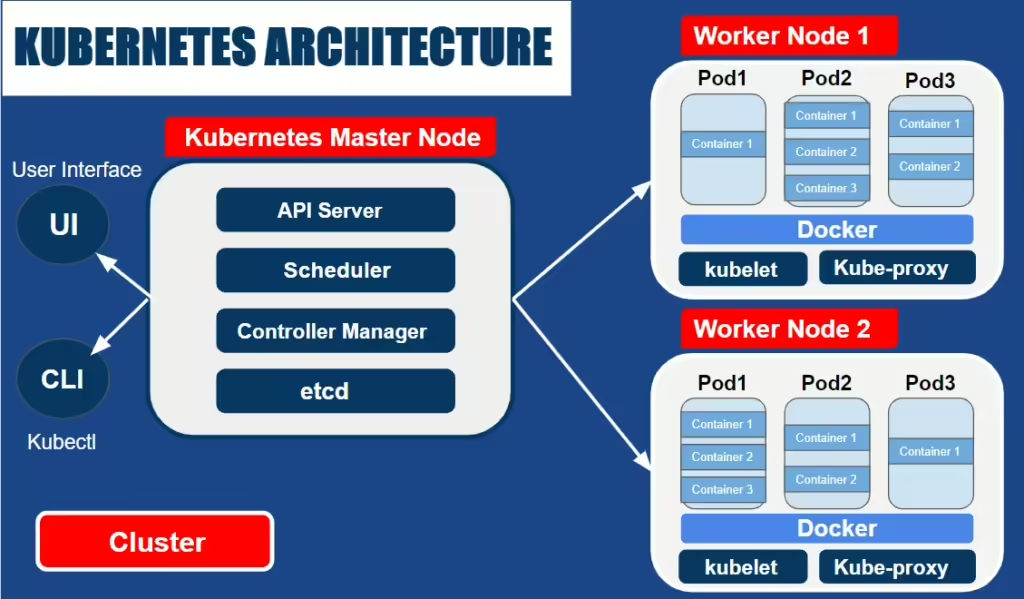

Kubernetes Architecture

A Kubernetes cluster is a set of machines called nodes, split into two roles: control plane nodes (historically called master nodes) and worker nodes. The control plane manages the worker nodes, and together they form the cluster. A cluster typically has at least one control plane node and one worker node, and production clusters usually run several of each. An organization can also run multiple clusters.

Kubernetes Master Node / Control Plane

The Kubernetes Master Node (Control Plane) is the controlling unit of the cluster. It manages the cluster, monitors its Nodes and Pods, and, when a node fails, reschedules that node’s workload onto another working node.

The various components of the Kubernetes Master Node:

API Server

The API Server is the front end of the control plane: all communication with the cluster goes through its REST API (JSON over HTTP). Users, management tools, and command-line interfaces talk to the API Server to interact with the Kubernetes cluster. kubectl is the CLI tool used to interact with the Kubernetes API.
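Because the API is plain REST, every resource lives at a predictable URL path. The sketch below builds the path for a namespaced resource: resources in the core group sit under `/api/v1`, while named groups such as `apps` sit under `/apis/<group>/<version>`. The function name `resource_path` is a hypothetical helper for illustration.

```python
def resource_path(resource: str, namespace: str, group: str = "", version: str = "v1") -> str:
    """Return the API Server path for a collection of namespaced resources."""
    # The core group (Pods, Services, ...) has no group name and lives under /api.
    # Named groups (apps, batch, ...) live under /apis/<group>.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    return f"{prefix}/namespaces/{namespace}/{resource}"


if __name__ == "__main__":
    print(resource_path("pods", "default"))
    print(resource_path("deployments", "default", group="apps"))
```

A command such as `kubectl get pods -n default` ultimately issues a GET request against the first of these paths.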

Scheduler

The Scheduler assigns newly created Pods to nodes. It bases each decision on the Pod’s resource requirements and on the cluster state stored in etcd, which it reads through the API Server.
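Conceptually, scheduling a Pod happens in two phases: filter out the nodes that cannot run it, then score the remaining nodes and pick the best. The following toy sketch illustrates that idea only. The `Node` class, the `pick_node` function, and the "most free CPU wins" scoring rule are assumptions made for this example, not the real scheduler’s plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def pick_node(nodes: list[Node], cpu_request: int, memory_request: int) -> Optional[str]:
    """Return the name of the chosen node, or None if no node fits."""
    # Filter phase: keep only nodes with enough free CPU and memory.
    feasible = [
        n
        for n in nodes
        if n.free_cpu_millicores >= cpu_request and n.free_memory_mib >= memory_request
    ]
    if not feasible:
        return None  # in a real cluster the Pod would stay Pending
    # Score phase (toy rule): prefer the node with the most free CPU.
    return max(feasible, key=lambda n: n.free_cpu_millicores).name
```

For example, with nodes offering 500m, 2000m, and 4000m of free CPU, a Pod requesting 1000m of CPU is never placed on the first node, regardless of how the other two score.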

Controller Manager

The Controller Manager is a control plane component that runs the controllers. Each controller runs a continuous watch loop that drives the actual cluster state towards the desired cluster state. It runs controllers such as the Node, Replication, Endpoints, and Service Account/token controllers. On cloud platforms, cloud-specific controllers such as the Node, Route, and Service controllers run in a separate cloud-controller-manager.
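The watch loop is easiest to understand as a reconcile step: compare the desired state with the actual state and emit whatever actions close the gap. Here is a deliberately simplified sketch for a replica count. The function `reconcile` and the action strings are invented for illustration and do not correspond to a real controller API.

```python
def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """Return the actions that move the actual state towards the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few Pods: create the missing ones.
        return ["create pod"] * diff
    if diff < 0:
        # Too many Pods: delete the surplus (which ones go first is arbitrary here).
        return [f"delete {name}" for name in running_pods[:-diff]]
    return []  # actual state already matches the desired state
```

A real controller runs this comparison every time it observes a change, so a crashed Pod shows up as a gap on the next pass and is replaced without any manual step.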

etcd

etcd is an open-source, persistent, lightweight, distributed key-value store, originally developed by CoreOS, that holds the cluster state. Only the API Server communicates with it directly. etcd can run on the Master Node or be hosted externally.

Worker Node

A Cluster can have multiple Worker Nodes as well as multiple Master Nodes. A Worker Node runs one or more Pods, and a Pod contains one or more Containers. The node components (kubelet, kube-proxy, and a container runtime) run on every Worker Node, maintaining the running Pods and providing the Kubernetes runtime environment.

The various components of the Kubernetes Worker Node:

kubelet

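kubelet is an agent running on each Worker Node. It watches the Pods assigned to its node, makes sure the containers described in their PodSpecs are running and healthy, and restarts failed containers according to the Pod’s restart policy. If the node itself fails, the control plane’s controllers create replacement Pods and the Scheduler places them on other healthy nodes.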
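One concrete input to that decision is the Pod’s `restartPolicy` field, which accepts `Always`, `OnFailure`, or `Never`. The sketch below captures only the core rule for an exited container. The function name is hypothetical, and details such as the back-off delay between restarts are left out.

```python
def should_restart(restart_policy: str, exit_code: int) -> bool:
    """Decide whether an exited container should be restarted."""
    if restart_policy == "Always":
        return True  # restart regardless of how the container exited
    if restart_policy == "OnFailure":
        return exit_code != 0  # restart only after a non-zero exit
    if restart_policy == "Never":
        return False
    raise ValueError(f"unknown restart policy: {restart_policy!r}")
```

Long-running services normally use `Always`, while batch-style workloads tend to use `OnFailure` or `Never` so that a successful run is not started again.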

kube-proxy

kube-proxy is a network proxy that runs as a daemon on each node. It watches the API Server for the addition and removal of Services and endpoints, and maintains the network rules that forward traffic sent to a Service to one of its backing Pods. This gives basic load balancing across those Pods.
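The effect on traffic can be pictured as one stable Service address fanning out over a changing set of Pod endpoints. The toy sketch below rotates through a fixed endpoint list. Round-robin is used purely for illustration (the actual selection strategy depends on the proxy mode), and the `ServiceEndpoints` class and the IP addresses are made up for this example.

```python
import itertools


class ServiceEndpoints:
    """Hand out backend addresses for a Service in round-robin order."""

    def __init__(self, endpoints: list[str]) -> None:
        if not endpoints:
            raise ValueError("a Service needs at least one endpoint to route to")
        self._cycle = itertools.cycle(list(endpoints))

    def next_backend(self) -> str:
        """Return the endpoint that should receive the next connection."""
        return next(self._cycle)


if __name__ == "__main__":
    svc = ServiceEndpoints(["10.0.0.1:8080", "10.0.0.2:8080"])
    for _ in range(3):
        print(svc.next_backend())
```

Clients only ever see the Service address, so Pods can come and go behind it without clients having to reconnect to new addresses themselves.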

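Container runtime

The kubelet does not run containers itself; it delegates that work to a container runtime through the Container Runtime Interface (CRI). Kubernetes supports several container runtimes, such as containerd and CRI-O. Since Kubernetes 1.24 removed the built-in dockershim, Docker Engine can serve as a runtime only through the cri-dockerd adapter. Images built with Docker still run unchanged on any of these runtimes.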

Add-ons

Add-ons extend the functionality of Kubernetes. Some of the important add-ons are:

- DNS: Cluster DNS is a DNS server that serves DNS records for Kubernetes Services and Pods.

- Dashboard: A general-purpose web-based user interface for cluster management.

- Monitoring: Records cluster-level container metrics in a central database so that workload performance can be monitored continuously.

- Logging: Saves cluster-level container logs in a central database.

Don’t Miss: Check out Kubectl CheatSheet — https://collabnix.com/kubectl-cheatsheet/

How Kubernetes Works:

- Deployment: Developers define the desired state of applications in YAML or JSON files.

- Orchestration: Kubernetes schedules and deploys containers to nodes within the cluster.

- Monitoring and Scaling: Kubernetes continuously monitors the cluster and adjusts resources as needed, scaling applications up or down.
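For the scaling step, the Horizontal Pod Autoscaler documentation gives the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule and clamps the result to a minimum and maximum. The function and parameter names are chosen for this example, and the real autoscaler adds further safeguards (such as a tolerance band and stabilization windows) that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
) -> int:
    """Apply the basic autoscaling ratio and clamp it to the allowed range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas averaging 90% CPU against a 60% target, the rule asks for ceil(4 × 90 / 60) = 6 replicas. If load then drops well below the target, the same formula scales the workload back down.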

Docker vs Kubernetes: Key Differences

| Feature | Docker | Kubernetes |
|---|---|---|
| Primary Function | Containerization platform. | Container orchestration system. |
| Scope | Focuses on development and individual containers. | Focuses on deployment and managing clusters of containers. |
| Setup | Easy to set up and use. | Requires more complex setup. |
| Containerization | Builds images and creates and manages containers. | Runs and manages containers through a container runtime. |
| Orchestration | Not part of the core container workflow; available through Docker Swarm mode. | Manages and automates container deployment and scaling across clusters of hosts. |
| Scaling | Manual horizontal scaling of containers. | Automated and dynamic horizontal scaling of containers. |
| Networking | Simplified networking for containers. | Advanced networking capabilities. |
| Self-Healing | Limited to restart policies for standalone containers; Swarm mode reschedules failed service tasks. | Automatically restarts or replaces failed containers. |
| Load Balancing | Available through Docker Swarm mode. | Provides internal load balancing. |
| Storage Orchestration | Relies on third-party tools. | Provides a framework for storage orchestration across clusters of hosts. |
| Dependency | Docker is standalone. | Needs a container runtime such as containerd or CRI-O. |

Using Kubernetes with Docker

When using Kubernetes with Docker, Docker builds the container images and Kubernetes acts as the orchestrator for the containers created from them. This means that Kubernetes can manage and automate the deployment, scaling, and operation of containers built with Docker.

Kubernetes can start containers from those images, schedule them onto the appropriate nodes in a cluster, and automatically scale the number of containers up or down based on demand. Kubernetes can also manage their storage and networking, making it easier to create and deploy complex containerized applications.

By using Kubernetes with Docker, you can take advantage of the benefits of both tools. Docker provides an easy way to build and package containerized applications, while Kubernetes provides a powerful platform for managing and scaling those applications. Together, they can provide a complete solution for managing containerized applications at scale.
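A typical hand-off between the two tools looks like this: push the image Docker built to a registry, then ask Kubernetes to apply a manifest that references it. The sketch below only assembles those commands. The registry host `registry.example.com`, the file name `deployment.json`, and the helper function are placeholders for illustration.

```python
def handoff_commands(image: str, manifest_path: str, deployment_name: str) -> list[list[str]]:
    """Return the CLI steps that move a built image into a running Deployment."""
    return [
        # Upload the locally built image so cluster nodes can pull it.
        ["docker", "push", image],
        # Submit the desired state; the manifest is expected to reference `image`.
        ["kubectl", "apply", "-f", manifest_path],
        # Wait until the rollout has finished.
        ["kubectl", "rollout", "status", f"deployment/{deployment_name}"],
    ]


if __name__ == "__main__":
    steps = handoff_commands("registry.example.com/myapp:1.0", "deployment.json", "myapp")
    for argv in steps:
        print(" ".join(argv))
```

The split of responsibilities shows up directly in the commands: Docker is only involved up to the push, and everything after that is Kubernetes working from the declared state.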