Quantizing DeepSeek-V3 for Smaller GPUs

Large language models (LLMs) like DeepSeek-V3 offer incredible capabilities, but their size often makes them challenging to run on consumer hardware. One technique to address this is quantization, which reduces the precision of the model’s weights, allowing it to fit into smaller GPUs. This blog post demonstrates how to load and use DeepSeek-V3 with 4-bit quantization using the `bitsandbytes` library in the `transformers` library.

Requirements

Before you begin, ensure you have the following installed:

- Python 3.11 or higher

- PyTorch

- Transformers library

- bitsandbytes library

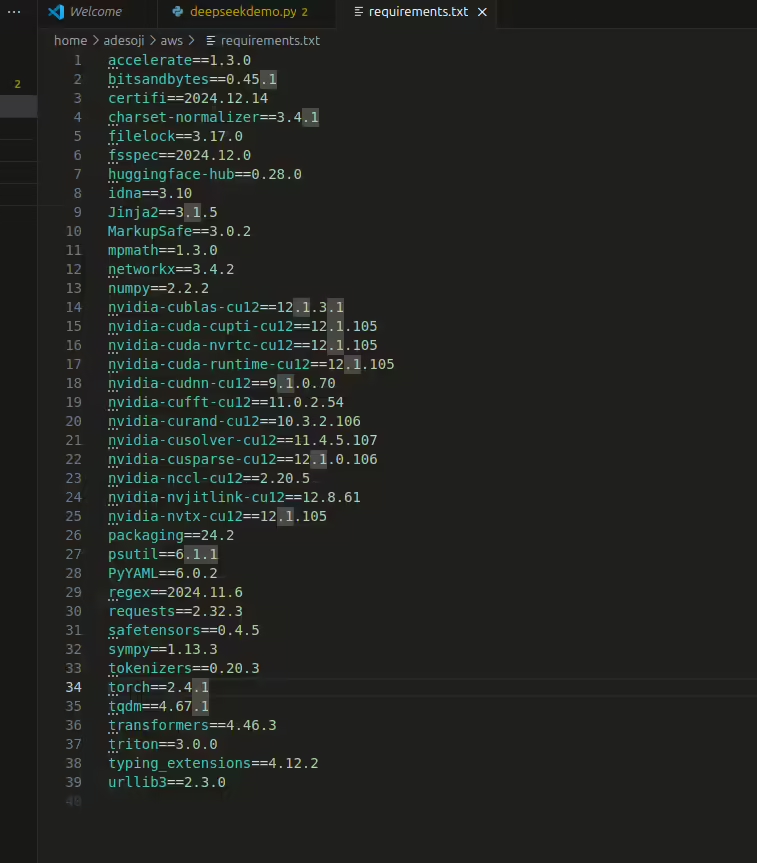

You can install the necessary libraries using pip but use the requirements.txx in the image below:

pip install torch transformers bitsandbytes

Code Implementation

Here’s the Python code to load DeepSeek-V3 with 4-bit quantization:

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline, BitsAndBytesConfig

def main():

model_name = "deepseek-ai/DeepSeek-V3"

tokenizer = AutoTokenizer.from_pretrained(

model_name,

trust_remote_code=True

)

quantization_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_quant_type="nf4",

#bnb_4bit_use_double_quant=True, # Optional

)

model = AutoModelForCausalLM.from_pretrained(

model_name,

trust_remote_code=True,

quantization_config=quantization_config,

device_map="auto",

torch_dtype=torch.float16, # or torch.bfloat16 if supported

)

generate_pipe = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

device_map="auto",

torch_dtype=torch.float16

)

prompt = "Write a short story about a mysterious ancient artifact that changes history."

outputs = generate_pipe(

prompt,

max_new_tokens=256,

do_sample=True,

top_k=50,

top_p=0.9,

temperature=0.7

)

print("\nGenerated text:\n")

print(outputs[0]["generated_text"])

if __name__ == "__main__":

main()

Explanation

The code first imports the necessary libraries. The key change is the use of `BitsAndBytesConfig` to specify the 4-bit quantization. We set `load_in_4bit=True`, `bnb_4bit_compute_dtype=torch.float16` for faster computations, and `bnb_4bit_quant_type=”nf4″` which is the recommended quantization type. This configuration is then passed to the `from_pretrained` method via the `quantization_config` argument. The rest of the code sets up the text generation pipeline and generates text based on a provided prompt.

Running the Code

Save the code as a Python file (e.g., deepseek_quantized.py) and run it from your terminal:

python deepseek_quantized.py

The generated text will be printed to the console.

Important Considerations

Even with 4-bit quantization, DeepSeek-V3 is a large model. A GPU with sufficient VRAM (at least 24GB is recommended) is crucial. If you have less memory, consider using a smaller model, further quantization techniques, or cloud-based GPU instances.

This method provides a starting point for working with quantized LLMs. You can adjust the generation parameters (e.g., `max_new_tokens`, `temperature`) to fine-tune the output as needed.