In the rapidly evolving landscape of AI development, Ollama has emerged as a game-changing tool for running Large Language Models locally. With over 43,000+ GitHub stars and 2000+ forks, Ollama has become the go-to solution for developers seeking to integrate LLMs into their local development workflow.

The Rise of Ollama: By the Numbers

– 43k+ GitHub Stars

– 2000+ Forks

– 100+ Contributors

– 500k+ Monthly Docker Pulls

– Support for 40+ Popular LLM Models

What Makes Ollama Different?

Ollama isn’t just another model runner – it’s a complete ecosystem for local LLM development. Built in Go and optimized for performance, Ollama provides:

– Native GPU acceleration support

– Memory-mapped model loading

– Efficient quantization

– REST API for seamless integration

– Custom model creation capabilities

Architecture Deep Dive

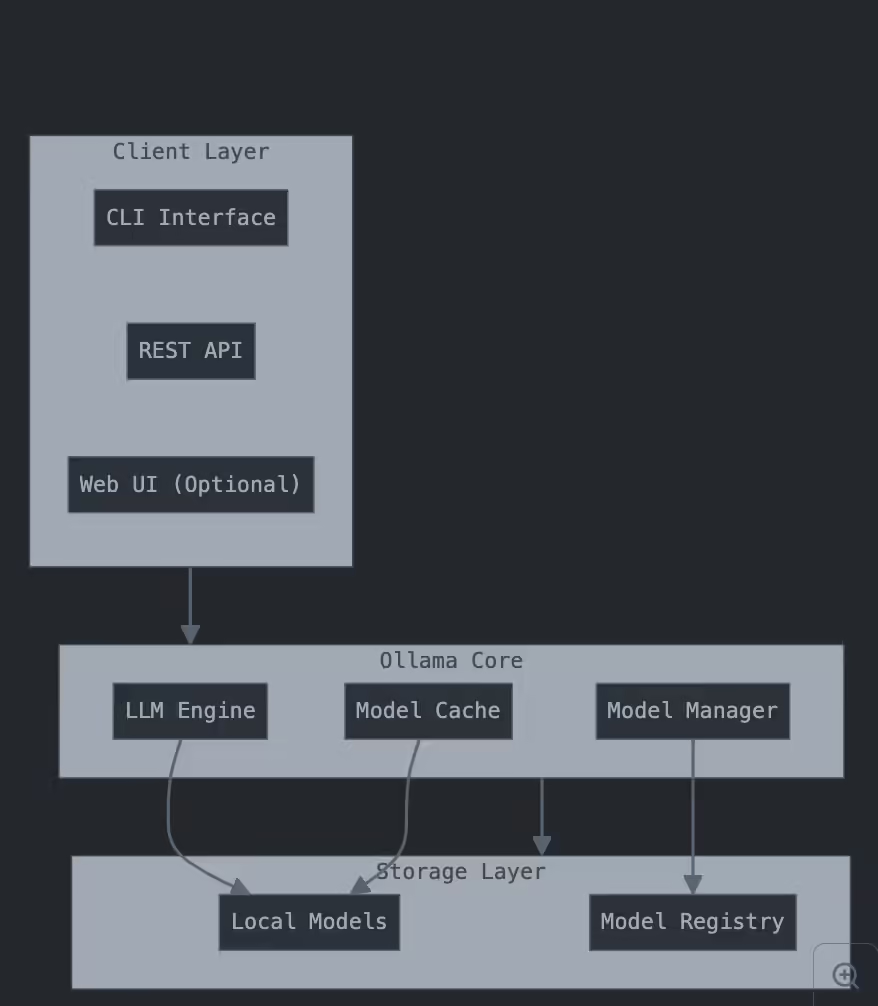

Ollama follows a modular architecture with three main components:

Popular Models and Performance

Based on community metrics, here are the most popular models with their performance characteristics:

| Model | Size | RAM Required | Typical Speed |

|----------------|-------|--------------|--------------|

| mistral | 4.1GB | 8GB | 32 tok/s |

| llama2 | 3.8GB | 8GB | 28 tok/s |

| codellama | 4.1GB | 8GB | 35 tok/s |

| neural-chat | 4.1GB | 8GB | 30 tok/s |

| phi-2 | 2.7GB | 6GB | 25 tok/s |Installation and Setup

1. Installing Ollama

For Linux/macOS:

curl https://ollama.ai/install.sh | shFor Windows (requires WSL2):

# Inside WSL2

curl https://ollama.ai/install.sh | sh2. Installing Ollama Web UI

Using Docker (recommended):

docker pull ghcr.io/ollama-webui/ollama-webui:main

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway \

-v ollama-webui:/app/backend/data --name ollama-webui \

--restart always ghcr.io/ollama-webui/ollama-webui:mainAPI Integration Examples

Basic Generation

curl -X POST http://localhost:11434/api/generate \

-d '{

"model": "llama2",

"prompt": "Write a function to calculate fibonacci numbers",

"stream": false

}'Python Integration

import requests

def generate_response(prompt, model="llama2"):

response = requests.post(

"http://localhost:11434/api/generate",

json={

"model": model,

"prompt": prompt,

"stream": False

}

)

return response.json()["response"]

# Example usage

result = generate_response("Explain quantum computing in simple terms")Custom Model Creation

# Create a Modelfile

FROM llama2

PARAMETER temperature 0.7

PARAMETER top_p 0.9

SYSTEM """You are a specialized coding assistant.

Always provide code examples in Python with detailed comments."""

# Build the model

ollama create coder -f Modelfile

# Use the model

ollama run coder "Write a binary search implementation"Advanced Usage: REST API Endpoints

Ollama provides a comprehensive REST API:

POST /api/generate # Generate text from a prompt

POST /api/chat # Interactive chat session

POST /api/embeddings # Generate embeddings

GET /api/tags # List available models

POST /api/pull # Pull a model from registry

POST /api/push # Push a model to registryPerformance Optimization Tips

1. GPU Acceleration:

– NVIDIA GPUs: Install CUDA 11.7 or later

– AMD GPUs: Enable ROCm support

# Check GPU availability

ollama run llama2 "Hello" --verbose

# Enable specific GPU

CUDA_VISIBLE_DEVICES=0 ollama run llama2

2. Memory Management:

– Use mmap for larger models

– Enable swap space optimization

# Set memory map configuration

echo "vm.max_map_count=1048576" | sudo tee -a /etc/sysctl.conf

sudo sysctl -pUseful Resources

– Official GitHub Repository

– Model Library

– Ollama WebUI Repository

– Official Documentation

Future Roadmap

The Ollama team is actively working on:

– Improved GPU optimization

– Extended model compatibility

– Enhanced API features

– Better memory management

– Native Windows support

Conclusion

Ollama represents a significant advancement in making LLMs accessible for local development. Its combination of ease of use, performance, and flexibility makes it an invaluable tool for developers working with AI models.