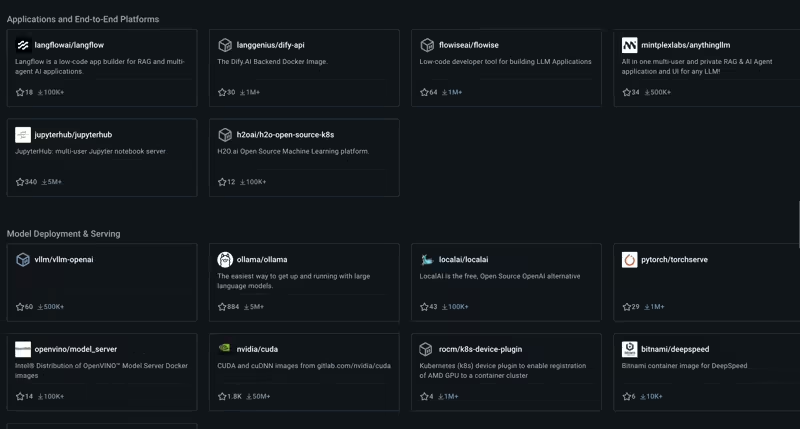

Imagine a place where developers find trusted, pre-packaged AI tools, and publishers gain the visibility they deserve. That’s the Docker AI Catalog for you!

Think of it as a marketplace, but curated and supercharged. Developers get quick access to reliable tools, while publishers can:

- Differentiate themselves in a crowded space.

- Track adoption of their tools.

- Engage directly with a growing AI developer community.

Now that you know the “why,” let’s explore the “how.”

What Makes the AI Catalog Special?

It’s more than just a collection of AI tools. It’s a system designed to make AI development faster, smarter, and less error-prone. For developers, it’s about access. For publishers, it’s about reach. For both, it’s about collaboration.

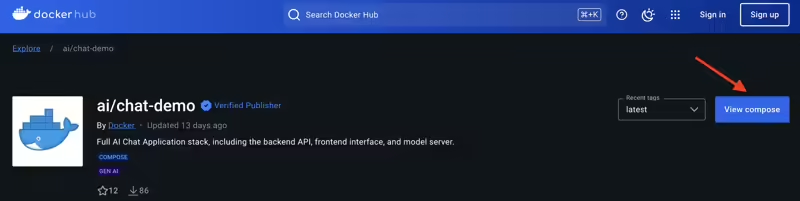

Let’s put this into action with an exciting tool available in the AI Catalog—the AI Chat Demo.

AI Chat Demo: Your Gateway to Real-Time AI

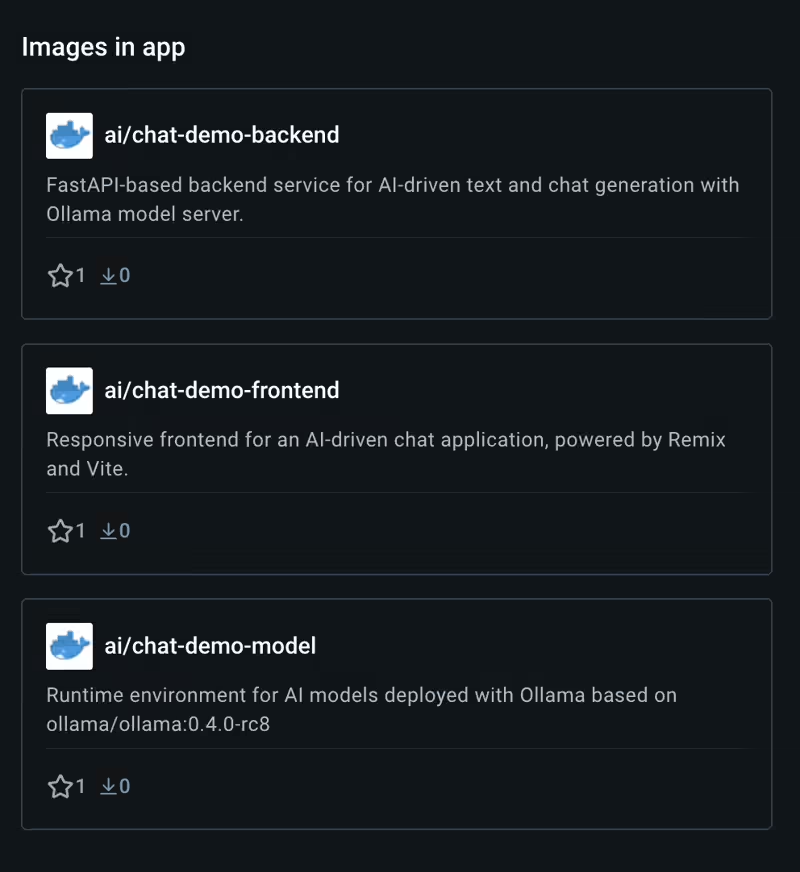

AI Chat Demo is a full AI Chat Application stack, including the backend API, frontend interface, and model server. This isn’t just any demo. It’s a fully containerized AI Chat stack with three key components:

- Backend API: Handles the brains of the operation.

- Frontend Interface: The user-facing layer.

- Model Server: Powers the AI magic.

Features

- Full Stack Setup: Backend, frontend, and model services included.

- Easy Model Configuration: Change models with a single environment variable.

- GPU and CPU Support: Easily switch between CPU or GPU mode. Live AI Chat: Real-time AI response integration.

By the end of this guide, you’ll have this stack running on your machine. But first, let’s crack open the Compose file that makes this magic happen.

Breaking Down the Compose File

The Compose file is like the blueprint for your AI stack. It defines how each component (or service) runs, interacts, and scales. Let’s go line by line.

This Compose setup lets you easily launch the full AI Chat Application stack, including the backend API, frontend interface, and model server. Ideal for real-time AI interactions, it runs fully containerized with Docker Compose.

Getting Started

Pre-requisite

- Docker Desktop

- 8 GB of available memory within the Docker VM and performs best on systems with at least 16 GB of available memory.

- Systems with higher memory, like 32 GB or more, will experience smoother performance, especially when handling intensive AI tasks.

- To enable GPU support, ensure that NVIDIA driver is installed.

Click “View Compose” on Docker Hub to view the Compose file

Let’s dig into each of the Compose services:

1. The Backend Service

backend:

image: ai/chat-demo-backend:latest

build: ./backend

ports:

- "8000:8000"

environment:

- MODEL_HOST=http://ollama:11434

depends_on:

ollama:

condition: service_healthy

Explanation:

- image: This points to the prebuilt backend image hosted in the Docker registry. If you need to customize, you can modify the code in ./backend and rebuild it.

- ports: Maps the container’s internal port (8000) to your machine’s port (8000). This is how the backend API becomes accessible. environment:

- MODEL_HOST: This tells the backend where to find the model server.

- depends_on: Ensures the ollama service (model server) starts first and passes its health check before the backend starts.

2. The Frontend Service

frontend:

image: ai/chat-demo-frontend:latest

build: ./frontend

ports:

- "3000:3000"

environment:

- PORT=3000

- HOST=0.0.0.0

command: npm run start

depends_on:

- backend

Explanation:

- image: The frontend image, serving the user interface. build: If you want to tweak the frontend, you can modify the code in ./frontend and rebuild it.

- ports: Maps port 3000 for the frontend. Open http://localhost:3000 to access the app.

- command: Specifies the command to run when the container starts. Here, it runs the React app.

- depends_on: Ensures the backend is running before starting the frontend. This ensures smooth API communication.

3. The Model Server (Ollama)

ollama:

image: ai/chat-demo-model:latest

build: ./ollama

ports:

- "11434:11434"

volumes:

- ollama_data:/root/.ollama

environment:

- MODEL=${MODEL:-mistral:latest}

healthcheck:

test: ["CMD-SHELL", "curl -s http://localhost:11434/api/tags | jq -e \".models[] | select(.name == \\\"${MODEL:-mistral:latest}\\\")\" > /dev/null"]

interval: 10s

timeout: 5s

retries: 50

start_period: 600s

deploy:

resources:

limits:

memory: 8G

- image: The AI model server image, which runs the inference models.

- volumes: Maps a persistent volume (ollama_data) to store model data. This ensures your model configurations aren’t lost when the container restarts. environment: Sets the default model (mistral:latest) but allows overrides with the MODEL variable.

- healthcheck: Runs a shell command to verify if the model server is ready. It retries up to 50 times, waiting 10 seconds between checks.

- deploy.resources: Allocates up to 8GB of memory to the model server.

Docker Compose for CPU

Here’s how the final Compose file look like:

services:

backend:

image: ai/chat-demo-backend:latest

build: ./backend

ports:

- "8000:8000"

environment:

- MODEL_HOST=http://ollama:11434

depends_on:

ollama:

condition: service_healthy

frontend:

image: ai/chat-demo-frontend:latest

build: ./frontend

ports:

- "3000:3000"

environment:

- PORT=3000

- HOST=0.0.0.0

command: npm run start

depends_on:

- backend

ollama:

image: ai/chat-demo-model:latest

build: ./ollama

ports:

- "11434:11434"

volumes:

- ollama_data:/root/.ollama

environment:

- MODEL=${MODEL:-mistral:latest} # Default to mistral:latest if MODEL is not set

healthcheck:

test: ["CMD-SHELL", "curl -s http://localhost:11434/api/tags | jq -e \".models[] | select(.name == \\\"${MODEL:-mistral:latest}\\\")\" > /dev/null"]

interval: 10s

timeout: 5s

retries: 50

start_period: 600s

deploy:

resources:

limits:

memory: 8G

volumes:

ollama_data:

name: ollama_data

GPU-Optimized Configuration

Pre-requisite:

Ensure the nvidia driver is installed. Copy the compose file from the gpu-latest tag with the View Compose button and save it as compose.yaml.

If you have an NVIDIA GPU, you can add this snippet under the ollama service:

services:

backend:

image: ai/chat-demo-backend:latest

build: ./backend

ports:

- "8000:8000"

environment:

- MODEL_HOST=http://ollama:11434

depends_on:

ollama:

condition: service_healthy

frontend:

image: ai/chat-demo-frontend:latest

build: ./frontend

ports:

- "3000:3000"

environment:

- PORT=3000

- HOST=0.0.0.0

command: npm run start

depends_on:

- backend

ollama:

image: ai/chat-demo-model:latest

build: ./ollama

ports:

- "11434:11434"

volumes:

- ollama_data:/root/.ollama

environment:

- MODEL=${MODEL:-mistral:latest} # Default to mistral:latest if MODEL is not set

healthcheck:

test: ["CMD-SHELL", "curl -s http://localhost:11434/api/tags | jq -e \".models[] | select(.name == \\\"${MODEL:-mistral:latest}\\\")\" > /dev/null"]

interval: 10s

timeout: 5s

retries: 50

start_period: 600s

deploy:

resources:

limits:

memory: 8G

reservations:

devices:

- driver: nvidia

count: 1

capabilities: [gpu]

volumes:

ollama_data:

name: ollama_data

This ensures the model server utilizes your GPU, significantly improving performance for large-scale AI tasks.

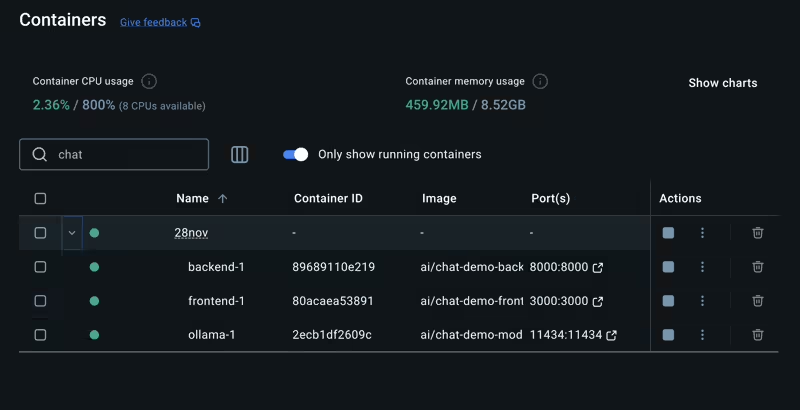

Launching the Stack

Here’s the best part: launching this entire stack is as simple as running:

docker compose up -d

This command:

- Builds and starts all services.

- Ensures dependencies are respected (e.g., backend waits for the model server).

Run with a Custom Model

Specify a different model by setting the MODEL variable:

MODEL=llama3.2:latest docker compose up

Note: Always include the model tag, even when using the latest.

Interacting with the AI Chat Demo

- Open your browser and navigate to http://localhost:3000.

- Use the intuitive chat interface to interact with the AI model.

This setup supports real-time inference, making it perfect for chatbots, virtual assistants, and more.

Why This AI Stack Stands Out?

- Seamless Orchestration: Docker Compose makes deploying complex AI stacks effortless.

- Portability: Run this stack anywhere Docker is available—your laptop, cloud, or edge device.

- Scalability: Add more resources or services without disrupting your workflow.

Conclusion

The Docker AI Catalog, paired with tools like Compose, democratizes AI development. With prebuilt stacks like the AI Chat Demo, you can go from zero to a fully functional AI application in minutes.

So, what are you waiting for? Dive in, experiment, and unlock the future of AI with Docker. And if you hit any bumps along the way, let me know—I’m here to help! 🚀