Scaling applications in Kubernetes is essential for maintaining optimal performance, ensuring high availability, and managing resource utilization effectively. Whether you’re handling fluctuating traffic or optimizing costs, understanding how to scale your Kubernetes deployments is crucial. In this blog, we’ll delve into the intricacies of scaling in Kubernetes, explore manual and automated scaling techniques using kubectl and KEDA, and highlight how StormForge can elevate your scaling strategy with advanced optimization features.

Understanding Kubernetes Scaling

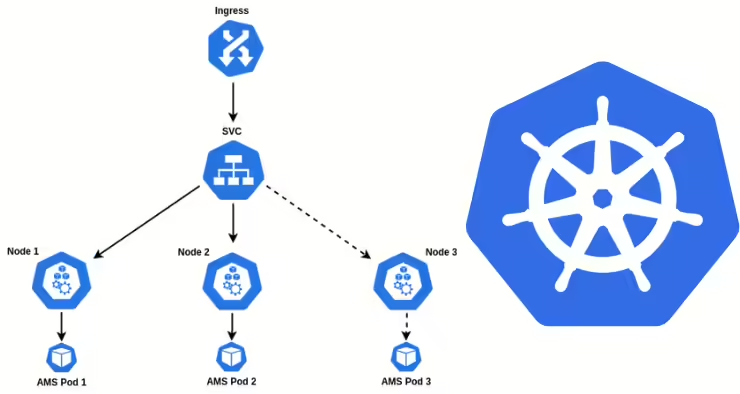

Scaling in Kubernetes involves adjusting the number of pod replicas or modifying the resource allocations of pods to align with your application’s demand. Kubernetes supports two primary types of scaling:- Horizontal Scaling: Increasing or decreasing the number of pod replicas to handle varying loads.

- Vertical Scaling: Adjusting the CPU, memory, or other resource requests of existing pods to better fit their workloads.

Manual Horizontal Scaling with kubectl

The kubectl command-line tool provides a straightforward way to manually scale your Kubernetes resources. Using the scale subcommand, you can adjust the number of replicas for a deployment or StatefulSet. Here’s the basic syntax:

kubectl scale --replicas=<number_of_replicas> <resource_type>/<resource_name> [options]

kubectl scale --replicas=3 deployment/nginx-deployment

kubectl scale --replicas=2 deployment -l app=nginx

kubectl scale statefulset,deployment --all --replicas=2

kubectl scale --current-replicas=2 --replicas=3 deployment/mysql

- Using the –current-replicas option ensures that the scaling action only occurs if the current number of replicas matches the specified value, preventing race conditions.

Scaling to Zero: When and How

There are scenarios where you might want to scale a deployment down to zero replicas, effectively stopping all running pods. This can be useful for:- Cost Savings: Eliminating resource usage when the application isn’t needed, especially in non-production environments.

- Maintenance: Simplifying updates or performing blue-green deployments without affecting live traffic.

- Testing: Ensuring a clean state by scaling down applications during test runs.

kubectl scale --replicas=0 deployment/my-app

- Application Unavailability: No pods are running, making the application inaccessible.

- Service Disruption: Active sessions and connections are terminated.

- Impact on Stateful Applications: Ensure that state is preserved externally to avoid data loss.

The Limitation of HPA with Zero Replicas

The Horizontal Pod Autoscaler (HPA) in Kubernetes automatically adjusts the number of replicas based on metrics like CPU utilization. However, HPA struggles when scaling a deployment to zero because no pods are available to provide the necessary metrics. This means HPA cannot scale up from zero on its own, requiring manual intervention to restart the deployment.Introducing KEDA: Bridging the Scaling Gap

Kubernetes Event-Driven Autoscaling (KEDA) enhances the capabilities of HPA by allowing scaling based on a wide range of external metrics and event sources. Unlike HPA, KEDA can scale deployments down to zero and back up based on triggers such as message queue depth, HTTP requests, or custom metrics.

Key Features of KEDA:- Event-Driven Scaling: React to external events like queue lengths or database queries.

- Scale to Zero: Automatically reduce replicas to zero when there are no events to process.

- Flexibility: Support for various metrics and integration with services like Azure Monitor, Prometheus, AWS CloudWatch, and Kafka.

Now, Let’s walk through an example of using KEDA to scale a RabbitMQ consumer deployment based on queue length.

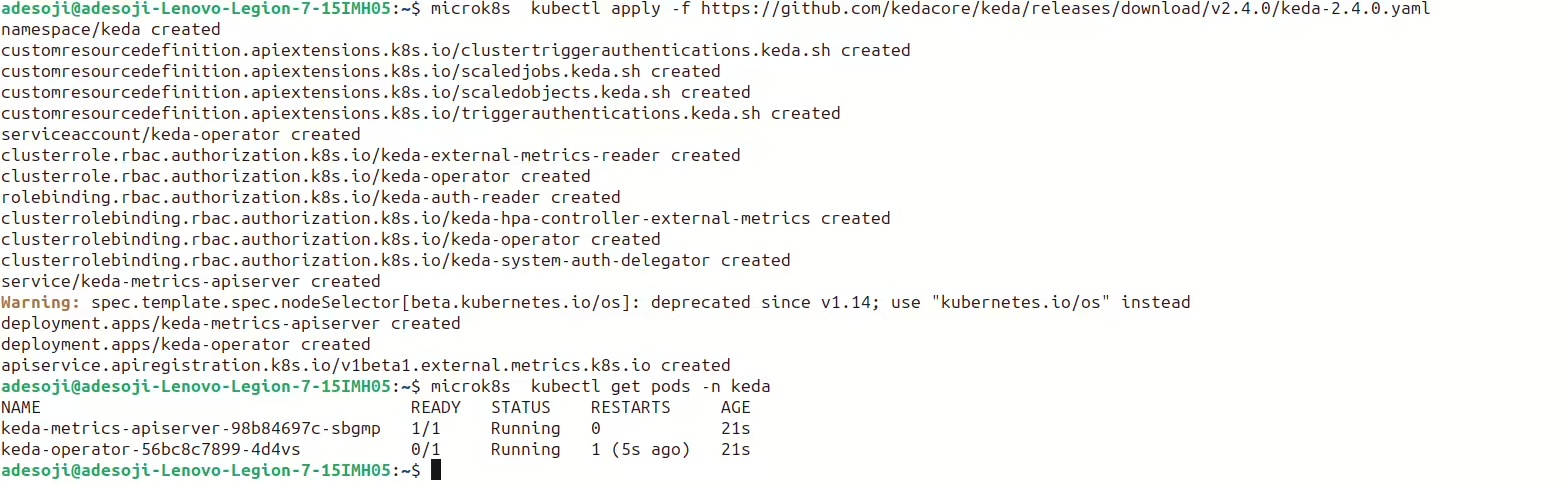

Prerequisites: Step 1: Install KEDA

helm repo add kedacore https://kedacore.github.io/charts

helm repo update

helm install keda kedacore/keda --namespace keda --create-namespace

kubectl apply -f https://github.com/kedacore/keda/releases/download/v2.4.0/keda-2.4.0.yaml

Now we see KEDA pods running and ready within a few minutes

Step 2: Deploy RabbitMQ

helm repo add bitnami https://charts.bitnami.com/bitnami

helm install rabbitmq bitnami/rabbitmq

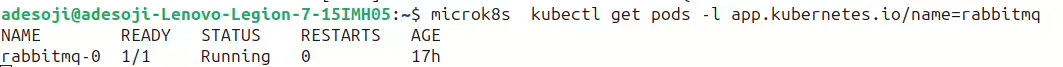

kubectl get pods -l app.kubernetes.io/name=rabbitmq

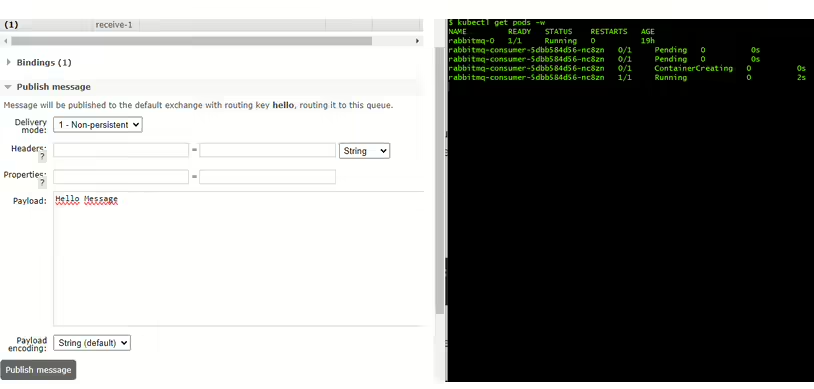

Now the RabbitMQ Deployment Pods is Up and Running 😊

Most times, it takes some minutes before the pod is running and ready.

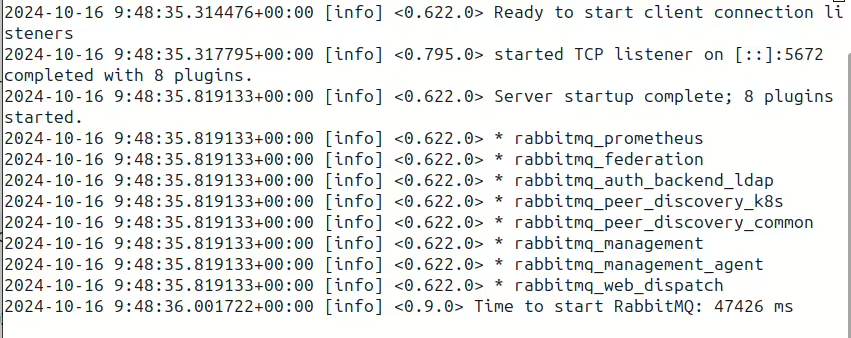

Sample of RabbitMQ pod logs after successful installation:

apiVersion: apps/v1

kind: Deployment

metadata:

name: rabbitmq-consumer

namespace: default

labels:

app: rabbitmq-consumer

spec:

selector:

matchLabels:

app: rabbitmq-consumer

template:

metadata:

labels:

app: rabbitmq-consumer

spec:

containers:

- name: rabbitmq-consumer

image: ghcr.io/kedacore/rabbitmq-client:v1.0

env:

- name: RABBITMQ_PASSWORD

valueFrom:

secretKeyRef:

name: rabbitmq

key: rabbitmq-password

imagePullPolicy: Always

command:

- receive

args:

- "amqp://user:${RABBITMQ_PASSWORD}@rabbitmq.default.svc.cluster.local:5672"

" | kubectl apply -f -

kubectl port-forward pod/rabbitmq-0 15672:15672

Navigate to http://localhost:15672 and log in with the username user and the retrieved password.

Step 6: Configure KEDA ScaledObjectNow, we create , define and apply a KEDA ScaledObject to automatically scale the worker/consumer deployment based on the RabbitMQ queue length. For Emphasis, A KEDA ScaledObject is a Custom Resource definition that defines the triggers and scaling behaviors used by KEDA to scale Deployment, StatefulSet and Custom Resource target resources.

echo "

apiVersion: keda.sh/v1alpha1

kind: ScaledObject

metadata:

name: rabbitmq-worker-scaledobject

spec:

scaleTargetRef:

name: rabbitmq-consumer

minReplicaCount: 0

maxReplicaCount: 5

triggers:

- type: rabbitmq

metadata:

host: \"amqp://user:@rabbitmq.default.svc.cluster.local:5672\"

queueName: \"hello\"

queueLength: '1'

" | kubectl apply -f -

Ensure you replace <RABBITMQ_PASSWORD> with the actual password or use a TriggerAuthentication object for secure handling.

Step 7: Verify Scaling to Zero

kubectl get deployment rabbitmq-consumer

With an empty queue, the deployment should scale down to zero replicas.

Step 8: Test Scaling Up Add messages to the RabbitMQ queue via the UI. KEDA will detect the queue length and scale the consumer deployment up accordingly.

Enhancing Resource Management with StormForge

While HPA and KEDA offer robust scaling capabilities, achieving optimal resource utilization can be challenging. Incorrect metric thresholds or inefficient scaling policies can lead to wasted resources or performance bottlenecks. This is where StormForge Optimize Live comes into play.

Before using stormforge view the defaul graph of the CPu Request with No Autoscaling

After using stormforge to do/p>

- Machine Learning-Based Recommendations: Analyze resource usage patterns to provide optimal settings for HPA and vertical scaling.

- Automated Resource Optimization: Dynamically adjust resource requests and limits based on real-time data, ensuring efficient performance and cost management.

- Trend Detection: Forecast resource needs by identifying usage patterns, enabling proactive scaling adjustments.

- Integration with GitOps: Apply recommendations through GitOps workflows, allowing for controlled and automated deployments.

- Improved Performance: Optimize resource allocations to prevent contention and ensure smooth application operations.

- Cost Reduction: Minimize waste by accurately sizing resources based on actual usage.

- Enhanced Efficiency: Leverage machine learning to continuously refine scaling strategies, adapting to changing workloads seamlessly.

Ready to optimize your Kubernetes scaling? Experience the power of StormForge in their sandbox environment—no email required. Access the Sandbox here

Similar to stormforge is CAST AI – Kubernetes Automation Platform and datadog. Considering CAST AI, You have the option to add extra overhead for CPU and RAM, modify their percentile values, and establish a threshold for automatically applying the recommendations you receive. For instance, adjustments can be applied to a workload only after it surpasses specific thresholds.

The CAST AI team is working on enhancing automated workload optimization. In the near future, the platform will introduce seasonal models for resource management, allowing it to predict hourly, daily, weekly, and monthly usage patterns to improve responsiveness and availability.

Conclusion

Effective scaling is pivotal for maintaining the balance between performance and cost in Kubernetes environments. While kubectl scale offers manual control and HPA provides automated scaling based on metrics, KEDA enhances these capabilities by enabling event-driven scaling, including scaling down to zero. To further optimize your scaling strategy, StormForge’s advanced machine learning and automation features ensure that your resources are utilized efficiently, delivering both performance and cost benefits. Embrace these scaling techniques and tools to achieve a resilient, cost-effective, and high-performing Kubernetes infrastructure. Continue exploring advanced autoscaling solutions with KEDA and node scaling options like Karpenterto fully harness the power of Kubernetes in your deployments.