This post shows how to generate text with DeepSeek-R1 using Ollama, a tool for running large language models (LLMs) locally. The commands follow the usage described on the DeepSeek-R1 page at ollama.com.

1. Install Ollama

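Ollama runs on macOS, Linux, and Windows, with installers available at ollama.com/download. On macOS you can also install it using Homebrew:

```bash
brew install ollama
```

The Homebrew formula does not start the Ollama server for you. Start it with `brew services start ollama`, or run `ollama serve` in a separate terminal, before pulling or running models.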

Confirm the installation by checking the help menu:

```bash
ollama help
```

You should now see the usage instructions for the `ollama` CLI.
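When scripting the setup, it can help to wait until the server is actually accepting requests before pulling or running models. The sketch below polls the `/api/version` endpoint of Ollama's HTTP API with `curl`. It assumes the server listens on the default address `http://localhost:11434`, and `wait_for_ollama` is a hypothetical helper name, not part of Ollama.

```bash
# Hypothetical helper (not part of Ollama): wait until a local Ollama server answers.
# Usage: wait_for_ollama [timeout_seconds] [base_url]
# Assumes the default address http://localhost:11434 unless a base URL is given.
wait_for_ollama() {
  local timeout="${1:-30}"
  local url="${2:-http://localhost:11434}"
  local waited=0
  # GET /api/version succeeds once the server is up and serving requests.
  until curl -fsS "$url/api/version" >/dev/null 2>&1; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Ollama server not reachable at $url after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

For example, a setup script could start the server with `ollama serve &` and then run `wait_for_ollama 30 && ollama pull deepseek-r1`.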

2. Download (Pull) the DeepSeek-R1 Model

Download the DeepSeek-R1 model weights using the following command:

```bash
ollama pull deepseek-r1
```

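This command downloads the model and stores it locally. Without a tag, `deepseek-r1` pulls the default variant; other sizes are published as tags such as `deepseek-r1:14b`. On macOS, models are stored in `~/.ollama/models` by default; set the `OLLAMA_MODELS` environment variable to use a different directory.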
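In a setup script you may want to download the model only if it is not already present. A minimal sketch, assuming the output format of `ollama list` (a header row, then one row per local model whose first column is `NAME:TAG`, for example `deepseek-r1:latest`); `ensure_model` is a hypothetical helper name:

```bash
# Hypothetical helper (not part of Ollama): pull a model only if it is missing.
# Assumes `ollama list` prints a header row followed by one row per local model,
# with NAME:TAG (e.g. deepseek-r1:latest) in the first column.
ensure_model() {
  local model="$1"
  # Skip the header, keep the NAME column, and look for an exact (fixed-string) match.
  # A bare name such as "deepseek-r1" is also matched against its ":latest" tag.
  if ollama list | awk 'NR > 1 { print $1 }' | grep -qxF -e "$model" -e "$model:latest"; then
    echo "$model is already available locally"
  else
    ollama pull "$model"
  fi
}
```

Calling `ensure_model deepseek-r1` before the commands below makes the script safe to re-run without downloading the weights again.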

3. Generate Text with DeepSeek-R1

There are two ways to generate text:

Option A: Use `ollama run` with a prompt

For a quick, one-shot prompt, pass the prompt as an argument:

```bash
ollama run deepseek-r1 "Write a short sci-fi story about an interstellar library."
```

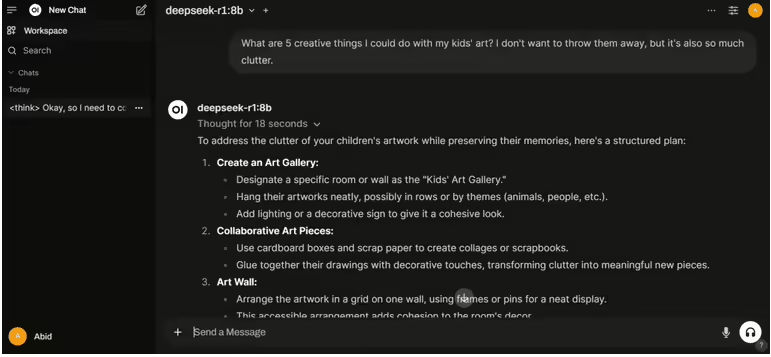

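The first argument (`deepseek-r1`) names the model, and the quoted string is your prompt. Ollama loads the model, runs inference, prints the generated text to your terminal, and then exits. If you prefer a graphical interface to the terminal, Open WebUI can serve as a browser-based front end for the same local Ollama installation.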
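Because the prompt is just a command-line argument, you can build it from a file's contents with command substitution. A minimal sketch; `ask_about_file` is a hypothetical helper name, and very large files may not fit in the model's context window:

```bash
# Hypothetical helper (not part of Ollama): ask DeepSeek-R1 a question about a file.
# Usage: ask_about_file FILE "QUESTION"
ask_about_file() {
  local file="$1" question="$2" prompt
  if [ ! -r "$file" ]; then
    echo "cannot read $file" >&2
    return 1
  fi
  # The question, a blank line, then the file contents form one prompt argument.
  prompt=$(printf '%s\n\n%s' "$question" "$(cat "$file")")
  ollama run deepseek-r1 "$prompt"
}
```

For example: `ask_about_file notes.txt "Summarize this file in three bullet points:"`.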

Option B: Interactive Chat

For an interactive chat session, run the model without a prompt:

```bash
ollama run deepseek-r1
```

Type your message at the `>>>` prompt, press Enter, and DeepSeek-R1 will respond. Example:

```
$ ollama run deepseek-r1
>>> Hello, who are you?
[Model's reply appears here...]
>>> Tell me about the theory of relativity.
[Reply...]
...
```

Type `/bye` or press Ctrl+D to exit the chat.

4. Example Output

Here’s an example of running `ollama run` with a prompt:

```bash
$ ollama run deepseek-r1 "Explain the basics of quantum mechanics."
```

You might see output similar to this:

```
Quantum mechanics is the branch of physics that describes the behavior of matter and energy at very small scales, typically at the atomic or subatomic level...
< Model continues generating more text >
```

DeepSeek-R1 is a reasoning model, so its output usually begins with its reasoning before the final answer; how that reasoning is displayed depends on your Ollama version. Actual responses will vary from run to run.
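If your Ollama version prints the model's reasoning between `<think>` and `</think>` tags, a small filter can keep only the final answer, for example when saving output to a file. This is a minimal sketch under that assumption; newer Ollama versions may format or separate the reasoning differently, so check your own output first. `strip_think` is a hypothetical helper name.

```bash
# Hypothetical filter (not part of Ollama): drop every line from the one containing
# <think> through the one containing </think>, and pass all other lines through.
# Assumes the tag lines themselves carry no answer text.
strip_think() {
  awk '
    /<think>/   { skipping = 1 }   # start of the reasoning block
    !skipping   { print }          # print only lines outside the block
    /<\/think>/ { skipping = 0 }   # end of block: resume printing on the next line
  '
}
```

For example: `ollama run deepseek-r1 "Explain the basics of quantum mechanics." | strip_think > answer.txt`.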

5. (Optional) Scripting with Ollama

You can run prompts from a shell script or from other programs:

```bash
#!/usr/bin/env bash
# my_script.sh: send one prompt to DeepSeek-R1 and print the response
PROMPT="Suggest three unique recipes using avocados."
ollama run deepseek-r1 "$PROMPT"
```

Run the script:

```bash
chmod +x my_script.sh
./my_script.sh
```
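Other programs can talk to the same local server over Ollama's HTTP API instead of the CLI. The sketch below sends one prompt to the `/api/generate` endpoint with `curl` and `"stream": false`, so the server returns a single JSON object whose `response` field holds the generated text. It assumes the default address `http://localhost:11434`; `json_string` and `ollama_generate` are hypothetical helper names, and the escaping covers only backslashes, quotes, newlines, carriage returns, and tabs.

```bash
# Hypothetical helper: encode a string as a JSON string literal (quotes included).
# Handles backslash, double quote, newline, carriage return and tab only;
# other control characters are assumed not to appear in the input.
json_string() {
  local s="$1" bs='\' q='"' nl=$'\n' cr=$'\r' tab=$'\t'
  s=${s//"$bs"/"$bs$bs"}    # escape backslashes first
  s=${s//"$q"/"$bs$q"}      # then double quotes
  s=${s//"$nl"/"${bs}n"}
  s=${s//"$cr"/"${bs}r"}
  s=${s//"$tab"/"${bs}t"}
  printf '"%s"' "$s"
}

# Hypothetical helper: send one non-streaming request to a local Ollama server
# (assumed at http://localhost:11434) and print the raw JSON reply.
ollama_generate() {
  local model="$1" prompt="$2"
  curl -fsS http://localhost:11434/api/generate \
    -H 'Content-Type: application/json' \
    -d "{\"model\": $(json_string "$model"), \"prompt\": $(json_string "$prompt"), \"stream\": false}"
}
```

If `jq` is installed, `ollama_generate deepseek-r1 "Suggest three unique recipes using avocados." | jq -r .response` prints just the generated text. Any language with an HTTP client can send the same JSON body.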

That’s It!

You now have a DeepSeek-R1 text generation demo using the Ollama CLI, following the documentation at ollama.com/library/deepseek-r1.

Tip: To explore other models available on Ollama, visit ollama.com/library. Replace `deepseek-r1` with your chosen model name in the `pull` and `run` commands.