For developers working with machine learning and AI, running and managing models efficiently matters. Ollama is a tool for downloading and running large language models locally, and Open WebUI is a self-hosted web interface for chatting with those models. Deploying both in a Kubernetes cluster lets you manage them declaratively, have failed containers restarted automatically, and scale them like any other workload.

In this blog post, we’ll walk you through setting up Ollama and Open WebUI in Kubernetes using Docker Desktop on macOS. By the end of this guide, you will have both running in a local Kubernetes cluster, with Open WebUI available in your browser for pulling models and configuring the Ollama connection.

Prerequisites

Before we begin, ensure that you have the following tools installed and configured:

- Docker Desktop
  - Install Docker Desktop on your Mac if you haven’t already.
  - Enable Kubernetes in Docker Desktop under Settings > Kubernetes.
- Git
  - You will need Git to clone the repository.
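Before moving on, a quick preflight check can confirm that the tools are on your PATH and that kubectl points at Docker Desktop’s cluster. This is a minimal sketch, not part of the repository: `require_cmd` and `preflight` are hypothetical helper names, and the script assumes Docker Desktop’s default kubectl context name, `docker-desktop`, and that kubectl is installed (Docker Desktop normally provides it).

```shell
# Hypothetical helper: succeed if a command is available, otherwise report it and fail.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    return 0
  fi
  echo "missing required command: $1" >&2
  return 1
}

# Hypothetical preflight for this guide: checks the tools, the kubectl context
# (assumed to be Docker Desktop's default "docker-desktop"), and that the API server answers.
preflight() {
  for cmd in docker git kubectl; do
    require_cmd "$cmd" || return 1
  done
  ctx=$(kubectl config current-context) || return 1
  if [ "$ctx" != "docker-desktop" ]; then
    echo "kubectl context is '$ctx'; switch with: kubectl config use-context docker-desktop" >&2
    return 1
  fi
  # Fails if the cluster is unreachable, e.g. Kubernetes is not enabled yet.
  kubectl get nodes >/dev/null
}

# Usage:
# preflight && echo "Ready to deploy"
```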

Running Ollama and Open WebUI in a Kubernetes Cluster

Clone the repository

```shell
git clone https://github.com/ajeetraina/ollama-openwebui-kubernetes
cd ollama-openwebui-kubernetes
```

Apply the Kubernetes Manifests

From the repository root, apply every manifest in the current directory:

```shell
kubectl apply -f ./
```
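The pods may take a few minutes to start while the container images download. One way to wait for them is `kubectl rollout status`, wrapped here in a hypothetical helper, `wait_for_rollouts`. The Deployment names `ollama` and `open-webui` are an assumption inferred from the pod names in the listing in the next step (a Deployment’s pods are named `<deployment>-<pod-template-hash>-<suffix>`); confirm them with `kubectl get deployments -n ollama`.

```shell
# Hypothetical helper: wait for each named Deployment in a namespace to finish
# rolling out, giving up on the first one that fails or exceeds the timeout.
# Usage: wait_for_rollouts <namespace> <deployment>...
wait_for_rollouts() {
  ns=$1
  shift
  for deploy in "$@"; do
    echo "waiting for deployment/$deploy in namespace $ns"
    kubectl rollout status "deployment/$deploy" -n "$ns" --timeout=300s || return 1
  done
}

# Deployment names below are assumed from the pod names, not read from the manifests.
# wait_for_rollouts ollama ollama open-webui
```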

Listing the Kubernetes Pods

```shell
kubectl get po -A
```

The output should look similar to this:

```
NAMESPACE              NAME                                         READY   STATUS    RESTARTS   AGE
kube-system            coredns-55cb58b774-n5v46                     1/1     Running   0          85m
kube-system            coredns-55cb58b774-snwn9                     1/1     Running   0          85m
kube-system            etcd-docker-desktop                          1/1     Running   1          85m
kube-system            kube-apiserver-docker-desktop                1/1     Running   1          85m
kube-system            kube-controller-manager-docker-desktop       1/1     Running   1          85m
kube-system            kube-proxy-t9vf4                             1/1     Running   0          85m
kube-system            kube-scheduler-docker-desktop                1/1     Running   1          85m
kube-system            storage-provisioner                          1/1     Running   0          85m
kube-system            vpnkit-controller                            1/1     Running   0          85m
kubernetes-dashboard   dashboard-metrics-scraper-7cbc78bdc6-8kv8d   1/1     Running   0          80m
kubernetes-dashboard   kubernetes-dashboard-7d748f6c6b-nfsjz        1/1     Running   0          80m
ollama                 ollama-56c4986548-qk2r6                      2/2     Running   0          84m
ollama                 open-webui-85799c995c-zxhgf                  1/1     Running   0          84m
```
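In this output, READY is the number of ready containers over the total in the pod, so `2/2` for the `ollama` pod means both of its containers are up. To spot problems in a longer listing, a small hypothetical filter, `not_ready_pods`, can print any pod whose containers are not all ready or whose STATUS is not `Running`; it assumes the `-A` column layout shown above. Alternatively, `kubectl wait --for=condition=Ready pod --all -n ollama --timeout=300s` blocks until every pod in the namespace reports Ready.

```shell
# Hypothetical filter: read `kubectl get pods -A` output on stdin and print
# namespace/name for every pod that is not fully ready (READY x/y with x != y)
# or whose STATUS column is not "Running". The header line is skipped.
not_ready_pods() {
  awk 'NR > 1 {
    split($3, ready, "/")
    if (ready[1] != ready[2] || $4 != "Running") print $1 "/" $2
  }'
}

# Usage:
# kubectl get pods -A | not_ready_pods
```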

Accessing Open WebUI

Forward local port 8080 to the `svc-open-webui` Service in the `ollama` namespace:

```shell
kubectl port-forward svc/svc-open-webui 8080:8080 -n ollama
```
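`kubectl port-forward` keeps running in the foreground until you stop it with Ctrl+C, so either leave that terminal open or run the command in the background. The sketch below assumes the latter and polls the local port with `curl` until Open WebUI answers; `wait_for_http` is a hypothetical helper, and the default of 30 attempts is arbitrary.

```shell
# Hypothetical helper: poll a URL until it returns a non-error (< 400) HTTP
# response or the attempts run out, sleeping one second between attempts.
# Usage: wait_for_http <url> [attempts]
wait_for_http() {
  url=$1
  attempts=${2:-30}
  i=1
  while :; do
    # -f: fail on HTTP error statuses; -s: no progress meter or error output
    if curl -fs -o /dev/null "$url"; then
      return 0
    fi
    [ "$i" -ge "$attempts" ] && break
    i=$((i + 1))
    sleep 1
  done
  echo "no response from $url after $attempts attempts" >&2
  return 1
}

# Usage: start the port-forward in the background, then wait for Open WebUI.
# kubectl port-forward svc/svc-open-webui 8080:8080 -n ollama &
# wait_for_http http://localhost:8080 && echo "Open WebUI is reachable"
```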

Opening Open WebUI in Your Browser

With the port-forward running, open http://localhost:8080 to access Open WebUI.

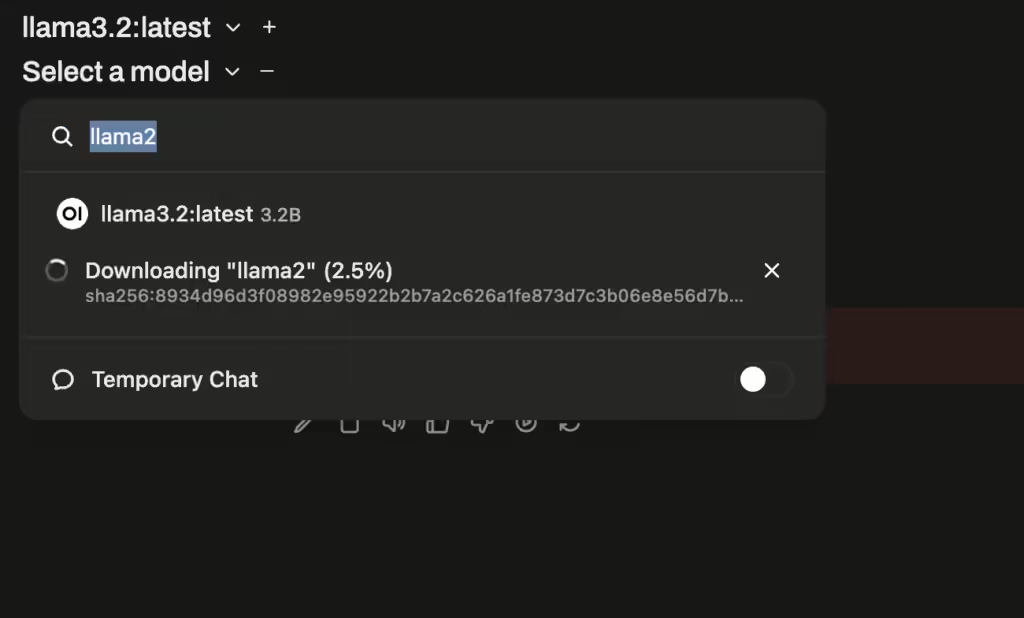

Pull a model

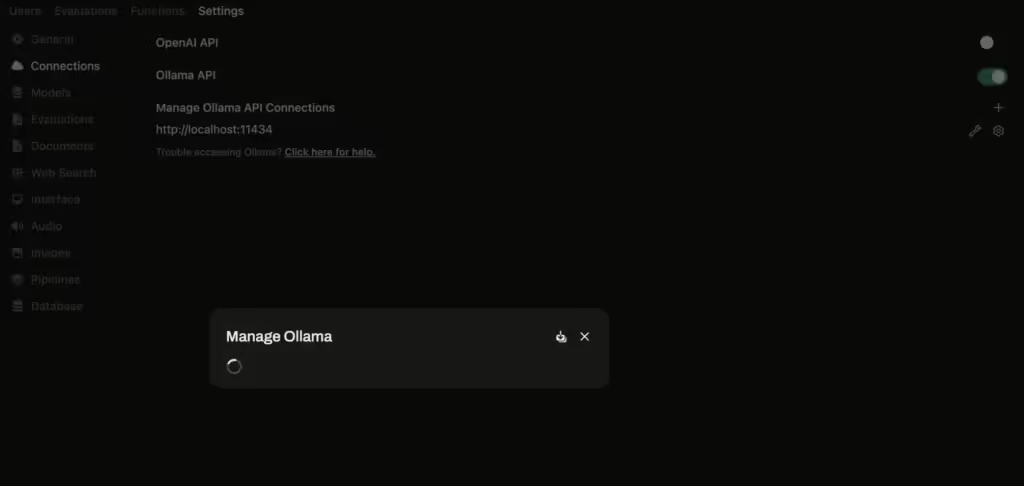
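If you prefer the command line, Ollama also serves an HTTP API (port 11434 by default), and `POST /api/pull` downloads a model. This sketch assumes you have forwarded that port from the Ollama Service and that the Service exposes 11434; the Service name `svc-ollama` is hypothetical (list the real one with `kubectl get svc -n ollama`), `pull_payload`, `pull_model`, and the `OLLAMA_URL` variable are hypothetical names used only here, and `llama3.2` is just an example model from the Ollama library.

```shell
# Hypothetical helper: build the JSON body for Ollama's POST /api/pull,
# asking for a single final response instead of a progress stream.
# Assumes the model name contains no double quotes.
pull_payload() {
  printf '{"model": "%s", "stream": false}\n' "$1"
}

# Hypothetical helper: ask the Ollama server to download a model.
# OLLAMA_URL is a variable used only by this sketch; it defaults to http://localhost:11434.
# Usage: pull_model <model>
pull_model() {
  curl -fsS "${OLLAMA_URL:-http://localhost:11434}/api/pull" \
    -H 'Content-Type: application/json' \
    -d "$(pull_payload "$1")"
}

# Usage (hypothetical Service name; check yours with: kubectl get svc -n ollama):
# kubectl port-forward svc/svc-ollama 11434:11434 -n ollama &
# pull_model llama3.2
```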

Configuration Settings

In Open WebUI’s connection settings, disable the OpenAI API connection, then enable the Ollama API connection and set its URL to http://localhost:11434.
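Open WebUI’s backend, not your browser, makes the requests to Ollama, so `localhost` in that setting resolves inside the Open WebUI container. If the connection is reported as unreachable, one alternative is the Ollama Service’s cluster DNS name, which follows the standard `<service>.<namespace>.svc.cluster.local` pattern. The helper `ollama_cluster_url` and the Service name `svc-ollama` below are hypothetical; substitute the name shown by `kubectl get svc -n ollama`.

```shell
# Hypothetical helper: build a Service's in-cluster URL, assuming the default
# cluster domain "cluster.local" and Ollama's default port 11434.
# Usage: ollama_cluster_url <service> <namespace> [port]
ollama_cluster_url() {
  printf 'http://%s.%s.svc.cluster.local:%s\n' "$1" "$2" "${3:-11434}"
}

# Example with a hypothetical Service name; replace it with yours:
# ollama_cluster_url svc-ollama ollama
# Optional check from a throwaway pod inside the cluster (lists local models):
# kubectl run curl-test --rm -it --restart=Never --image=curlimages/curl -n ollama \
#   -- curl -s "$(ollama_cluster_url svc-ollama ollama)/api/tags"
```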

Conclusion

Congratulations! You’ve deployed Ollama and Open WebUI in a Kubernetes cluster and can now interact with models directly through Open WebUI. This setup is a practical way to start managing AI-powered applications with Kubernetes, and Docker Desktop’s built-in cluster makes it easy to get started on macOS.

By following these steps, you can quickly spin up machine learning models in Kubernetes and work with them through a web interface.