Model Context Protocol (MCP) represents a significant advancement in connecting AI models with the external world. As large language models (LLMs) like Claude and GPT continue to evolve, their isolation from real-world data sources and tools has become an increasingly limiting factor. MCP addresses this challenge by providing a standardized way for AI systems to connect with external data sources, tools, and environments.

The Problem: Isolated AI Models

Before diving into MCP’s technical details, let’s understand the fundamental problem it solves:

Traditional LLMs operate in isolation, cut off from the systems where data lives. Even the most sophisticated models are limited by their disconnection from:

- Real-time data sources

- Enterprise knowledge repositories

- Development environments

- Business applications

- APIs and services

This isolation creates what is often called the “N×M problem”: connecting N different AI models to M different tools requires building and maintaining up to N×M separate integrations. This approach is unsustainable and fragmented, and it duplicates significant development effort. A shared protocol reduces the count to N+M, because each AI application implements the protocol once and each tool implements it once.
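The gap between the two integration counts is easy to see with numbers. In this minimal sketch the model and tool counts are arbitrary values chosen only for illustration, and the function names are hypothetical.

```python
def integrations_without_protocol(models: int, tools: int) -> int:
    """Every model needs its own custom connector for every tool."""
    return models * tools


def integrations_with_protocol(models: int, tools: int) -> int:
    """Each model implements the client side once; each tool implements the server side once."""
    return models + tools


if __name__ == "__main__":
    # Illustrative numbers only: 5 AI applications, 20 tools.
    n_models, n_tools = 5, 20
    print(integrations_without_protocol(n_models, n_tools))  # 100
    print(integrations_with_protocol(n_models, n_tools))     # 25
```

The savings grow with scale: adding a 21st tool costs one new server rather than one new connector per model.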

What is Model Context Protocol?

Model Context Protocol (MCP) is an open-source standard introduced by Anthropic in November 2024. It defines a universal method for connecting AI applications with external data sources and tools through a standardized interface.

Think of MCP like a “USB-C port for AI applications” – it provides a common protocol that allows any AI system to connect with any data source or tool that implements the protocol, much like USB-C allows different devices to connect with various peripherals using the same standard.

Core Architecture

MCP follows a client-server architecture with several key components:

- MCP Host: The user-facing AI interface (e.g., Claude Desktop, IDEs with AI features, or custom AI applications)

- MCP Client: A protocol client embedded in the host application; each client maintains a one-to-one connection with a single MCP server, and a host can run several clients at once

- MCP Server: External programs that expose specific capabilities (tools, data access, domain prompts) and connect to various data sources like Google Drive, GitHub, databases, etc.

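- Transport Layer: The communication mechanism between clients and servers: STDIO for local servers launched as subprocesses, and HTTP for remote servers (originally HTTP with Server-Sent Events, replaced by the Streamable HTTP transport in the March 2025 revision of the specification)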

All communication in MCP uses JSON-RPC 2.0 as the underlying message protocol, providing a standardized structure for requests, responses, and notifications.
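The three JSON-RPC 2.0 message kinds can be told apart by their fields: a request has an `id` and a `method`, a response has an `id` and either a `result` or an `error`, and a notification has a `method` but no `id`. Over the STDIO transport, each message is written as a single line of JSON terminated by a newline. The sketch below illustrates both points using only the standard library; the helper names `encode_message`, `decode_line`, and `classify` are hypothetical and not part of any MCP SDK.

```python
import json


def encode_message(message: dict) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line.

    json.dumps without indent emits no raw newlines (newlines inside strings
    are escaped), so the trailing newline can serve as the message delimiter.
    """
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_line(line: bytes) -> dict:
    """Parse one newline-delimited line back into a message dict."""
    return json.loads(line.decode("utf-8"))


def classify(message: dict) -> str:
    """Return 'request', 'response', or 'notification' for a JSON-RPC 2.0 message."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        return "request" if "id" in message else "notification"
    if "id" in message and ("result" in message or "error" in message):
        return "response"
    raise ValueError("unrecognized message shape")


if __name__ == "__main__":
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
    response = {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}
    notification = {"jsonrpc": "2.0", "method": "notifications/initialized"}

    for msg in (request, response, notification):
        wire = encode_message(msg)
        assert wire.count(b"\n") == 1  # exactly one delimiter per message
        print(classify(decode_line(wire)))  # request, response, notification
```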

Server-side Primitives

MCP servers expose three primary types of capabilities:

Tools

Tools are functions that servers expose to clients, allowing the AI model to perform actions or interact with external systems. Examples include:

- Querying a database

- Sending an email

- Retrieving weather data

- Manipulating files

Tools follow a structured format: each tool has a name, a description, and an `inputSchema` (a JSON Schema object) that defines its input parameters. Here is an example tool definition as a server would advertise it in its response to a `tools/list` request:

```json
{
  "name": "get_weather",
  "description": "Retrieves current weather data for a location",
  "inputSchema": {
    "type": "object",
    "properties": {
      "location": {
        "type": "string",
        "description": "City name or coordinates"
      },
      "units": {
        "type": "string",
        "enum": ["celsius", "fahrenheit"],
        "default": "celsius"
      }
    },
    "required": ["location"]
  }
}
```
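Before executing a tool, a server typically checks the incoming arguments against the tool's input schema. Real implementations use a full JSON Schema validator. The sketch below is a deliberately simplified, hypothetical `validate_arguments` helper that handles only the schema features used by `get_weather` (`required`, `type: string`, `enum`, and `default`).

```python
from typing import Any

# Input schema for the get_weather tool, mirroring the definition above.
GET_WEATHER_SCHEMA: dict[str, Any] = {
    "type": "object",
    "properties": {
        "location": {"type": "string", "description": "City name or coordinates"},
        "units": {
            "type": "string",
            "enum": ["celsius", "fahrenheit"],
            "default": "celsius",
        },
    },
    "required": ["location"],
}


def validate_arguments(schema: dict[str, Any], arguments: dict[str, Any]) -> dict[str, Any]:
    """Check arguments against a tiny subset of JSON Schema and fill in defaults.

    Raises ValueError on the first problem found; returns a new dict with defaults applied.
    """
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in arguments:
            raise ValueError(f"missing required argument: {name}")

    result = dict(arguments)
    for name, spec in properties.items():
        if name not in result:
            if "default" in spec:
                result[name] = spec["default"]  # apply the schema default
            continue
        value = result[name]
        if spec.get("type") == "string" and not isinstance(value, str):
            raise ValueError(f"argument {name!r} must be a string")
        if "enum" in spec and value not in spec["enum"]:
            raise ValueError(f"argument {name!r} must be one of {spec['enum']}")
    return result


if __name__ == "__main__":
    print(validate_arguments(GET_WEATHER_SCHEMA, {"location": "San Francisco"}))
    # {'location': 'San Francisco', 'units': 'celsius'}
```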

Resources

Resources are data or content that servers can provide to clients. Unlike tools, resources are not meant to perform actions or cause side effects; they provide information that can be used as context for LLM interactions.

Examples include:

- Documents

- Database records

- Configuration settings
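In the protocol, each resource is identified by a URI, and a client fetches its contents with a `resources/read` request (after optionally discovering what is available with `resources/list`). The sketch below is a hypothetical in-memory handler, not SDK code: the `config://app/settings` URI and its contents are invented for illustration, while the `contents` list with `uri`, `mimeType`, and `text` fields follows the shape the specification describes for text resources.

```python
import json
from typing import Any

# Hypothetical resource store: URI -> (name, MIME type, text content).
RESOURCES: dict[str, tuple[str, str, str]] = {
    "config://app/settings": (
        "App settings",
        "application/json",
        json.dumps({"theme": "dark", "language": "en"}),
    ),
}


def handle_resources_list() -> dict[str, Any]:
    """Result payload for a resources/list request."""
    return {
        "resources": [
            {"uri": uri, "name": name, "mimeType": mime}
            for uri, (name, mime, _text) in RESOURCES.items()
        ]
    }


def handle_resources_read(params: dict[str, Any]) -> dict[str, Any]:
    """Result payload for a resources/read request; raises KeyError for unknown URIs."""
    uri = params["uri"]
    _name, mime, text = RESOURCES[uri]
    return {"contents": [{"uri": uri, "mimeType": mime, "text": text}]}


if __name__ == "__main__":
    print(handle_resources_list())
    print(handle_resources_read({"uri": "config://app/settings"}))
```

Note that reading a resource returns data only; nothing in the handler changes state, which is the practical difference from a tool.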

Prompts

Prompts are predefined templates and workflows that servers can define for standardized LLM interactions. They help guide the model toward specific tasks or domains with established patterns.
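A client retrieves a prompt with a `prompts/get` request, passing the prompt's name and any arguments, and receives a list of messages ready to send to the model. In the sketch below, the `summarize_weather` prompt, its `location` argument, and the `PROMPTS` registry are hypothetical; the result shape (a `description` plus `messages` with `role` and typed `content`) follows the specification.

```python
from typing import Any, Callable

# Hypothetical prompt registry: name -> (description, template function).
PROMPTS: dict[str, tuple[str, Callable[[dict[str, str]], str]]] = {
    "summarize_weather": (
        "Summarize current conditions for a city",
        lambda args: (
            "Using the get_weather tool, summarize today's weather in "
            f"{args['location']} in two sentences."
        ),
    ),
}


def handle_prompts_get(params: dict[str, Any]) -> dict[str, Any]:
    """Result payload for a prompts/get request."""
    description, render = PROMPTS[params["name"]]
    text = render(params.get("arguments", {}))
    return {
        "description": description,
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


if __name__ == "__main__":
    result = handle_prompts_get(
        {"name": "summarize_weather", "arguments": {"location": "San Francisco"}}
    )
    print(result["messages"][0]["content"]["text"])
```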

Client-side Primitives

Roots

A root is a URI (in current practice, a file:// URI) that the client shares with servers to mark a location within the host’s file system or environment that is relevant to the current work. Roots tell servers where they should operate and where relevant resources live; servers are expected to respect these boundaries, but roots are a coordination mechanism rather than an enforced sandbox.
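A server learns the client's roots through a `roots/list` request; each root carries a `uri` and an optional `name`. The sketch below shows one way a server might honor those boundaries before touching a file. The `root_paths` and `is_within_roots` helpers and the example paths are hypothetical, and the check is purely lexical: it rejects `..` segments rather than resolving them, and it does not follow symlinks.

```python
from pathlib import PurePosixPath
from urllib.parse import unquote, urlparse

# Example roots/list result, as a client might return it (paths are illustrative).
ROOTS_RESULT = {
    "roots": [
        {"uri": "file:///home/user/projects/myproject", "name": "My Project"},
    ]
}


def root_paths(result: dict) -> list[PurePosixPath]:
    """Convert file:// root URIs into POSIX paths."""
    paths = []
    for root in result["roots"]:
        parsed = urlparse(root["uri"])
        if parsed.scheme != "file":
            continue  # only file:// roots are handled in this sketch
        paths.append(PurePosixPath(unquote(parsed.path)))
    return paths


def is_within_roots(candidate: str, roots: list[PurePosixPath]) -> bool:
    """True if candidate equals or sits below one of the roots (lexical check only)."""
    path = PurePosixPath(candidate)
    if ".." in path.parts:
        return False  # reject traversal segments instead of resolving them
    return any(path == root or root in path.parents for root in roots)


if __name__ == "__main__":
    roots = root_paths(ROOTS_RESULT)
    print(is_within_roots("/home/user/projects/myproject/src/app.py", roots))  # True
    print(is_within_roots("/etc/passwd", roots))  # False
```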

Sampling

Sampling is a powerful feature that reverses the traditional client-server relationship. Instead of clients making requests to servers, sampling allows MCP servers to request LLM completions from the client. This gives clients control over model selection, hosting, privacy, and cost management.
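Concretely, the server sends the client a `sampling/createMessage` request containing the conversation messages and a required `maxTokens` limit, plus optional fields such as `systemPrompt`. The sketch below only builds that request as a Python dict; the `build_sampling_request` helper and the example text are hypothetical, and it is the client, not the server, that decides which model actually produces the completion.

```python
import itertools
from typing import Any, Optional

# Counter for JSON-RPC request ids issued by this hypothetical server.
_request_ids = itertools.count(1)


def build_sampling_request(
    user_text: str, max_tokens: int = 200, system_prompt: Optional[str] = None
) -> dict[str, Any]:
    """Build a sampling/createMessage request for a server to send to its client."""
    params: dict[str, Any] = {
        "messages": [{"role": "user", "content": {"type": "text", "text": user_text}}],
        "maxTokens": max_tokens,  # required: caps the length of the completion
    }
    if system_prompt is not None:
        params["systemPrompt"] = system_prompt  # optional; the client decides whether to use it
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "sampling/createMessage",
        "params": params,
    }


if __name__ == "__main__":
    request = build_sampling_request(
        "Classify this support ticket as 'billing' or 'technical': My invoice was charged twice.",
        system_prompt="Answer with a single word.",
    )
    print(request["method"], request["params"]["maxTokens"])
```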

Protocol Flow in Action

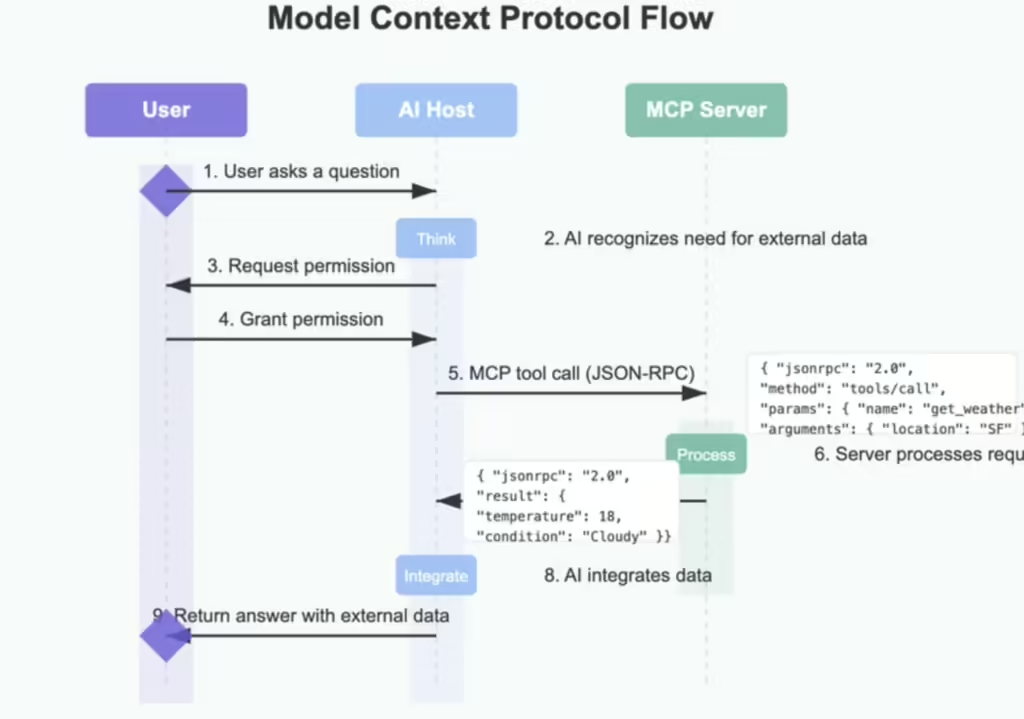

Let’s walk through a typical MCP interaction to understand how it works:

- Connection and Handshake: When an MCP host starts, each of its clients connects to a configured MCP server using either STDIO (for local servers) or HTTP (for remote servers). The client sends an `initialize` request, the two sides agree on a protocol version and exchange capability information, and the client then sends a `notifications/initialized` notification.

- User Request: A user asks the AI application a question that requires external information (e.g., “What’s the weather like in San Francisco today?”).

- LLM Assessment: The client has already fetched the server’s available tools (via `tools/list`) and passed their names, descriptions, and schemas to the model. The model recognizes that it needs external data and decides which tool to call.

- Permission Request: Depending on the host, the client may display a permission prompt asking the user to approve the tool call.

- Tool Invocation: Once approved, the client sends a `tools/call` JSON-RPC request to the appropriate MCP server.

- Server Processing: The server handles the request, performing whatever action is needed (e.g., querying a weather service).

- Response Return: The server returns the information to the client.

- Context Integration: The client passes the tool result back to the model as additional context.

- Response Generation: The model generates a response using the external data.
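The protocol side of this flow can be sketched as the sequence of JSON-RPC messages a client sends: the handshake, tool discovery, then a tool call. To stay self-contained, the sketch wires the client to a toy in-process server function instead of a real transport. `toy_server`, `run_flow`, the `example-client` name, and the mock weather values are hypothetical; `2024-11-05` is the first published protocol revision, and newer revisions are negotiated the same way.

```python
import json
from typing import Any, Optional

PROTOCOL_VERSION = "2024-11-05"  # first published spec revision; newer ones exist


def toy_server(message: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Hypothetical in-process server: answers requests and ignores notifications."""
    if "id" not in message:
        return None  # notifications such as notifications/initialized get no reply
    method = message["method"]
    if method == "initialize":
        result: dict[str, Any] = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},  # this server only offers tools
            "serverInfo": {"name": "WeatherServer", "version": "0.1.0"},
        }
    elif method == "tools/list":
        result = {
            "tools": [
                {
                    "name": "get_weather",
                    "description": "Retrieves current weather data for a location",
                    "inputSchema": {
                        "type": "object",
                        "properties": {"location": {"type": "string"}},
                        "required": ["location"],
                    },
                }
            ]
        }
    elif method == "tools/call":
        data = {"temperature": 18, "condition": "Partly cloudy"}  # mock values
        result = {"content": [{"type": "text", "text": json.dumps(data)}], "isError": False}
    else:
        return {
            "jsonrpc": "2.0",
            "id": message["id"],
            "error": {"code": -32601, "message": f"Method not found: {method}"},
        }
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}


def run_flow() -> list[dict[str, Any]]:
    """Handshake, discover tools, then call one; returns the server's replies in order."""
    outgoing = [
        {
            "jsonrpc": "2.0",
            "id": 1,
            "method": "initialize",
            "params": {
                "protocolVersion": PROTOCOL_VERSION,
                "capabilities": {},
                "clientInfo": {"name": "example-client", "version": "0.1.0"},
            },
        },
        {"jsonrpc": "2.0", "method": "notifications/initialized"},
        {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
        {
            "jsonrpc": "2.0",
            "id": 3,
            "method": "tools/call",
            "params": {"name": "get_weather", "arguments": {"location": "San Francisco"}},
        },
    ]
    replies = []
    for message in outgoing:
        reply = toy_server(message)  # stands in for writing to a transport and reading back
        if reply is not None:
            replies.append(reply)
    return replies


if __name__ == "__main__":
    for reply in run_flow():
        print(reply["id"], sorted(reply["result"]))
```

Steps that involve the model and the user (choosing a tool, approving the call) happen in the host between these messages and are not part of the wire protocol shown here.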

Here’s a simplified example of the JSON-RPC messages exchanged:

Client Request:

```json
{
  "jsonrpc": "2.0",
  "id": 1,
  "method": "tools/call",
  "params": {
    "name": "get_weather",
    "arguments": {
      "location": "San Francisco",
      "units": "celsius"
    }
  }
}
```

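Server Response (the tool output is returned as a list of content items):

```json
{
  "jsonrpc": "2.0",
  "id": 1,
  "result": {
    "content": [
      {
        "type": "text",
        "text": "{\"temperature\": 18, \"condition\": \"Partly cloudy\", \"humidity\": 65, \"wind\": \"12 km/h\"}"
      }
    ],
    "isError": false
  }
}
```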
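On the client side, a `tools/call` response is unpacked before its contents reach the model. Per the specification, a successful result carries a `content` list of typed items (text, images, and so on) and an `isError` flag that signals a tool-level failure, which is distinct from a JSON-RPC `error` meaning the request itself failed. The hypothetical `extract_tool_text` helper below collects the text items and surfaces both kinds of failure.

```python
import json
from typing import Any


class ToolCallError(Exception):
    """Raised when a tool call fails at the protocol level or the tool level."""


def extract_tool_text(response: dict[str, Any]) -> str:
    """Join the text content items of a tools/call response."""
    if "error" in response:  # JSON-RPC error: the request itself failed
        raise ToolCallError(response["error"].get("message", "unknown error"))
    result = response["result"]
    texts = [item["text"] for item in result.get("content", []) if item.get("type") == "text"]
    if result.get("isError", False):  # the tool ran but reported a failure
        raise ToolCallError("\n".join(texts) or "tool reported an error")
    return "\n".join(texts)


if __name__ == "__main__":
    response = {
        "jsonrpc": "2.0",
        "id": 1,
        "result": {
            "content": [{"type": "text", "text": json.dumps({"temperature": 18})}],
            "isError": False,
        },
    }
    print(json.loads(extract_tool_text(response))["temperature"])  # 18
```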

Benefits of MCP

MCP transforms how AI systems interact with external tools and data sources in several important ways:

- Standardization: Instead of creating custom integrations for each combination of AI model and tool, developers can use MCP as a common interface.

- Reusability: The growing ecosystem of MCP servers means developers can reuse existing integrations rather than building from scratch.

- Scalability: Adding a new tool or data source usually means configuring one more MCP server, without changing client code.

- Security: MCP supports human approval of tool calls (surfaced by hosts as permission prompts) and OAuth-based authorization for remote servers.

- Cross-model compatibility: The same MCP servers can work with different AI models from various vendors, reducing vendor lock-in.

MCP Authentication and Security

Earlier versions of MCP lacked a standardized authorization mechanism, which made it difficult to connect to remote services securely. The March 2025 revision of the specification added an authorization framework based on OAuth 2.1, providing:

- Dynamic Client Registration (DCR): Automatic client registration with OAuth servers

- Endpoint Discovery: Automatic discovery of OAuth endpoints

- Secure Token Management: Precise access control with properly scoped tokens

- Multi-User Support: Ability to handle authorization for multiple users simultaneously

These improvements make MCP suitable for enterprise environments where security and access control are critical.
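Endpoint discovery relies on OAuth 2.0 Authorization Server Metadata (RFC 8414). As we read the March 2025 revision, a client discards the path of the MCP server URL and requests `/.well-known/oauth-authorization-server` on that origin; the returned JSON document lists endpoints such as `authorization_endpoint`, `token_endpoint`, and `registration_endpoint` (the last one used for Dynamic Client Registration). The sketch below, with hypothetical helper names and example URLs, only computes that URL and picks the endpoints out of a document you have already fetched. Later revisions changed the discovery steps, so treat this as tied to that revision.

```python
from typing import Any
from urllib.parse import urlsplit, urlunsplit

WELL_KNOWN_PATH = "/.well-known/oauth-authorization-server"  # RFC 8414


def metadata_url(mcp_server_url: str) -> str:
    """Discard the path (and query) of the MCP server URL and append the well-known path."""
    parts = urlsplit(mcp_server_url)
    return urlunsplit((parts.scheme, parts.netloc, WELL_KNOWN_PATH, "", ""))


def oauth_endpoints(metadata: dict[str, Any]) -> dict[str, str]:
    """Pick the endpoints a client needs out of a fetched metadata document."""
    wanted = ("authorization_endpoint", "token_endpoint", "registration_endpoint")
    return {key: metadata[key] for key in wanted if key in metadata}


if __name__ == "__main__":
    print(metadata_url("https://api.example.com/v1/mcp"))
    # https://api.example.com/.well-known/oauth-authorization-server
```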

Building Your First MCP Server

Let’s look at a simple example of an MCP server built with FastMCP, the high-level server API in the official MCP Python SDK (published on PyPI as `mcp`):

```python
from mcp.server.fastmcp import FastMCP

# Create an MCP server
mcp = FastMCP("WeatherServer")


# Define a weather tool
@mcp.tool()
def get_weather(location: str, units: str = "celsius") -> dict:
    """Get current weather for a location.

    Args:
        location: City name or coordinates
        units: Temperature units (celsius or fahrenheit)

    Returns:
        Weather data including temperature and conditions
    """
    # In a real implementation, this would call a weather API
    # This is just a mock example
    return {
        "temperature": 18 if units == "celsius" else 64,
        "condition": "Partly cloudy",
        "humidity": 65,
        "wind": "12 km/h",
    }


# Run the server
if __name__ == "__main__":
    mcp.run(transport="stdio")
```

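To run this server directly, execute:

```bash
python weather_server.py
```

Because it uses the STDIO transport, the process then waits for JSON-RPC messages on standard input. In practice, an MCP host such as Claude Desktop starts the script as a subprocess and talks to it over stdin and stdout.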
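Hosts usually launch STDIO servers from a configuration file. Claude Desktop, for example, reads an `mcpServers` object from its `claude_desktop_config.json`, where each entry gives the `command` and `args` used to start a server. The sketch below merges such an entry using the standard library; the `add_stdio_server` and `update_config_file` helpers and the script path are hypothetical, and because the file's location varies by operating system, the path is passed in rather than guessed.

```python
import json
from pathlib import Path
from typing import Any


def add_stdio_server(
    config: dict[str, Any], name: str, command: str, args: list[str]
) -> dict[str, Any]:
    """Return a copy of a host config with one STDIO server entry added or replaced."""
    updated = dict(config)
    servers = dict(updated.get("mcpServers", {}))  # copy so the input is not mutated
    servers[name] = {"command": command, "args": args}
    updated["mcpServers"] = servers
    return updated


def update_config_file(path: Path, name: str, command: str, args: list[str]) -> None:
    """Read the JSON config at path (if present), add the server, and write it back."""
    config = json.loads(path.read_text()) if path.exists() else {}
    path.write_text(json.dumps(add_stdio_server(config, name, command, args), indent=2))


if __name__ == "__main__":
    # Hypothetical absolute path to the server script from the example above.
    config = add_stdio_server({}, "weather", "python", ["/absolute/path/to/weather_server.py"])
    print(json.dumps(config, indent=2))
```

Using an absolute script path avoids depending on the working directory the host happens to launch the process from.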

MCP Ecosystem

The MCP ecosystem has grown rapidly since its introduction, with clients and servers available for various purposes:

MCP Clients

- Claude Desktop: Anthropic’s primary client implementation

- Cursor: AI-powered code editor with MCP support

- Zed: A code editor with MCP integration

- Sourcegraph Cody: Code intelligence platform with MCP support

- Frameworks: Including Firebase Genkit, LangChain adapters, and more

MCP Servers

- Reference Servers: PostgreSQL, Slack, GitHub

- Official Integrations: Stripe, JetBrains, Apify

- Community Servers: Discord, Docker, HubSpot, and many more

Comparing MCP to Alternative Approaches

MCP improves upon earlier approaches like ChatGPT plugins or custom integrations by providing an open, standardized protocol that works across different AI models and vendors.

Real-World Applications

MCP enables sophisticated AI applications across various domains:

- Software Development: AI assistants that can understand codebases, run tests, and commit changes

- Data Analysis: LLMs that can directly query databases and visualize results

- Customer Support: AI systems that access knowledge bases and CRM data for context-aware responses

- Business Process Automation: Agents that coordinate across multiple business systems

- Content Creation: AI tools that can access media libraries and content management systems

Conclusion

Model Context Protocol represents a significant advancement in how AI systems interact with external tools and data. By standardizing these interactions, MCP reduces the complexity of building AI applications that can access real-world information and take meaningful actions.

As the ecosystem continues to grow, we can expect MCP to become an essential part of the AI infrastructure, enabling more capable, context-aware AI applications that seamlessly connect with the systems where data lives.

For developers looking to get started with MCP, the open-source repositories, SDKs in multiple languages, and growing collection of pre-built servers provide a solid foundation. Whether you’re building an AI-powered IDE, enhancing a chat interface, or creating custom AI workflows, MCP offers a structured, scalable approach to connecting your AI application with the context it needs.