Imagine an AI assistant helping a software team debug an issue. GitHub Copilot might suggest fixes based on the code visible in the editor, but what if the root cause is buried in a legacy bug-tracking system or in a document stored in an outdated repository? Without direct access to those systems, the AI can't provide a complete solution.

Consider another example: a customer support chatbot helping an agent resolve a customer query. The chatbot can only pull data from the CRM it's integrated with. But what if the answer requires product manuals from a knowledge base, order history from an ERP system, or troubleshooting guides from a cloud storage platform?

Context Management: The Biggest Headache in 2024

Large Language Models (LLMs) are everywhere: powering chatbots, crunching data, and supporting decision-making. But if you've ever tried scaling one in an enterprise setting, you've probably hit a few walls.

Context management is one of the biggest headaches. Keeping track of all the moving parts (user inputs, historical data, and domain-specific knowledge) gets messy fast. The real challenge for AI systems is reaching important data that is scattered across different platforms and systems or locked away in older technologies. This is exactly the problem the Model Context Protocol (MCP) is designed to solve.

How MCP Solves This Problem

Unlike Copilot, which helps users within a specific environment, MCP focuses on breaking down barriers between systems so AI can access the information it needs, no matter where it's stored. With that access, AI applications can give more accurate, better-grounded answers and carry out tasks that span several systems, which benefits businesses and users alike.

MCP Is Like USB-C

MCP is like the USB-C port of AI systems: a universal connector that works everywhere. Just as USB-C replaced a confusing array of charging cables with one standard that fits all devices, the Model Context Protocol (MCP) replaces custom-built integrations between AI systems and data sources with a single standard interface.
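A quick way to see why a shared standard matters is to count connectors. The sketch below assumes that, without a standard, every AI application needs its own custom connector for every data source, while with MCP each application implements one MCP client and each data source is exposed by one MCP server. The counts are illustrative, not measurements.

```python
def connectors_needed(apps: int, sources: int) -> tuple[int, int]:
    """Return (point-to-point connectors, MCP clients + servers) for the given counts."""
    # Without a standard: one custom integration per (application, data source) pair.
    point_to_point = apps * sources
    # With MCP: one client per application plus one server per data source.
    with_mcp = apps + sources
    return point_to_point, with_mcp


if __name__ == "__main__":
    # Hypothetical example: 3 AI applications and 5 data sources.
    print(connectors_needed(3, 5))  # (15, 8)
```

The gap widens as either count grows, which is the practical point of the USB-C comparison: adding a new data source means writing one server, not one connector per application.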

How Does MCP Work?

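The Model Context Protocol (MCP) is designed to handle complex context management in a consistent, efficient way. It defines a client-server protocol, built on JSON-RPC 2.0, that keeps Large Language Model (LLM) applications context-aware across tools and data sources: servers expose data and actions, clients such as Claude Desktop discover and use them, and servers can notify clients when the data they expose changes.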

Here’s a high-level breakdown of how it works:

MCP acts as a standardized middleware layer that connects LLMs with the external systems they rely on, such as databases, APIs, and other tools. This protocol layer addresses one of the biggest challenges in enterprise AI applications: managing the flow of context between the model and its environment.

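From a security perspective, MCP keeps the host application in control: each server exposes only the capabilities it declares, and the host can ask the user to approve a tool call before it runs. Fine-grained permissions and audit trails are enforced by the servers and the systems behind them; the Postgres server described below, for example, only allows read-only queries. For troubleshooting, servers can send log messages to the client, and tools such as the MCP Inspector help trace the messages exchanged between client and server.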

How Developers Benefit from MCP

For developers, MCP simplifies integration work with its SDK-first approach. Instead of creating custom integrations for each new tool or data source, developers can use standardized interfaces, saving time and effort.

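MCP connections are stateful sessions: the client and server negotiate capabilities once when the session starts and then exchange messages over a persistent transport, such as standard input/output or HTTP with Server-Sent Events. Because each data source sits behind its own server, you can add sources or move servers to other machines without changing the rest of the system, avoiding the bottlenecks common in custom-built, point-to-point integrations.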

By offering this comprehensive infrastructure, MCP transforms how LLM applications are developed and deployed. It allows teams to focus on building core features rather than wrestling with complex integration challenges, resulting in more robust and maintainable AI systems.

MCP also addresses key challenges such as standardization, interoperability, security, and scalability, providing a solid foundation for enterprise AI. It enables smoother workflows and connects assistants like Claude to a wide range of systems, saving time by streamlining processes.

As more teams adopt MCP, their hands-on experience will contribute to refining and improving the protocol. This collaboration will ensure MCP continues to evolve to meet the growing demands of AI workflows, while maintaining reliability and performance.

MCP Servers

The modelcontextprotocol/servers repository on GitHub is a collection of reference implementations for the Model Context Protocol (MCP), along with references to community-built servers and additional resources.

For each server in the list, the documentation shows how to update claude_desktop_config.json so that Claude Desktop runs the server as a Docker container on your local host.

MCP Namespace in Docker Hub

Link: https://hub.docker.com/u/mcp

MCP PostgreSQL Server

The MCP PostgreSQL server provides read-only access to PostgreSQL databases, enabling you to:

- Inspect database schemas.

- Execute read-only SQL queries.
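The read-only guarantee comes from how queries are executed rather than from inspecting the SQL text. As far as we can tell from the reference implementation, the Postgres server runs each query inside `BEGIN TRANSACTION READ ONLY` and then issues `ROLLBACK`. The sketch below shows the same idea with Python's built-in `sqlite3` module so it runs without a database server. SQLite has no read-only transaction mode, so it swaps in SQLite's `PRAGMA query_only` setting, which rejects any statement that would modify the database. The `products` table is hypothetical.

```python
import sqlite3


def run_read_only(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Run one statement with writes disabled, then discard any transaction it opened."""
    conn.execute("PRAGMA query_only = ON")  # stand-in for BEGIN TRANSACTION READ ONLY
    try:
        return conn.execute(sql).fetchall()
    finally:
        conn.rollback()  # mirrors the server's ROLLBACK: nothing from this call persists
        conn.execute("PRAGMA query_only = OFF")


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO products (name) VALUES ('Widget')")
    conn.commit()

    print(run_read_only(conn, "SELECT name FROM products"))  # [('Widget',)]
    try:
        run_read_only(conn, "DELETE FROM products")
    except sqlite3.OperationalError as exc:
        print("rejected:", exc)  # writes fail while query_only is on
```

Enforcing read-only access in the database session, instead of filtering SQL strings, also catches writes hidden inside functions or multi-statement input that a text check could miss.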

Step 1: Prepare Your Environment

Prerequisites:

- Install Docker Desktop

- Node.js and NPM (for npx usage): Install Node.js from nodejs.org.

- PostgreSQL Database: Ensure you have a PostgreSQL database running. This guide uses the database that runs as part of the Product Catalog sample app:

```shell
git clone https://github.com/dockersamples/catalog-service-node
cd catalog-service-node
docker compose up -d
yarn install
yarn dev
```

- Claude Desktop App (optional): Download if you plan to use it as the client.
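Before wiring up MCP, it can save time to confirm that the database is actually accepting connections. This small check only opens a TCP connection; it does not authenticate or run SQL. It assumes the sample app's PostgreSQL is published on `localhost:5432`, the port used in the connection strings in the following steps.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


if __name__ == "__main__":
    if port_open("localhost", 5432):
        print("PostgreSQL port is reachable")
    else:
        print("Nothing is listening on localhost:5432; is `docker compose up -d` running?")
```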

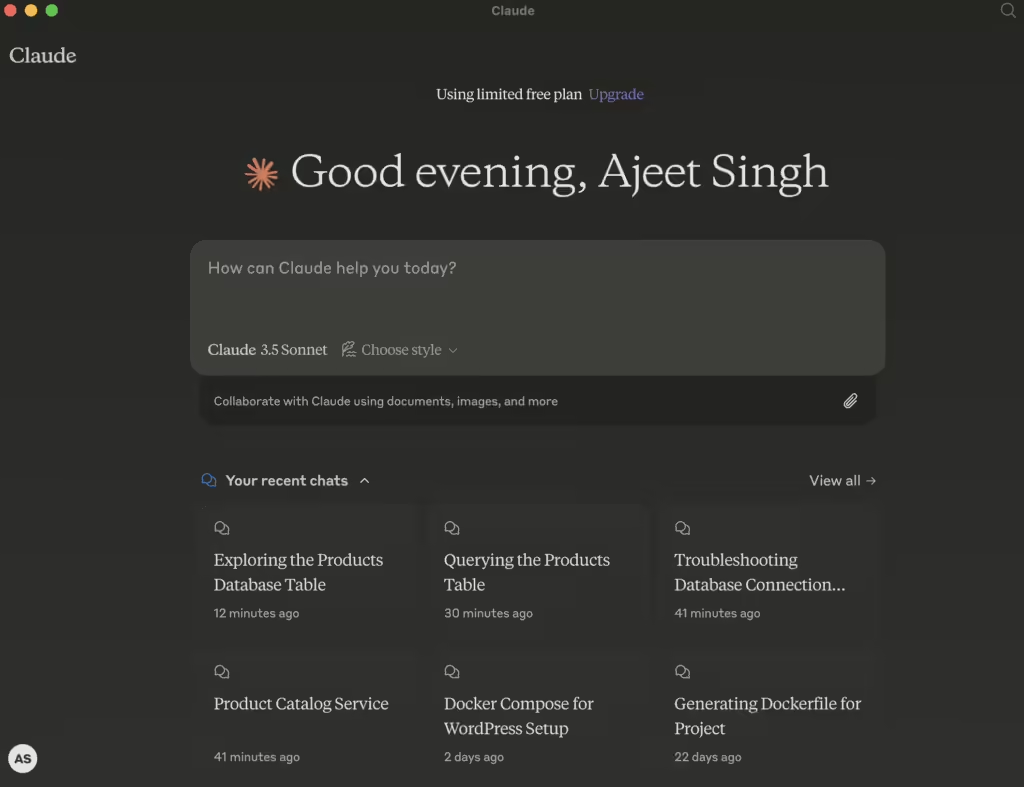

At this point, Claude Desktop doesn't show any MCP servers in its UI.

Step 2: Run the MCP PostgreSQL Server with Docker

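- Start the Server Using Docker

Use the following command to start the server. The `-i` flag keeps standard input open, because the server exchanges MCP messages over stdin and stdout. On Docker Desktop, `host.docker.internal` resolves to your host machine, so the container can reach the database listening on port 5432 on your host:

```shell
docker run -i --rm mcp/postgres postgresql://postgres@host.docker.internal:5432/catalog
```

- Add Authentication (Optional)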

If your database requires a username and password, include them in the URL:

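```shell
docker run -i --rm mcp/postgres postgresql://user:password@host:5432/catalog
```

Percent-encode any special characters, such as `@` or `:`, in the username or password.

Step 3: Configure Claude Desktop to Use the PostgreSQL Server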

If you're running Claude Desktop on macOS, you can configure it by creating the file `~/Library/Application Support/Claude/claude_desktop_config.json`.

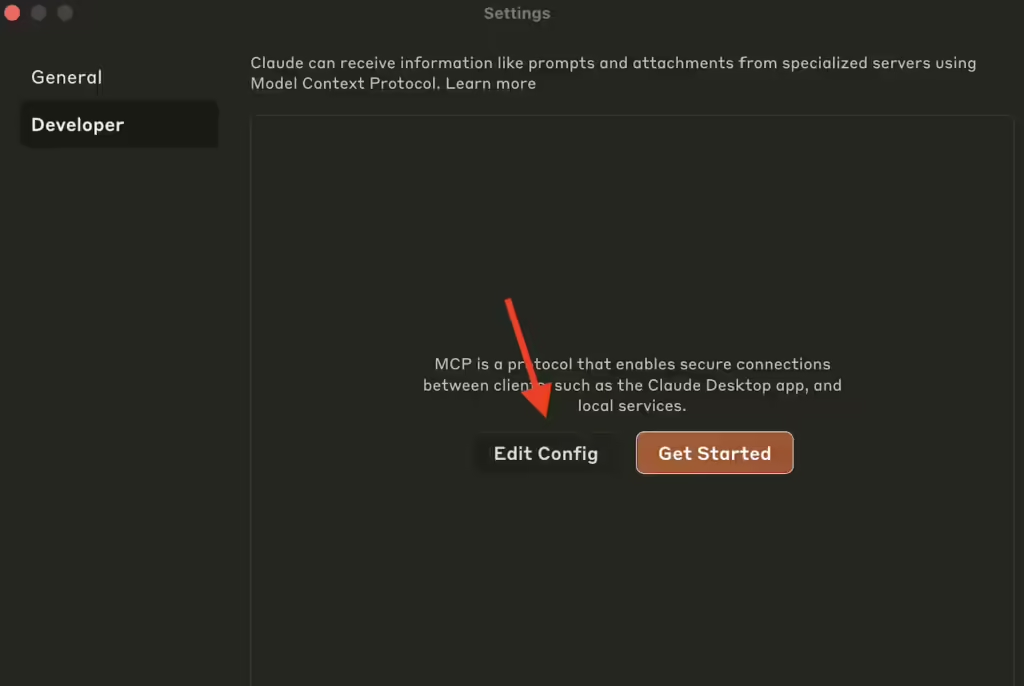

Alternatively, edit claude_desktop_config.json directly from Claude Desktop > Settings > Developer.

Open the file in a text editor (Xcode is used here) and add the following to the "mcpServers" section.

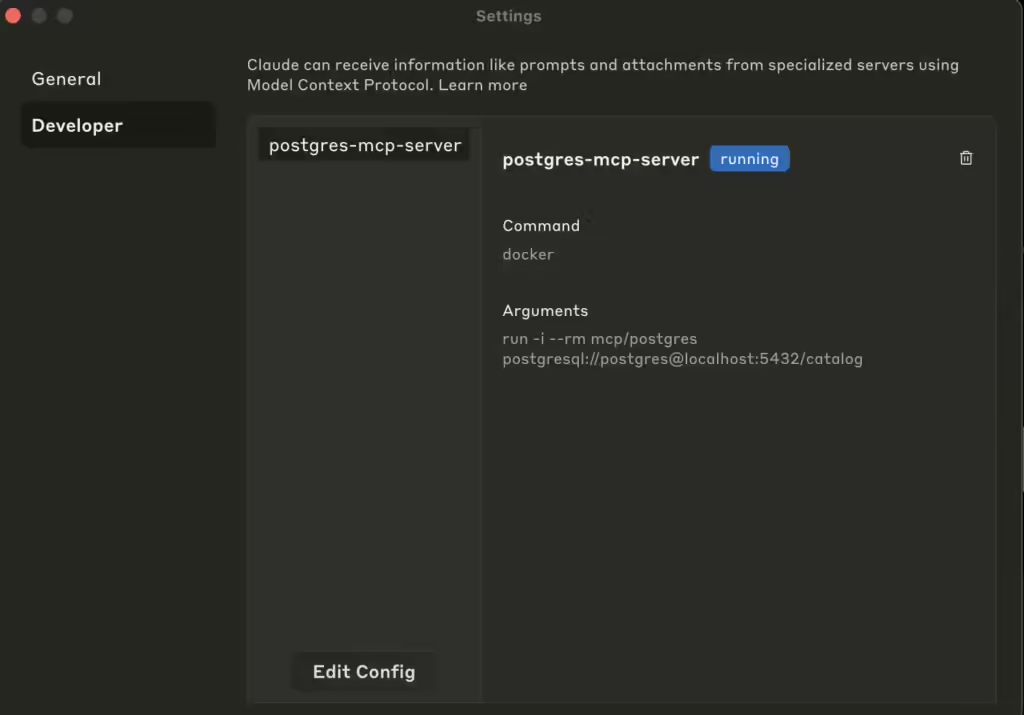

For Docker:

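```json
{
  "mcpServers": {
    "postgres-mcp-server": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "mcp/postgres",
        "postgresql://postgres@host.docker.internal:5432/catalog"
      ]
    }
  }
}
```

Use `host.docker.internal` rather than `localhost` in the connection string: inside the container, `localhost` refers to the container itself, not to your Mac.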

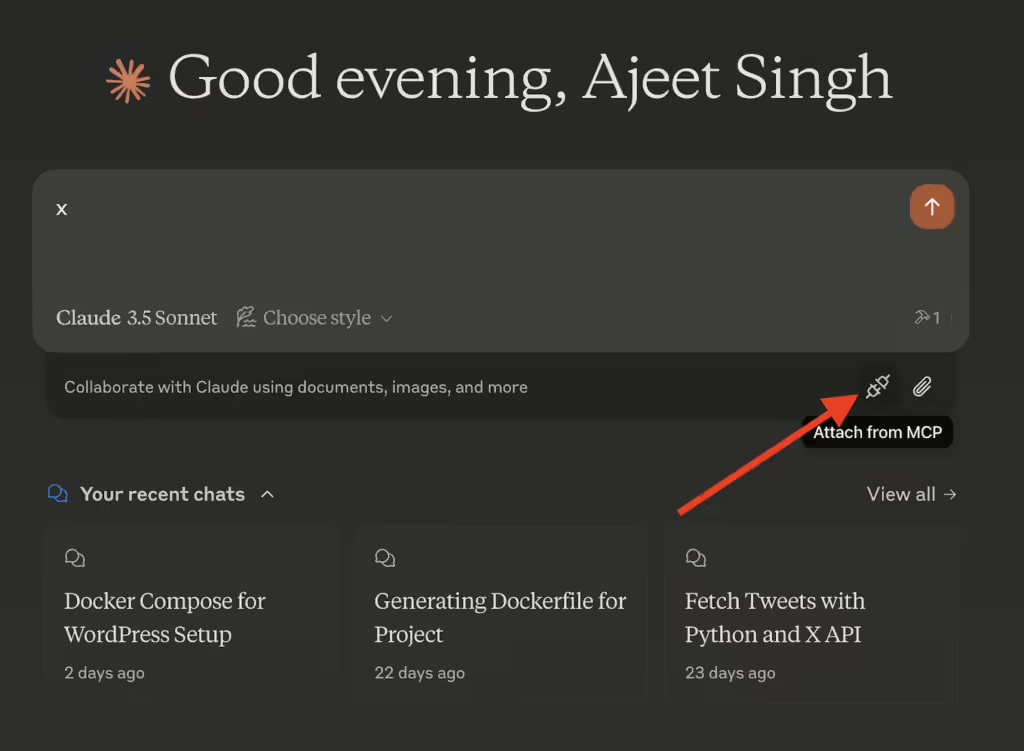

Restart Claude Desktop by quitting it completely and opening it again from Applications. This time, you will see an icon that says “Attach from MCP”.

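Claude Desktop starts the server container itself, using the command from the config file. To confirm that the image runs on its own, you can also start the same command manually; it then waits for MCP messages on standard input:

```shell
docker run -i --rm mcp/postgres postgresql://postgres@host.docker.internal:5432/catalog
```

Click the icon and you'll find the installed MCP servers.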
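You can also check a server from a script instead of Claude Desktop. The sketch below launches any stdio MCP server command, performs the `initialize` handshake, and returns the tool names from `tools/list`. It uses only the standard library, reads one JSON object per line as the stdio transport specifies, and does minimal error handling; the client name and protocol version string are assumptions.

```python
import json
import subprocess


def list_tools(command: list[str]) -> list[str]:
    """Launch a stdio MCP server, run the handshake, and return its tool names."""
    proc = subprocess.Popen(command, stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True)

    def send(msg: dict) -> None:
        proc.stdin.write(json.dumps(msg) + "\n")  # one JSON object per line
        proc.stdin.flush()

    def receive(request_id: int) -> dict:
        for line in proc.stdout:  # skip notifications until our reply arrives
            if line.strip():
                msg = json.loads(line)
                if msg.get("id") == request_id:
                    return msg
        raise RuntimeError("server exited before replying")

    try:
        send({"jsonrpc": "2.0", "id": 1, "method": "initialize", "params": {
            "protocolVersion": "2024-11-05",
            "capabilities": {},
            "clientInfo": {"name": "tool-lister", "version": "0.1.0"},
        }})
        receive(1)  # wait for the server's capabilities before continuing
        send({"jsonrpc": "2.0", "method": "notifications/initialized"})
        send({"jsonrpc": "2.0", "id": 2, "method": "tools/list"})
        return [tool["name"] for tool in receive(2)["result"]["tools"]]
    finally:
        proc.stdin.close()  # closing stdin lets a stdio server shut down on its own
        try:
            proc.wait(timeout=5)
        except subprocess.TimeoutExpired:
            proc.kill()
            proc.wait()
```

For this setup you would pass the same argument list as in claude_desktop_config.json, for example `list_tools(["docker", "run", "-i", "--rm", "mcp/postgres", "postgresql://postgres@host.docker.internal:5432/catalog"])`, and expect the Postgres server's `query` tool to appear.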

Step 4: Inspect Table Schemas and Execute Queries

The server provides the following capabilities:

- Table Schemas:

Access table schema information at `postgres://<host>/<table>/schema`.

It includes:

- Column names.

- Data types.

- Read-Only Queries:

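Execute read-only SQL queries using the `query` tool, which takes a single `sql` argument. When Claude decides to run a query, the client sends a JSON-RPC `tools/call` request like this:

```json
{
  "jsonrpc": "2.0",
  "id": 1,
  "method": "tools/call",
  "params": {
    "name": "query",
    "arguments": { "sql": "SELECT * FROM my_table LIMIT 10;" }
  }
}
```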
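Claude Desktop issues these requests for you, but if you script against the server directly, two small helpers cover this step. The first builds a `resources/read` request for a table's schema, following the `postgres://<host>/<table>/schema` pattern (in practice, copy the exact URIs the server returns from `resources/list`). The second unpacks a `tools/call` response. It assumes, as the reference Postgres server appears to do, that the rows come back as a JSON array inside `text` content items; other servers may format results differently. The `products` table is hypothetical.

```python
import json


def schema_request(request_id: int, host: str, table: str) -> dict:
    """JSON-RPC request asking the server for one table's column names and types."""
    uri = f"postgres://{host}/{table}/schema"
    return {"jsonrpc": "2.0", "id": request_id, "method": "resources/read", "params": {"uri": uri}}


def rows_from_query_result(response: dict) -> list[dict]:
    """Extract rows from a tools/call response whose text content holds a JSON array."""
    if "error" in response:  # protocol-level failure, e.g. unknown tool
        raise RuntimeError(response["error"].get("message", "query failed"))
    result = response["result"]
    text = "".join(item["text"] for item in result["content"] if item.get("type") == "text")
    if result.get("isError"):  # tool-level failure, e.g. a SQL error
        raise RuntimeError(text or "query failed")
    return json.loads(text)


if __name__ == "__main__":
    print(schema_request(2, "localhost", "products"))
    # A hand-written response in the shape assumed above, not real server output.
    sample = {"jsonrpc": "2.0", "id": 3, "result": {
        "content": [{"type": "text", "text": '[{"id": 1, "name": "Widget"}]'}],
        "isError": False,
    }}
    print(rows_from_query_result(sample))
```

Checking both the JSON-RPC `error` field and the result's `isError` flag matters because MCP reports protocol failures and tool failures differently.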

Conclusion

The Model Context Protocol (MCP) offers a powerful framework for seamlessly integrating server-side capabilities with AI-driven workflows. By providing standardized tools and resources, MCP empowers developers to interact with various data sources and systems efficiently, whether it’s through query execution, schema inspection, or creating custom server implementations. Its flexibility ensures a streamlined approach for enhancing the functionality of AI applications like Claude Desktop and beyond.

The Postgres MCP Server, in particular, exemplifies the versatility of MCP by enabling read-only access to PostgreSQL databases. It simplifies data exploration and analysis by exposing schemas and allowing read-only SQL queries, all while protecting database integrity by running each query inside a read-only transaction. Whether you deploy it via Docker or NPX, the Postgres MCP server is a straightforward yet robust solution for integrating PostgreSQL into your AI and data-centric workflows.

By leveraging the tools and best practices provided by MCP, developers can enhance AI applications with actionable data insights while maintaining a secure and efficient architecture. Start exploring the capabilities of MCP today and unlock the potential of your PostgreSQL databases with ease! 🚀