According to a recent Gartner report, “By the end of 2024, 75% of organizations will shift from piloting to operationalizing artificial intelligence (AI), driving a 5x increase in streaming data analytics infrastructure.” The report, Top 10 Trends in Data and Analytics, 2020, further states, “Getting AI into production requires IT leaders to complement DataOps and ModelOps with infrastructures that enable end-users to embed trained models into streaming-data infrastructures to deliver continuous near-real-time predictions.”

What’s driving this tremendous shift to AI? The accelerated growth in the size and complexity of distributed data needed to optimize real-time decision making.

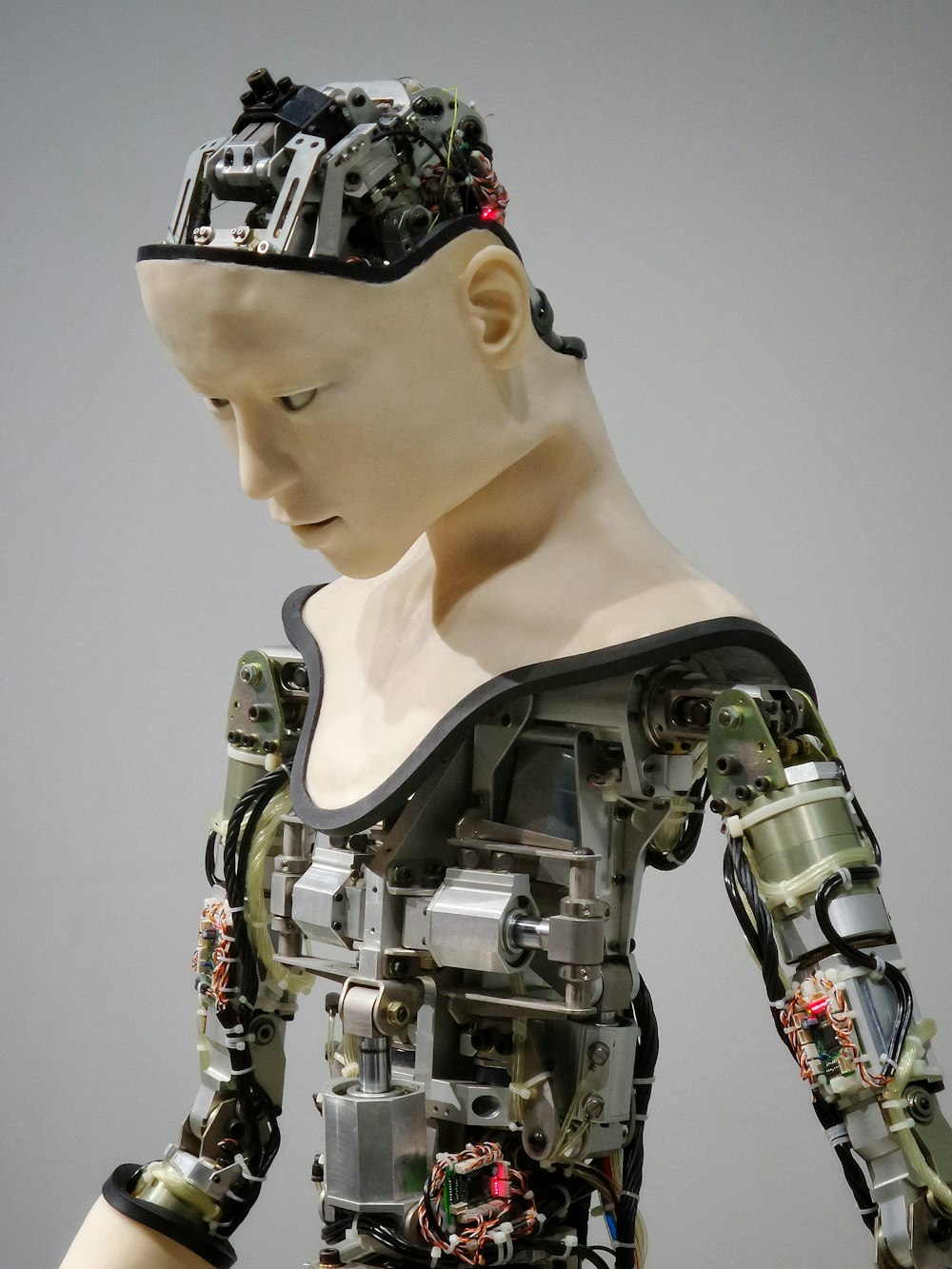

Realizing AI’s value, organizations are applying it to business problems by adopting intelligent applications and augmented analytics and exploring composite AI techniques. AI is finding its way into all aspects of applications, from AI-driven recommendations to autonomous vehicles, from virtual assistants, chatbots, and predictive analytics to products that adapt to the needs and preferences of users.

Surprisingly, the hardest part of AI is often not the artificial intelligence itself but dealing with the data behind it. The accelerated growth of data captured from sensors in internet of things (IoT) solutions, together with the growth of machine learning (ML) capabilities, is yielding unparalleled opportunities for organizations to drive business value and create competitive advantage. That’s why ingesting data from many sources and deriving actionable insights or intelligence from it has become a prime objective of AI-enabled applications. In this blog post, we will discuss the AI data pipeline and the challenges of getting AI into real-world production.

From 30,000 feet up, a great AI-enabled application might look simple. But a closer look reveals a complex data pipeline. To understand what’s really happening, let’s break down the various stages of the AI data pipeline.

The first stage is data ingestion. Data ingestion is all about identifying and gathering the raw data from multiple sources, including the IoT, business processes, and so on.

The gathered data is typically unstructured and not necessarily in the correct form to be processed, so you also need a data-preparation stage. This is where the pre-processing of data—filtering, construction, and selection—takes place. Data segregation also happens at this stage, as subsets of data are split in order to train the model, test it, and validate how it performs against the new data.
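To make the preparation stage concrete, here is a minimal sketch of filtering followed by data segregation. The record layout (`sensor_id`, `reading`), the function name `prepare_and_split`, and the 70/15/15 split are all assumptions chosen for illustration, not values from any particular product or dataset.

```python
import random


def prepare_and_split(records, train_pct=70, val_pct=15, seed=0):
    """Filter incomplete records, then split into train/validation/test sets.

    Percentages are integers so the subset sizes are computed exactly.
    """
    # Filtering: drop any record that has a missing (None) field.
    clean = [r for r in records if all(v is not None for v in r.values())]

    # Shuffle with a fixed seed so the split is reproducible.
    rng = random.Random(seed)
    rng.shuffle(clean)

    n_train = len(clean) * train_pct // 100
    n_val = len(clean) * val_pct // 100

    train = clean[:n_train]
    val = clean[n_train:n_train + n_val]
    test = clean[n_train + n_val:]  # whatever remains is held out for testing
    return train, val, test


# Hypothetical raw data: every tenth sensor reading is missing.
records = [
    {"sensor_id": i, "reading": None if i % 10 == 0 else i * 0.5}
    for i in range(100)
]

train, val, test = prepare_and_split(records)
print(len(train), len(val), len(test))
```

Keeping the three subsets disjoint is what lets the later stages measure how the model behaves on data it has not seen during training.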

Next comes the model training phase. This includes incremental training of conventional neural network models, which generates trained models that are deployed by the model serving layer to deliver inferences or predictions. The training phase is iterative. Once a trained model is generated, it must be tested for inference accuracy and then re-trained to improve that accuracy.
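The iterative train, test, and retrain cycle can be sketched as a simple loop. This is only an illustration under stated assumptions: a one-variable linear model trained by gradient descent stands in for a neural network, the data is synthetic, and the names `train_round`, `mse`, and `target_error` are hypothetical.

```python
def train_round(w, b, data, lr=0.1, epochs=50):
    """One incremental training round: gradient descent on mean squared error."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(w, b, data):
    """Mean squared error of the model on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Synthetic data following y = 3x + 1 (an assumption for this sketch).
train_data = [(i / 10, 3 * (i / 10) + 1) for i in range(20)]
test_data = [(i / 10 + 0.05, 3 * (i / 10 + 0.05) + 1) for i in range(20)]

w, b = 0.0, 0.0          # untrained model
target_error = 1e-3      # accuracy goal on held-out data
history = []

for round_no in range(1, 101):
    # Continue training from the current weights (incremental training).
    w, b = train_round(w, b, train_data)
    # Test the trained model on data it was not trained on.
    err = mse(w, b, test_data)
    history.append(err)
    if err <= target_error:
        break  # accurate enough to hand to the serving layer

print(f"stopped after {round_no} round(s), test MSE = {history[-1]:.6f}")
```

Each pass through the loop resumes from the previous weights rather than starting over, which is the sense in which the retraining is incremental.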

In a nutshell, the fundamental building blocks of artificial intelligence span everything from ingest, through several stages of data classification, transformation, and analytics, to machine learning and deep learning model training, and then retraining through inference to yield increasingly accurate insights.

AI pipeline characterization and performance requirements

The AI data pipeline has varying characteristics and performance requirements. The data can be characterized by variety, volume, and disparity. The ingest phase must support fast, large-scale processing of the incoming data, while data quality is the primary focus of the data preparation phase. Both the training and inference phases are sensitive to model quality, data-access latency, response time, throughput, and data-caching capabilities of the AI solution.

In my next blog post, I will show how the AI data pipeline is used to build an object detection and analytics platform using edge devices and cloud-native applications.
