How to Fix “Can not push Image to DockerHub” Error in Docker

The “Can not push Image to DockerHub” error occurs when images cannot be pushed to DockerHub. This troubleshooting guide...

Is Node.js frontend or backend

Explore the powerful world of Node.js with this in-depth guide! Discover how it blurs the lines between frontend and...

Is Kubernetes Cloud or DevOps?

Explore how Kubernetes fits into the world of cloud and DevOps. Learn how this open-source platform streamlines containerized application...

Hugo vs MkDocs: Choosing the Right Static Site Generator

In the world of web development, static site generators (SSGs) have become a popular choice for building clean, lightning-fast...

Automating DevOps Workflows with Jenkins and Ansible

Discover the power of Jenkins and Ansible in streamlining DevOps processes. Learn how to integrate these tools effectively for...

Proxies and Encryption: Enhancing Online Privacy and Security

The digital age has made strong online privacy and security measures more necessary than ever before. Due to the...

Fix “DNS Server Not Responding” Error [7 Working Fixes]

No one likes being denied access to the internet. One of the worst causes is the “DNS Server Not...

Why Runtime Protection Is Key for Defending Against Zero-Day Vulnerabilities

Zero-day exploits pose a serious threat to organizations. Learn how runtime protection can effectively defend against these vulnerabilities and...

All Stories

The Difference Between Citizen Developers and Professional Developers: Explained with Lego Analogy

Imagine you’re building a Lego castle. You have all the bricks you need, and you can put them together however you...

WSL Mirrored Mode Networking in Docker Desktop 4.26: Improved Communication and Development Workflow

Discover the new WSL mirrored mode networking in Docker Desktop 4.26! Simplify communication between WSL and Docker containers for improved development...

December Newsletter

Welcome to the Collabnix Monthly Newsletter! We bring you a curated list of the latest community tutorials, sample apps, events, and videos....

How To Run Containerd On Docker Desktop

Docker Desktop has become a ubiquitous tool for developers and IT professionals, offering a convenient and accessible platform for working with...

MindsDB Docker Extension: Build ML powered applications at a much faster pace

Imagine a world where anyone, regardless of technical expertise, can easily harness the power of artificial intelligence (AI) to gain insights...

Collabnix Monthly Newsletter – December 2023

Welcome to the Collabnix Monthly Newsletter! Get the latest community-curated tutorials, events, and more. Submit your own article or video for...

Ways to Pass Environment Variables to Docker Containers: -e Flag, .env File, and Docker Compose | Docker

Environment variables are the essential tools of any programmer’s toolkit. They hold settings, configurations, and secrets that shape how our applications...

Understanding HR Analytics and The Business Benefits It Brings

Business leaders made million-dollar decisions about their workforce for decades while flying blind. Hiring strategies, productivity investments, and retention programs –...

The Importance of Docker Container Backups: Best Practices and Strategies

Docker containers provide a flexible and scalable way to deploy applications, but ensuring the safety of your data is paramount. In...

Mastering MySQL Initialization in Docker: Techniques for Smooth Execution and Completion

Docker has revolutionized the way we deploy and manage applications, providing a consistent environment across various platforms. When working with MySQL...

Continuous Integration Unveiled: Building Quality Into Every Code Commit

Software development is a realm where innovation thrives and code evolves. Here, only one key player stands out. Do you know...

AI-powered Medical Document Summarization Made Possible using Docker GenAI Stack

As medical advisors in legal cases, sifting through mountains of complex medical documents is a daily reality. Unraveling medical jargon, deciphering...

How to Capture Screen Using Linux

Most content creators wonder how to make a video in 5 minutes and where to find reliable software for high-quality screen...

IoT Applications with SQL Server

In the dynamic realm of digital innovation, the Internet of Things (IoT) is a technological marvel that is reshaping our interaction...

Docker GenAI Stack on Windows using Docker Desktop

The Docker GenAI Stack repository, with nearly 2000 GitHub stars, is gaining traction among the data science community. It simplifies the...

How to change the default Disk Image Installation directory in Docker Desktop for Windows

Docker Desktop is a powerful tool that allows developers to build, ship, and run applications in containers. By default, Docker stores...

Getting Started With Containerd 2.0

Discover the benefits of Containerd, a software that runs and manages containers on Linux and Windows systems. Join our Slack Community...

TestContainers vs Docker: A Tale of Two Containers

Update: AtomicJar, a company behind testcontainers, is now a part of Docker Inc. In the vibrant landscape of software development, containers...

How to Retrieve a Docker Container’s IP Address: Methods, Tools, and Scenarios

Docker, the ubiquitous containerization platform, has revolutionized the way we develop, deploy, and scale applications. One common challenge developers and administrators...

Docker ENTRYPOINT and CMD : Differences & Examples

Docker revolutionized the way we package and deploy applications, allowing developers to encapsulate their software into portable containers. Two critical Dockerfile...

Understanding the Relationship Between Machine Learning (ML), Deep Learning (DL), and Generative AI (GenAI)

Discover the intersection of Machine Learning (ML), Deep Learning (DL), and Generative AI (GenAI). Learn how GenAI leverages the strengths of...

PHP and Docker Init – Boost Your Development Workflow

Introducing docker init: the revolutionary command that simplifies Docker life for developers of all skill levels. Say goodbye to manual configuration...

How to Install and Run Ollama with Docker: A Beginner’s Guide

Let’s create our own local ChatGPT. In the rapidly evolving landscape of natural language processing, Ollama stands out as a game-changer,...

What’s New in Kubernetes 1.29: PersistentVolume Access Mode, Node Volume Expansion, KMS Encryption, Scheduler Optimization, and More

Kubernetes 1.29 draws inspiration from the intricate art form of Mandala, symbolizing the universe’s perfection. This theme reflects the interconnectedness of...

How To Automatically Update Docker Containers With Watchtower

Docker is a containerization tool used for packaging, distributing, and running applications in lightweight containers. However, manually updating containers across multiple...

Essential DevOps Brand Assets for Building a Strong Identity

Building a robust brand identity is crucial for any business, and for those immersed in the DevOps culture, maintaining consistency across...

Containerd Vs Docker: What’s the difference?

Discover the differences between Docker and containerd, and their roles in containerization. Learn about Docker as a versatile container development platform.

5 Benefits of Docker for the Finance and Operations

The dynamic world of finance and operations thrives on agility, efficiency, and resilience. Enter Docker, the game-changing containerization technology poised to...

Top 5 Kubernetes Backup and Storage Solutions: Velero and More

In a sample Kubernetes cluster as shown below, where you have your microservice application running and an elastic-search database also running...

Docker Scout for Your Kubernetes Cluster

Docker Scout is a collection of secure software supply chain capabilities that provide insights into the composition and security of container...

Collabnix: Docker, Kubernetes, and Cloud-Native Collaboration

In today’s technology-driven world, open-source projects have become the cornerstone of innovation and collaboration. Collabnix, a vibrant community of developers, DevOps...

Containerization Revolution: How Docker is Transforming SaaS Development

In the ever-evolving landscape of software development, containerization has emerged as a revolutionary force, and at the...

Effortlessly manage Apache Kafka with Docker Compose: A YAML-powered guide!

Effortlessly Manage Kafka with Docker Compose: A YAML-Powered Guide!

Do You Need Expert Skills to Edit Photos Using CapCut Online Editor?

Many people worry about how to make viral transformations to photos because they do not have any expert photo editing skills....

How to Become a CNCF Ambassador and Join the Cloud-Native Community

The Cloud Native Computing Foundation (CNCF) has become a cornerstone in the world of cloud-native technologies, fostering innovation and collaboration within...

Choosing the Perfect Kubernetes Playground: A Comparison of PWD, Killercoda, and Other Options

Kubernetes is a powerful container orchestration platform, but its complexity can be daunting for beginners. Luckily, several online playgrounds offer a...

What is Containerd and what problems does it solve

Containerd is the software responsible for managing and running containers on a host system; in other words,...

6 Ways to Adopt AI into Your Business

The concept of intelligent machines has captivated human imagination for centuries. While Greek myths depicted mechanical men...

How to run Docker-Surfshark container for Secure and Private Internet Access

To some of you, the idea of running a VPN inside a docker container might seem foreign,...

Optimizing Kubernetes for IoT and Edge Computing: Strategies for Seamless Deployment

The convergence of Kubernetes with IoT and Edge Computing has paved the way for a paradigm shift in how we manage...

The Role of Employee Onboarding Software in Talent Pipelines

Employee onboarding plays a vital role in attracting and retaining talent for organizations. It goes beyond getting employees up to speed;...

5 Tech Trends that Will Impact the Financial Services Sector

The financial services industry is experiencing a swift evolution, with intelligent automation, AI-driven advisory, and asset management...

Ollama: A Lightweight, Extensible Framework for Building Language Models

With over 1,000,000 Docker pulls, Ollama is a highly popular, lightweight, extensible framework for building and running language...

Docker Desktop 4.25.0: What’s New in Containerd

This is a series of blog posts that discusses containerd feature support in all Docker Desktop releases....

Kubernetes Workshop for Beginners: Learn Core Concepts and Hands-On Exercises | Register Now

Are you new to Kubernetes and looking to gain a solid understanding of its core concepts? Do...

DevOps and Paper Writing: How to Streamline Workflows for Technical Documentation

In the fast-paced world of technology, the need for clear and concise technical documentation has become paramount. Whether it’s user manuals,...

Bare Metal vs. VMs for Kubernetes: Performance Benchmarks

In the realm of container orchestration, Kubernetes reigns supreme, but the question of whether to deploy it on bare metal or...

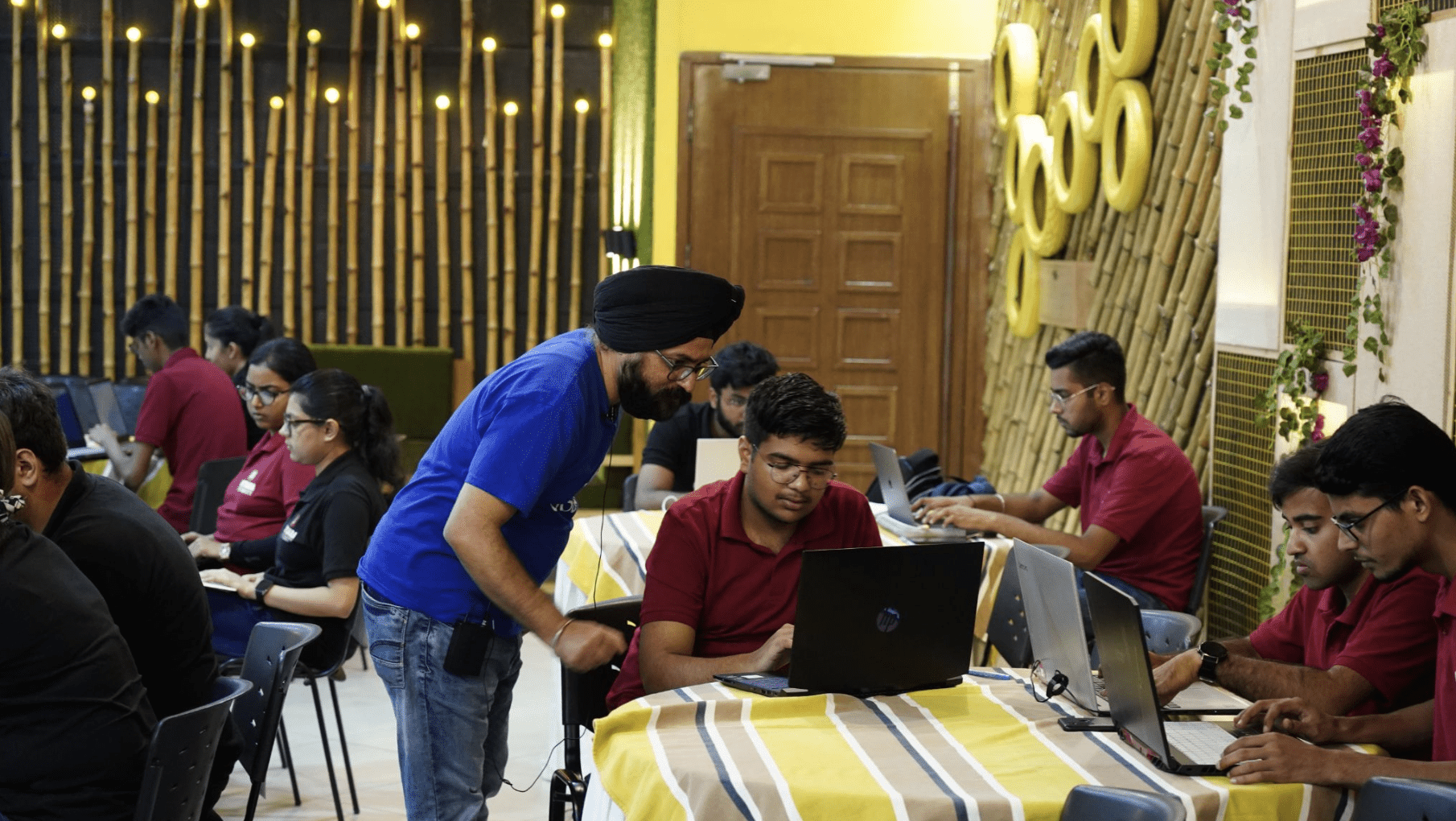

Cloud and DevOps 2.0 x Docker Community Meetup

Join us for an exciting in-person meetup at Bengaluru as we bring everyone together to participate, collaborate, and share their knowledge...

Top 5 Storage Provider Tools for Kubernetes

As Kubernetes keeps progressing, the need for storage management becomes crucial. The chosen storage provider tools in...

Navigating the Future: Emerging Trends in Cloud Technologies for Developing Cloud Applications

The latest advancements in technology have come a long way and have transformed business and its traditional ways. A forward-thinking step...

Understanding LLM Hallucination: Implications and Solutions for Large Language Models

Discover the world of large language models (LLMs) and their intriguing phenomenon of hallucination in artificial intelligence. Explore more in this...

Translate a Docker Compose File to Kubernetes Resources using Kompose

Docker Compose is a tool for defining and running multi-container Docker applications. It is a popular choice for developing and deploying...

The Internet of Things in Education: Definition, Role and Benefits

The power of the Internet in transforming the world is undeniable. The Internet of Things (IoT), which describes the connection of...

Unlocking Scalability and Resilience: Dapr on Kubernetes

Modernizing applications demands a new approach to distributed systems, and Dapr (Distributed Application Runtime) emerges as a robust solution. Dapr simplifies...

Top 5 Machine Learning Tools For Kubernetes

Kubernetes is a platform that enables you to automate the process of deploying, scaling, and managing applications...

Foundation Vs Generative AI Model Demystified

In the realm of artificial intelligence, the terms "foundation AI model" and "generative AI model" are often used interchangeably, leading to...

Using AI To Fund Your Startup

Anyone would agree that the world has made more progress with artificial intelligence (AI) in the past year than in the...

Understanding the Role of Knowledge Management in an Organization

Knowledge management is akin to being the guide of shared intelligence within an organization. It goes beyond just collecting data; it’s...

Top 5 Alert and Monitoring Tools for Kubernetes

Kubernetes has emerged as the go-to choice for running applications in containers. It brings advantages compared to traditional deployment methods, like...

What is Python development: 7 major use cases

In the vast realm of programming languages, Python stands out as a powerhouse, renowned for its simplicity, versatility, and widespread adoption....

5 Most Interesting Announcements from Kubecon & CloudNativeCon North America 2023

KubeCon + CloudNativeCon North America 2023 was held in Chicago, Illinois, from November 6–9, 2023. It is the flagship conference of...

Top 5 Cluster Management Tools for Kubernetes in 2023

Kubernetes, also known as K8s, is a platform that allows you to efficiently manage your containerized applications across a group of...

10 Tips for Right Sizing Your Kubernetes Cluster

Kubernetes has become the de facto container orchestration platform for managing containerized applications at scale. However, ensuring that your Kubernetes cluster...

Top 5 Generative AI Challenges and Possible Solutions

Generative AI (GenAI) is a rapidly advancing field with the potential to revolutionize various industries and aspects of our lives. However,...

DevOps in the Real World: Making the World a Better Place

DevOps, short for Development and Operations, is not just a buzzword in the tech industry; it’s a transformative approach that's making...

Benefits and Projects of the Cloud Native Computing Foundation (CNCF)

The Cloud Native Computing Foundation (CNCF) is a nonprofit organization that fosters the development, adoption, and sustainability of cloud native software....

What are Large Language Models: Popularity, Use Cases, and Case Studies

Unveiling LLMs: A Glimpse into Their Popularity, Versatile Use Cases, and Real-World Case Studies

Kubecon + CloudNativeCon North America 2023: A Must-Attend Event for Kubernetes Users

Kubecon + CloudNativeCon North America 2023 is the world’s largest Kubernetes conference, and it’s back in Chicago, Illinois from November 6-9,...

Architecting Kubernetes clusters- how many should you have

There are different ways to design Kubernetes clusters depending on the needs and objectives of users. Some common cluster architectures include:...

Generative AI: What It Is, Applications, and Impact

Generative AI is a type of artificial intelligence that can create new and original content, chat responses, designs, synthetic data, or...

Getting Started with Docker Desktop on Windows using WSL 2

Docker Desktop and WSL are two popular tools for developing and running containerized applications on Windows. Docker Desktop is a Docker...

The Impact of Big Data Development on Industries

The term "big data" is usually associated with companies like Google and Amazon, but the impact of big data development on...

Optimising Production Applications with Kubernetes: Tips for Deployment, Management and Scalability

Kubernetes is a container orchestration platform that automates many of the manual processes involved in deploying, managing, and scaling containerized applications....

Deploying ArgoCD with Amazon EKS

ArgoCD is a tool that helps integrate GitOps into your pipeline. The first thing to note is that ArgoCD does not...

Step-by-Step Guide to Deploying and Managing Redis on Kubernetes

Redis is a popular in-memory data structure store that is used by many applications for caching, messaging, and other tasks. It...

Legal Tech AI: How AI revolutionized the Practice of Law

As technologies rapidly evolve and seep into all domains of our lives, even the traditionally analog industries such as law are...

Introduction to KEDA – Automating Autoscaling in Kubernetes

Embarking on KEDA: A Guide to Kubernetes Event-Driven Autoscaling

Using Kubernetes and Slurm Together

Slurm is a job scheduler that is commonly used for managing high-performance computing (HPC) workloads. Kubernetes is a container orchestration platform...

WebAssembly: The Hottest Tech Whiz making Devs Dance!

In the fast-paced world of technology, a new star is born, and it’s got developers around the globe shaking their booties...

Budgeting for Success: Integrating DevOps into AAA-Game Production

DevOps is a new way of thinking about the relationship between development and operations. It’s not just about automating deployment, but...

How to Integrate Docker Scout with GitLab

GitLab is a DevOps platform that combines the functionality of a Git repository management system with continuous integration (CI) and continuous...

How The Adoption of Open Source Can Impact Mission-Critical Environments

Open source software – any software that is freely available and shared with others – has quickly become a staple in...

Docker Init for Go Developers

Are you a Go developer who still writes Dockerfile and Docker Compose manually? Containerizing Go applications is a crucial step towards...

What is Kubesphere and what problem does it solve

As a Kubernetes engineer, you likely have little trouble navigating around a Kubernetes cluster. Setting up resources, observing pod logs, and...

What is Docker Compose Include and What problem does it solve?

Docker Compose is a powerful tool for defining and running multi-container Docker applications. It enables you to manage complex applications with multiple...

Using FastAPI inside a Docker container

Discover the power of FastAPI for Python web development. Learn about its async-first design, automatic documentation, type hinting, performance, ecosystem, and...

Leveraging Compose Profiles for Dev, Prod, Test, and Staging Environments

Explore how Docker Compose Profiles revolutionize environment management in containerization. Simplify your workflows with this game-changing feature! #Docker #Containerization

What is Hugging Face and why it is damn popular?

In the dynamic landscape of Natural Language Processing (NLP) and machine learning, one name stands out for its exceptional contributions and...

Docker Best Practices – Slim Images

Docker images are the building blocks of Docker containers. They are lightweight, executable packages of software that include everything needed to...

Docker vs Virtual Machine (VM) – Key Differences You Should Know

Let us understand this with a simple analogy. Virtual machines have a full OS with its own memory management installed with...

What a Career in Full Stack Development Looks Like

A career in full-stack development is both thrilling and dynamic. Full-stack developers wear many hats and are therefore invaluable to many...

Navigating Challenges in Cloud Migration with Consulting

Are you a business leader in the modern economy attempting to migrate data to cloud computing? Is it challenging for your...

The Best Paraphrasing Tools for Students in 2023

Plagiarism has made paraphrasing the immediate need of students these days. Submitting plagiarized assignments and papers will make you face excruciating...

OpenPubkey or SigStore – Which one to choose?

Container signing is a critical security practice for verifying the authenticity and integrity of containerized applications. It helps to ensure that...

What is Docker Compose Watch and what problem does it solve?

Quick Update: Docker Compose File Watch is no longer an experimental feature. I recommend that you either use the latest version...

Running Ollama 2 on NVIDIA Jetson Nano with GPU using Docker

Ollama is a rapidly growing development tool, with 10,000 Docker Hub pulls in a short period of time. It is a...

Future Trends in Retail Plan Software

The retail industry constantly evolves, driven by technological advancements and shifting consumer behaviors. To stay ahead of the curve, retailers must...

Getting Started with GenAI Stack powered with Docker, LangChain, Neo4j and Ollama

At DockerCon 2023, Docker announced a new GenAI Stack – a great way to quickly get started building GenAI-backed applications with...

Tech Tips for Students: Ace Research Papers

Academic life can be daunting, especially when it comes to writing research papers. However, in this technology-driven era, a plethora of...

10 Design Principles for Better Product Usability

When it comes to product usability, there are 10 key design principles that you should keep in mind when creating a...

Streamlining the Deal Making Process with Virtual Data Rooms

Today, we will look at the best data room providers and how they can optimize the business processes that take place...

CI/CD and AI Observability: A Comprehensive Guide for DevOps Teams

High-quality software solutions are now more important than ever in the fast-paced world of technology, especially where cloud infrastructure...

What is Resource Saver Mode in Docker Desktop and what problem does it solve?

Resource Saver mode is a new feature introduced in Docker Desktop 4.22 that allows you to conserve resources by reducing the...

How DevOps Skills Can Boost Job Opportunities for Students

In the rapidly evolving tech industry, acquiring relevant skills such as DevOps can significantly boost job opportunities for students. This skill...

5 Reasons to Like Linux: Beginner’s Introduction

Feeling a tad frustrated with the humdrum operating systems that have been holding you back? Or maybe you’re simply on the...

Docker for Data Science: Streamline Your Workflows and Collaboration

Data science is a dynamic field that revolves around experimentation, analysis, and model building. Data scientists often work with various libraries,...

Error message “cannot enable Hyper-V service” on Windows 10

Docker is a popular tool for building, running, and shipping containerized applications. However, some users may encounter the error message “cannot...

DockerCon 2023: Developers at the Heart of Container Innovation

Are you a developer ready for an immersive learning experience like no other? DockerCon 2023 is back, live and in person,...

🐳 Boost Your Docker Workflow: Introducing Docker Init for Python Developers 🚀

Are you a Python developer still building Dockerfile and Docker Compose files manually? If the answer is “yes,” then you’re definitely...

Install Redis on Windows in 2 Minutes

Redis, a powerful open-source in-memory data store, is widely used for various applications. While Redis is often associated with Linux, you...

Automating Docker Container Restarts Based on CPU Usage: A Guide

Effectively managing Docker containers involves monitoring resource consumption and automating responses to maintain optimal performance. In this guide, we’ll delve into...

How to Build and Containerise Sentiment Analysis Using Python, Twitter and Docker

Sentiment analysis is a powerful technique that allows us to gauge the emotional tone behind a piece of text. In today’s...

Skills and Qualifications of Brazilian Software Developers

This blog post will emphasize special abilities and qualifications that make Brazilian software developers pretty talented. Offering overviews of Brazil’s top...

Guide to Implementing DevOps Successfully in Your Organization

Digital transformation is becoming increasingly important as time changes. One effective approach to enhance software development and management is by implementing...

Smart Cities and Sports: Innovations in Stadium Management and Fan Engagement

Over the past decade, there has been a consistent decrease in fan attendance at live sporting events. The advancement of traditional...

Easy way to colorify Your Shell Prompt Experience on The Mac

Have you ever attended a developer conference and marveled at the colorful and eye-catching terminal prompts displayed by presenters? If you’ve...

User Data Privacy in Mobile App Development: A Guide to Best Practices and Legal Requirements

User data privacy is no longer just a good-to-have feature; it’s a necessity in mobile app development. With increasing cyber threats,...

How to Containerise a Large Language Model(LLM) App with Serge and Docker

Large language models (LLMs) are a type of artificial intelligence (AI) that are trained on massive datasets of text and code....

What is Docker Swarm and what problem does it solve?

Docker Swarm is a container orchestration tool built and managed by Docker, Inc. It is the native clustering tool for Docker....

Sidecar vs Init Containers: Which One Should You Use?

Sidecars have been a part of Kubernetes since its early days. They were first described in a blog post in 2015...

Guide to Different Types of Coding Languages: Your Source of Urgent Help in Programming Dilemmas

The path of a programming specialist starts at college when a student learns different types of coding languages. This could become...

How to Fix “Pods stuck in Terminating status” Error

Kubernetes, a powerful container orchestration platform, has revolutionized the deployment and management of containerized applications. However, as with any technology, it...

What is LangChain and Why it is damn popular? A Step-by-Step Guide using OpenAI, LangChain, and Streamlit

LangChain is an open-source framework that makes it easy to build applications using large language models (LLMs). It was created by...

Streamlining Success: How DevOps Powers Online Casino Games

Digital gambling is growing bigger thanks to its versatile game selection paired with excellent bonus opportunities. But what you may not...

Unlocking Global Business Opportunities: Multilingual Collaboration in the Tech Industry

As our world has become more diverse and interconnected than ever before, the ability to communicate effectively has never been more...

How User Verification Processes Are Accelerated by Automated ID Verification

Strong user verification procedures are now more critical than ever in our increasingly digital society, where online services and transactions are...

Large Language Models (LLMs) and Docker: Building the Next Generation Web Application

Large language models (LLMs) are a type of artificial intelligence (AI) that are trained on massive datasets of text and code....

Understanding How Search Engines Work

There are more than 20 search engines around the world, but Google rules them all, getting more than 89 billion visits...

How to use Stable Diffusion to create AI-generated images

The convergence of Artificial Intelligence (AI) and art has birthed captivating new horizons in creative expression. Among the innovative techniques, Stable...

How To Remove Search Marquis on a Mac?

Search Marquis is very similar to the Bing redirect virus; it’s actually an upgraded version. The problem with it is it...

Highlights of the Docker and Wasm Day Community Meetup Event

The Docker Bangalore and Collabnix (Wasm) communities converged at the Microsoft Reactor Office for a groundbreaking meetup that explored the fusion...

Kubernetes on Docker Desktop in 2 Minutes

Docker Desktop is the easiest way to run Kubernetes on your local machine – it gives you a fully certified Kubernetes cluster...

Docker System Prune: Cleaning Up Your Docker Environment

Docker has revolutionized how software applications are developed, deployed, and run. Containers provide a consistent environment for applications, making them portable...

The Role of Brokers in Financial Markets: A Comprehensive Guide

In the vast and complex world of financial markets, brokers play a crucial role in facilitating transactions between buyers and sellers....

Top 20 companies that use Wasm

WebAssembly (Wasm) is a binary instruction format designed to be as efficient as native machine code. It is being used by...

Docker Dev Tools: Turbocharge Your Workflow!

In today’s rapidly evolving development landscape, maximizing productivity and streamlining workflows are paramount. Docker, with its cutting-edge developer tools, presents an...

What is Real-Time Data Warehousing? A Comprehensive Guide

Organizations are always attempting to extract meaningful insights from their data in real time to influence choices and preserve a competitive...

Announcing the Meetup Event – Container Security Monitoring for Developers using Docker Scout

There are various security tools available today in the market. While there are similarities and differences between all tools of this...

The State of Docker Adoption for AI/ML

Artificial intelligence (AI) and machine learning (ML) are now part of many applications, and this trend is only going to continue....

How to Monitor Node Health in Kubernetes using Node Problem Detector Tool?

Kubernetes is a powerful container orchestration platform that allows users to deploy and manage containerized applications efficiently. However, the health of...

Building a Multi-Tenant Machine Learning Platform on Kubernetes

Machine learning platforms are the backbone of the modern data-driven enterprises. They help organizations to streamline their data science workflows and...

Enhancing Business Efficiency through Streamlined Document Management and Collaboration in Virtual Data Room

Did you know that employee productivity has skyrocketed by 61.8% between 1979 and 2020? That’s incredible! And a big part of...

Implementing Automated RDS Backup and Restore Strategy with Terraform

In today’s fast-paced digital world, data protection and business continuity are of paramount importance. For organizations leveraging Amazon RDS (Relational Database...

How to Fix “Cannot connect to the Docker daemon at unix:/var/run/docker.sock” Error Message?

Docker is a popular platform for building, shipping, and running applications in containers. However, sometimes when you try to run Docker...

Rate Limiting in Redis Explained

Rate limiting is a crucial mechanism used to control the flow of incoming requests and protect web applications and APIs from...

How to Integrate Docker Scout with GitHub Actions

Docker Scout is a collection of software supply chain features that provide insights into the composition and security of container images. It...

The Shift to Virtual Boardrooms: Leveraging Technology for Remote Collaboration and Decision-Making

It's remarkable how boardroom portals have transformed the world. Get acquainted with this technology as well.

Infrastructure as Code (IaC): Navigating the Future of DevOps Tools and Education

In the ever-evolving world of technology, the concept of Infrastructure as Code (IaC) has become an indispensable part of DevOps practices....

Seamless Integration with Tomorrow.io Weather API: Enhance Your App’s Weather Experience

Integrating weather data into your application can significantly enhance its functionality and user experience. By seamlessly incorporating the Tomorrow.io Weather API,...

Using AI in Software Development for a Competitive Advantage

In today’s rapidly evolving technological landscape, software development plays a pivotal role in shaping businesses’ success. Companies strive to deliver innovative,...

Docker Secrets Best Practices: Protecting Sensitive Information in Containers

Docker has revolutionized the way we build, ship, and run applications. However, when it comes to handling sensitive information like passwords,...

The Importance of DevOps for SEO Teams and Processes

Check this simple guide to learn how essential DevOps principles and practices can contribute to the efficiency of your SEO teams...

Performing CRUD operations in Mongo using Python and Docker

MongoDB is a popular open-source document-oriented NoSQL database that uses a JSON-like document model with optional schemas. It was first released in...

Efficient Strategies and Best Practices to Minimize Resource Consumption of Containers in Host Systems

Containers have revolutionized the way applications are deployed and managed. However, as the number of containers increases within a host system,...

How to Boost Employee Engagement with Top-rated Software Solutions

Keeping your employees engaged and motivated in today’s fast-paced business world can be challenging. However, employee engagement is crucial in determining...

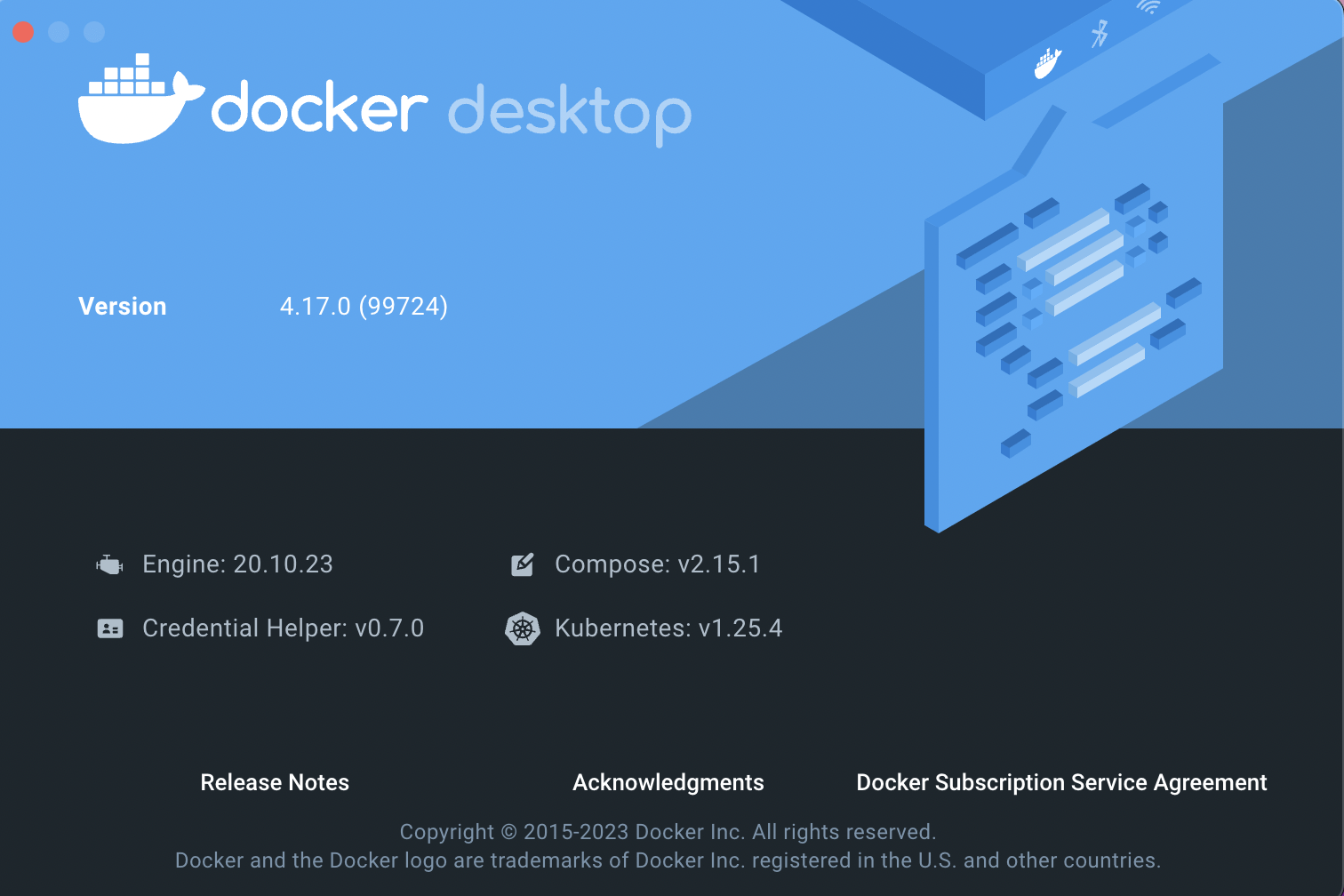

A First Look at Docker Scout – A Software Supply Chain Security for Developers

With the latest Docker Desktop 4.17 release, the Docker team introduced Docker Scout. Docker Scout is a collection of software supply chain features that...

Getting Started with the Low-Cost RPLIDAR Using NVIDIA Jetson Nano

Getting started with the low-cost RPLIDAR on Jetson Nano is an exciting journey that can open up a wide range of...

Introduction to Karpenter Provisioner

Karpenter is an open-source provisioning tool for Kubernetes that helps manage the creation and scaling of worker nodes in a cluster....

Update Your Kubernetes App Configuration Dynamically using ConfigMap

ConfigMap is a Kubernetes resource that allows you to store configuration data separately from your application code. It provides a way to...

Mastering the DevOps Mindset: Essential Tips for Students

The demand for skilled professionals in programming, computer science, and software development is soaring in the rapidly evolving world of technology....

How to Choose an IT Career Path that is Right for You

The field of Information Technology (IT) offers a wide array of career opportunities, each with its own unique set of skills...

Shift Left Testing: What It Means and Why It Matters

Shift Left Testing is an approach that involves moving the testing phase earlier in the software development cycle. In traditional models,...

Docker Vs Podman Comparison: Which One to Choose?

Today, every fast-growing business enterprise has to deploy new features of their app rapidly if they really want to survive in...

Kubernetes 101: A One-Day Workshop for Beginners

Are you new to Kubernetes and looking to gain a solid understanding of its core concepts? Join us for a one-day...

Is it a good practice to include go.mod file in your Go application?

Including a go.mod file in your Go application is generally considered a good practice. The go mod command and the go.mod file were...

Think Beyond the Walls of Your Office

A few years ago, a colleague of mine questioned the time and effort I dedicated to organizing Meetups in and outside...

Cybersecurity’s Paradigm Shift: Embracing Zero Trust Security for the Digital Frontier

In a world plagued by persistent cyber threats, traditional security models crumble under the weight of rapidly evolving attack vectors. It’s...

Linkerd Service Mesh on Amazon EKS Cluster

Linkerd is a lightweight service mesh that provides essential features for cloud-native applications, such as load balancing, service discovery, and observability....

Exploring the Future of Local Cloud Development: Highlights from the Cloud DevXchange Meetup

The recent Cloud DevXchange meetup, organized by LocalStack in collaboration with KonfHub and Collabnix, brought together developers and cloud enthusiasts in Bengaluru for a day of knowledge-sharing...

Automating Configuration Updates in Kubernetes with Reloader

Managing and updating application configurations in a Kubernetes environment can be a complex and time-consuming task. Changes to ConfigMaps and Secrets...

Introducing Karpenter – An Open-Source High-Performance Kubernetes Cluster Autoscaler

Kubernetes has become the de facto standard for managing containerized applications at scale. However, one common challenge is efficiently scaling the...

CI/CD for Docker Using GitHub Actions

Learn how you can use GitHub Actions with Docker containers

How to Effectively Monitor and Manage Cron Jobs

In conclusion, cron jobs can be a powerful tool for automating repetitive tasks, but they can also create problems if...

DevOps and Digital Citizenship: Teaching Responsible Internet Navigation for Seamless Collaboration

In today’s interconnected world, where the internet has become an integral part of our daily lives, it is essential for students...

Adapting to the AI Revolution: How Writers for Hire Embrace DevOps Challenges

The AI revolution is making waves in virtually every field, including DevOps. The DevOps landscape is becoming more complex with each...

DevOps-Security Collaboration: Key to Effective Cloud Security & Observability

This guest post is authored by Jinal Lad Mehta, who works at Middleware, an AI-powered cloud observability tool. She is known for writing...

Enhancing Collaboration in DevOps: Maximizing Group Work Efficiency With Project Management Software

DevOps is a big trend in IT today. This methodology has been known for a while and is still being actively...

Empowering College Students with Disabilities in DevOps: 5 Essential Resources and Tools

Walking into a classroom or firing up a laptop for an online course can bring a rush of excitement and a...

How to build Wasm container and push it to Docker Hub

WebAssembly (Wasm) has gained significant traction in the technology world, offering a powerful way to run code in web browsers and...

Balancing DevOps Workflows: Choosing Between AI and Expert Writers

DevOps is a set of practices that integrates software development (Dev) and IT operations (Ops), aimed at shortening the systems development...

How to Clear Docker Cache?

Explore how to clean Docker cache to improve performance and optimize disk usage.

Understanding the Mindset of Software Engineers: A Guide for Marketers

I already see you rolling your eyes at this title. Publishing an article on the ‘engineering mindset’ here might seem weird....

Managing Containers with Kubernetes: Best Practices and Tools

Manage containers, and achieve optimal performance, security, and scalability with Kubernetes monitoring. Find essential tools for containerization and observability.

Transforming DevOps Practices: The Impact of Microsoft Surface on Team Productivity

Technological progress has touched all spheres of our lives, especially those connected to information technologies. In the last few years, the...